Google DeepMind's Genie Makes Super Mario-like Games from Images

Genie transforms images into interactive Super Mario-like games. The science behind it could be a stepping stone to AGI.

February 29, 2024


At a Glance

- Google DeepMind's Genie can create 2D video games from images, in the style of the popular Super Mario Bros. games.

- The science behind it could be a stepping stone to AGI.

DeepMind made its name in the AI space by using video games to evaluate its algorithmic ideas. Some 14 years and an acquisition by Google later, games still sit at the heart of its research – with its new Genie model letting users turn images into video game scenes.

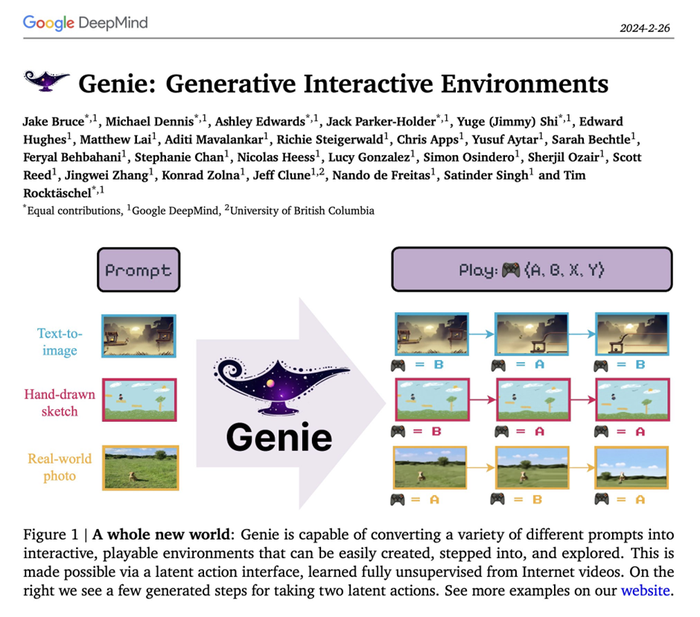

Genie – short for Generative Interactive Environments – was trained on internet videos but can create playable scenes from images, videos, and even sketches that it has not seen before.

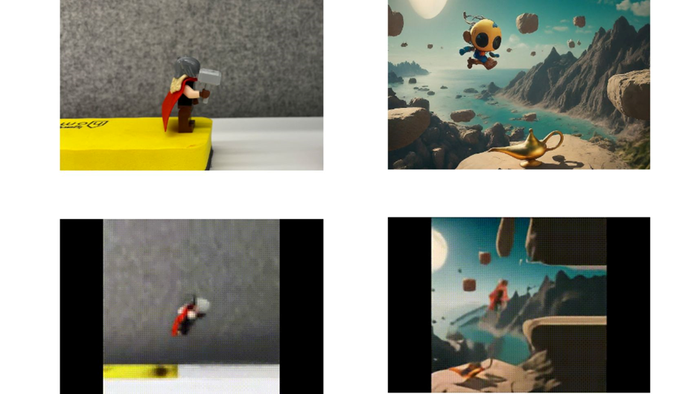

Users can input a real-world photograph of, for example, a clay sculpture, and Genie can turn it into a scene in the style of a 2D platformer; think Super Mario Bros. on the Nintendo Entertainment System. The model can build these game environments from just a single image.

It might seem like a fun idea for an AI model, but Google DeepMind believes this generative model has implications for generalist agents: AI systems designed to handle a wide variety of tasks.

The idea is that Genie is a general method. It learns latent actions, meaning controls it infers on its own rather than from labels, from sources such as videos, and those actions can then be transferred to human-designed environments. The method could be applied to other domains without requiring any additional domain knowledge.

Google DeepMind also applied Genie to other scenarios by training it on videos that carried no action labels. The model was able to infer those actions and learn from new environments without needing extra instructions.

The team behind Genie said the project was “just scratching the surface of what may be possible in the future.”

Credit: Google DeepMind

Let’s-a go

Genie was trained on 200,000 hours of internet videos of 2D platformer games like Super Mario, along with robotics data (RT-1). Although internet videos usually lack action labels, Genie learned fine-grained controls: it identifies which parts of the input are controllable and infers diverse latent actions that are consistent across the generated environments.
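The idea of discovering actions without labels can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Genie's implementation: each frame is reduced to a character's (x, y) position, and plain k-means clustering of frame-to-frame changes stands in for Genie's learned latent action model, which the Genie paper describes as mapping each transition to one of a small, discrete set of codes. The helper names (`transitions`, `learn_codebook`, `nearest`) and the example videos are hypothetical.

```python
import math
import random

# Toy stand-in for video: each frame is just a character's (x, y) position.
# Genie itself learns from raw pixels; everything here is a simplified sketch.

def transitions(video):
    """Turn a sequence of frames into frame-to-frame deltas."""
    return [tuple(b - a for a, b in zip(f0, f1)) for f0, f1 in zip(video, video[1:])]

def nearest(vec, codebook):
    """Vector quantization: index of the closest codebook entry."""
    return min(range(len(codebook)), key=lambda i: math.dist(vec, codebook[i]))

def learn_codebook(deltas, k, iters=20, seed=0):
    """Plain k-means over transitions, standing in for a learned VQ codebook."""
    rng = random.Random(seed)
    codebook = rng.sample(sorted(set(deltas)), k)  # start from k distinct transitions
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for d in deltas:
            clusters[nearest(d, codebook)].append(d)
        # Move each code to the mean of the transitions assigned to it.
        codebook = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else codebook[i]
            for i, members in enumerate(clusters)
        ]
    return codebook

# Unlabeled "videos": nothing records which button was pressed.
videos = [
    [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)],
    [(5, 0), (4, 0), (3, 0), (3, 1)],
    [(0, 3), (1, 3), (1, 4), (0, 4)],
]
deltas = [d for v in videos for d in transitions(v)]
codebook = learn_codebook(deltas, k=3)
labels = [nearest(d, codebook) for d in deltas]
```

Each kind of motion (right, left, up) ends up with its own code, and the "up" code is the same in every video. That is the toy version of latent actions being consistent across environments.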

The model learns from having watched thousands of internet videos, picking up actions like jumping and applying them in a game-like environment. Show Genie a picture of a character near a ledge, for example, and the model can infer that the character could jump, then generate a scene based on that action.

Credit: Google DeepMind

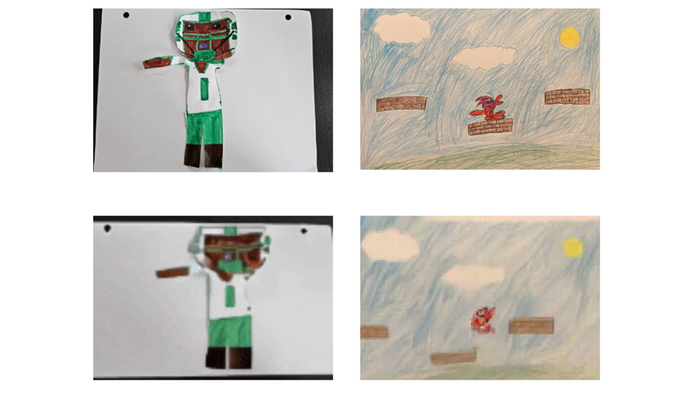

This is similar to how humans learn from videos: we watch, and eventually we pick things up. Genie does the same, and it can even make sense of human-drawn sketches and turn them into game-like representations.

Credit: Google DeepMind

Genie has 11 billion parameters, and Google DeepMind calls it a “foundation world model”, a world model being a system that learns how the world works. For a detailed explanation, read Meta Chief AI Scientist Yann LeCun’s definition on X.

Tim Rocktäschel, a DeepMind research scientist who worked on the Genie project, drew a contrast with Sora, OpenAI’s new video generation model, writing on X (formerly Twitter) that Sora was “really impressive and visually stunning, but as Yann LeCun says, a world model needs actions.”
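Rocktäschel's point can be illustrated with a deliberately tiny Python sketch. It assumes a toy "frame" made of a character's position and three hypothetical latent actions (`ACTION_EFFECTS`); it is not how Genie or Sora is built. A passive video model can only continue what it has already seen, while a world model takes an action at every step, so a user can steer what happens next.

```python
from typing import Dict, List, Tuple

Frame = Tuple[int, int]  # toy frame: a character's (x, y) position

# Hypothetical effects of three latent actions (e.g. right, left, jump).
ACTION_EFFECTS: Dict[int, Tuple[int, int]] = {0: (1, 0), 1: (-1, 0), 2: (0, 1)}

def video_model_step(history: List[Frame]) -> Frame:
    """Passive next-frame prediction: extrapolate the most recent motion.
    There is no input, so the same history always yields the same frame."""
    if len(history) < 2:
        return history[-1]
    (x0, y0), (x1, y1) = history[-2], history[-1]
    return (2 * x1 - x0, 2 * y1 - y0)

def world_model_step(history: List[Frame], action: int) -> Frame:
    """Action-conditioned prediction: the next frame depends on the chosen action."""
    dx, dy = ACTION_EFFECTS[action]
    x, y = history[-1]
    return (x + dx, y + dy)

def play(start: Frame, actions: List[int]) -> List[Frame]:
    """Roll the world model forward one user-chosen action at a time."""
    frames = [start]
    for action in actions:
        frames.append(world_model_step(frames, action))
    return frames

print(video_model_step([(0, 0), (1, 0)]))  # (2, 0): no way to steer it
print(play((0, 0), [0, 0, 2]))             # [(0, 0), (1, 0), (2, 0), (2, 1)]
```

The only difference between the two step functions is the `action` argument, which is exactly what turns a video predictor into something playable.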

There was no word on whether the Genie model will be made available, or if it will feature in any future Google products. You can, however, view example generations on the Genie showcase page.
