Meet Eagle: The Low-Cost, High-Performing Multilingual Model

Open-source Eagle 7B uses a unique architecture that combines the best of recurrent neural networks and transformers


At a Glance

- An international group working with the Linux Foundation created a small but powerful multilingual language model: Eagle 7B.

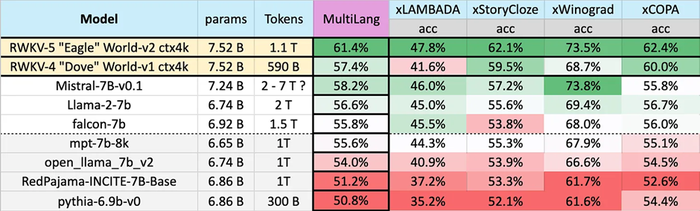

- Eagle 7B outscores popular models including Mistral-7B, Llama 2-7B and Falcon-7B across 23 languages. But it also has flaws.

An international community of AI developers working with the Linux Foundation has created a small but powerful multilingual model that can keep pace with popular open source systems from Mistral and Meta.

Eagle 7B is an attention-free large language model trained on 1 trillion tokens across more than 100 languages.

What makes it unique is that it uses the new RWKV (Receptance Weighted Key Value) architecture, which its creators said in their paper “combines the efficient parallelizable training of transformers with the efficient inference of RNNs” (recurrent neural networks). That means it can go toe-to-toe with transformer systems but the compute is cheaper.

At just 7.52 billion parameters in size, Eagle 7B is a small system but packs a real punch – outscoring popular models including Mistral-7B, Llama 2-7B and Falcon-7B across 23 languages.

Credit: RWKV

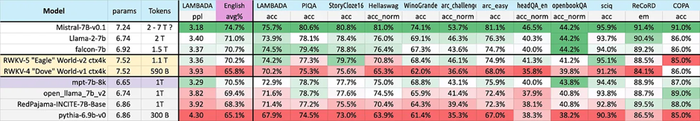

As for its English performance, the model was competitive with rival models, though it lost out on several scores by mere fractions of points.

However, the models that outperformed it were trained on larger numbers of tokens, and Eagle 7B still held its own.

Credit: RWKV

Eagle 7B may not be as strong at English as rival models, but it is cheaper to run: The underlying architecture allows 10 to 100 times lower inference costs when running and training the model.

RWKV started as an EleutherAI community project led by Google Scholar Bo Peng, with training and compute sponsored by Stability AI and others.

What’s powering Eagle 7B?

The model’s abilities stem from the latest version of its unique architecture, RWKV-v5, which is designed to use fewer resources when running and training compared to transformer-based systems.

RWKV-v5 scales linearly, whereas traditional transformers scale quadratically. The team behind it contends that the linear approach performs just as well as transformer systems, while reducing compute requirements by up to 100 times.
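To make the linear-versus-quadratic distinction concrete, here is a rough back-of-the-envelope sketch. It only counts token interactions as a function of sequence length; the function names are illustrative, and the counts ignore constant factors, model width and hardware effects, so the ratios it prints are not the cost savings the team reports.

```python
def attention_pair_count(seq_len: int) -> int:
    """Full self-attention compares every token with every token: n * n pairs."""
    return seq_len * seq_len


def recurrent_step_count(seq_len: int) -> int:
    """A recurrent pass updates its state once per token: n steps."""
    return seq_len


for n in (1_000, 10_000, 100_000):
    pairs = attention_pair_count(n)
    steps = recurrent_step_count(n)
    # The gap between the two counts grows in step with the sequence length.
    print(f"{n:>7,} tokens: {pairs:,} attention pairs vs {steps:,} recurrent steps")
```

The takeaway is the shape of the curves rather than the exact numbers: making the input ten times longer multiplies the quadratic count by 100 but the linear count by only 10.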

RWKV-v5 takes the best of transformers and recurrent neural networks to provide solid performance levels with faster inferencing and training.

The architecture is also attention-free, meaning it does not rely on the computationally intensive attention mechanism of traditional transformers, thereby improving efficiency and scalability.
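As a loose illustration of how a model can be trained like a transformer yet run like an RNN, the toy below computes an exponentially decayed weighted average in two ways: once from the full history, and once with a small running state. This is a simplified sketch, not the actual RWKV-v5 computation; the scalar inputs, the `decay` parameter and both function names are assumptions made for the example.

```python
import math


def parallel_form(keys, values, decay):
    """Each output is computed directly from the full history.

    Outputs do not depend on one another, which is what makes this
    view easy to parallelize across positions.
    """
    outputs = []
    for t in range(len(keys)):
        weights = [math.exp(-decay * (t - i) + keys[i]) for i in range(t + 1)]
        weighted_sum = sum(w * values[i] for i, w in enumerate(weights))
        outputs.append(weighted_sum / sum(weights))
    return outputs


def recurrent_form(keys, values, decay):
    """Same outputs, using only a fixed-size running state (two numbers)."""
    numerator = 0.0
    denominator = 0.0
    shrink = math.exp(-decay)  # how much the past fades at every step
    outputs = []
    for k, v in zip(keys, values):
        weight = math.exp(k)
        numerator = shrink * numerator + weight * v
        denominator = shrink * denominator + weight
        outputs.append(numerator / denominator)
    return outputs


keys = [0.1, -0.3, 0.5, 0.0]
values = [1.0, 2.0, 3.0, 4.0]
a = parallel_form(keys, values, decay=0.5)
b = recurrent_form(keys, values, decay=0.5)
print(all(abs(x - y) < 1e-9 for x, y in zip(a, b)))
```

The recurrent version never looks back at earlier tokens; everything it knows about the past is folded into the running state, so per-token work and memory stay constant as the sequence grows.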

Some flaws

While innovative, RWKV is not without its flaws. The team behind it warned that such models are sensitive to prompt formatting, so users need to be mindful of how they prompt the model.

RWKV-based systems are also weaker at tasks that require lookback – so you will need to order your prompt accordingly. For example, instead of saying ‘For the document above do X,’ which will require a lookback, say ‘For the document below do X.’
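The ordering advice can be captured in a small helper that always puts the instruction ahead of the document. This is a minimal sketch: `build_prompt` is a hypothetical function, and it only handles layout, since the article does not specify the exact prompt template Eagle 7B expects.

```python
def build_prompt(instruction: str, document: str) -> str:
    """Put the instruction first so the model reads the task before the text."""
    return f"{instruction.strip()}\n\n{document.strip()}\n"


prompt = build_prompt(
    "For the document below, list the languages mentioned.",
    "Eagle 7B is trained on 1 trillion tokens across more than 100 languages.",
)
print(prompt)
```

Because the model reads left to right with a fixed-size state, it already knows what to look for by the time it reaches the document.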

While Eagle’s English scores suffered in comparison to its multilingual efforts, the developers wrote in a blog post that they are focused on building “AI for the world - which is not just an English world.”

“It is not fair to compare the multi-lingual performance of a multi-lingual model -vs- a purely English model,” the team added. “By ensuring support for the top 25 languages in the world and beyond, we can cover approximately four billion people, or 50% of the world.”

Access Eagle 7B

Eagle 7B can be used for personal and commercial purposes without restrictions, under its Apache 2.0 license.

You can download Eagle 7B via Hugging Face. You can also try it out for yourself via the demo.

The researchers plan to grow the multilingual dataset powering Eagle to support a wider variety of languages.

Also in the works is a version of the Eagle model trained on two trillion tokens, which could drop around March.
