Nvidia CEO: Two Computing Trends Are Emerging At Once

Product unveiling comes as chipmaker’s market cap soars past $1 trillion

At a Glance

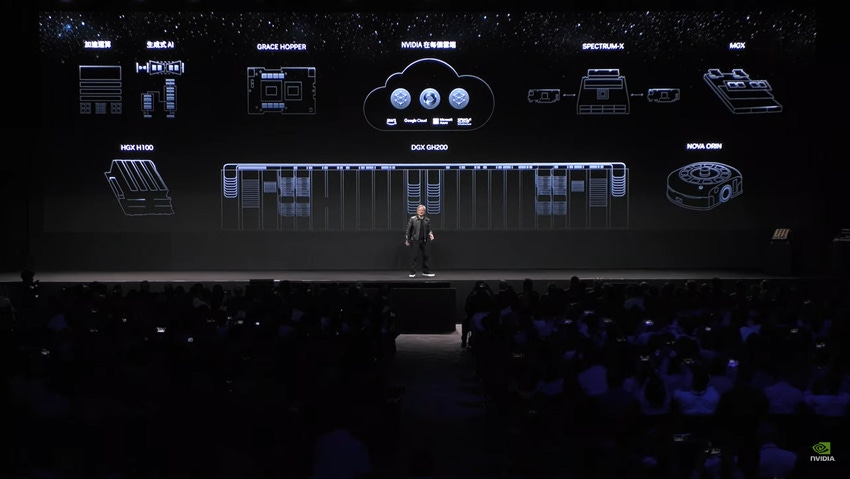

- Nvidia CEO Jensen Huang said a new computing era is here, characterized by accelerated computing and generative AI.

- Huang unveiled a new AI supercomputer, a new 'superchip' and partnerships with SoftBank and WPP.

- Nvidia's market value soared past $1 trillion in intraday trading on Tuesday. It is the most valuable chipmaker in the world.

Nvidia CEO Jensen Huang said the CPU-dominated computing era is ending and two new trends are rising simultaneously: Accelerated computing and generative AI.

He believes each data center will transition from CPU-based infrastructure for general-purpose computing to GPU-based accelerated computing, which can handle the heavy workload of generative AI capabilities for specific domains. He credits this shift to deep learning, which he calls a “new way of doing software.”

“This is really one of the first major times in history a new computing model has been developed and created,” said the chipmaker’s CEO at the recent Computex conference in Taiwan.

Huang contends that technology transitions occur due to efficiency and lower costs. For example, the PC revolution that began in the 1980s made computers affordable for people. Then came smartphones, which bundled a phone, camera, music player and computer in one device. That saved the user money and offered convenience and variety.

Nvidia’s GPUs are the backbone for AI compute. On Tuesday, the company’s market value soared past $1 trillion in intraday trading. It is the most valuable chipmaker in the world.

Huang believes accelerated computing will take off because users who “buy more, save more.”

For example, Huang said it will take $10 million, 960 CPU servers and 11 GWh to train one large language model (LLM). With accelerated computing, $10 million can buy 48 GPU servers, use up 3.2 GWh and train 44 LLMs. To splurge, $34 million buys 172 GPU servers using 11 GWh to train 150 LLMs.

On the lower end, $400,000 buys two GPU servers that use 0.13 GWh to train one LLM.
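
To make the comparison concrete, the figures Huang cited can be reduced to cost and energy per trained model. The short Python sketch below does only that arithmetic; the `Config` class and its field names are illustrative assumptions, not anything Nvidia published, and the numbers are taken directly from the keynote figures above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    """One training setup as quoted in the keynote (names are illustrative)."""
    name: str
    cost_usd: float
    servers: int
    energy_gwh: float
    llms_trained: int

    @property
    def cost_per_llm(self) -> float:
        # Total spend divided by the number of models trained
        return self.cost_usd / self.llms_trained

    @property
    def energy_per_llm(self) -> float:
        # Total energy divided by the number of models trained
        return self.energy_gwh / self.llms_trained


# Figures as reported in the article
CONFIGS = [
    Config("960 CPU servers", 10_000_000, 960, 11.0, 1),
    Config("48 GPU servers", 10_000_000, 48, 3.2, 44),
    Config("172 GPU servers", 34_000_000, 172, 11.0, 150),
    Config("2 GPU servers", 400_000, 2, 0.13, 1),
]

for c in CONFIGS:
    print(f"{c.name:>16}: ${c.cost_per_llm:>12,.0f} per LLM, "
          f"{c.energy_per_llm:.3f} GWh per LLM")
```

On these numbers, the $10 million GPU setup works out to roughly $227,000 and 0.07 GWh per model, compared with $10 million and 11 GWh for the CPU setup. The two-server option costs more per model than the larger GPU setups, which is the sense in which buyers who “buy more, save more.”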

“We want dense computers, not big ones,” he said.

Data centers will muscle up

To be sure, distributing the compute could accomplish LLM training without using accelerated computing. But Huang said it is expensive to build more data centers. His prediction is that existing data centers will be muscled up with accelerated computing servers.

“Make each data center work more,” he said. “Almost everybody is power-limited today.”

The trends are taking off. “The utilization is incredibly high,” Huang said. “Nvidia GPU utilization is so high … almost every single data center is overextended, there are so many different applications using it.”

“The demand is literally from every corner of the world,” he added. Nvidia’s H100 AI chip is in full volume production.

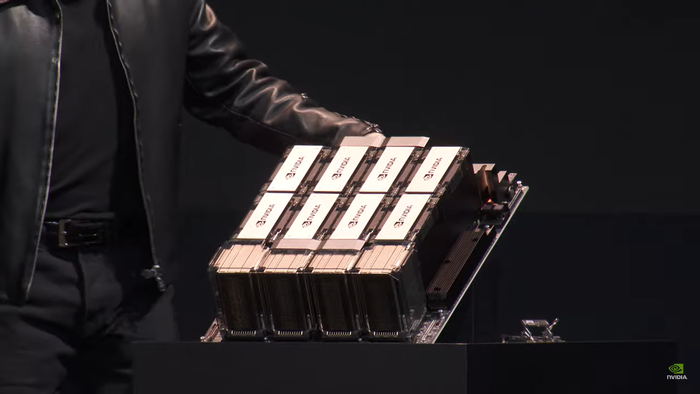

Nvidia CEO Jensen Huang shows off H100 chips, priced at $200,000.

Huang announced that Nvidia is partnering with SoftBank to develop a new platform for generative AI and 5G/6G applications. It will be deployed in SoftBank’s new data centers worldwide to host generative AI and wireless applications on a multi-tenant common server platform.

More than numbers and words

For the first time in history, computers can understand more than numbers and words, Huang said.

“We have a software technology that can understand the representation of many modalities. We can apply the instrument of computer science to so many fields that were impossible before,” he said.

Computers can now learn the language of the structure of many things. This capability came about when unsupervised learning using Transformers learned to predict the next word. Thus, LLMs were created, Huang said.

“We can learn the language of the structure” of many other things, he said.

Once the computer has learned the language of different domains, the user can guide the AI through prompts to generate new information of all kinds, Huang said. This makes it possible to transform one type of information into another, such as text-to-image, text-to-proteins, text-to-music and others.

Meanwhile, the barrier to computing is now “incredibly low,” he added. As any user of ChatGPT can attest, one can use a text prompt to generate content. “We have closed the digital divide. Everyone is a programmer now. You just have to say something to the computer.”

“It’s so easy to use, that’s why it touches every industry,” Huang said.

What’s more, AI “can do amazing things for every single application from the previous era,” he said. “Every application that exists will be better because of AI. This computing era does not need new applications; it can succeed with old applications.”

This is why generative AI is being incorporated into suites of existing products by Microsoft, Google and others.

New computing approach

But AI needs a new computing approach: Accelerated computing built from the ground up.

To that end, Huang showed off the Grace Hopper Superchip, which brings together the Arm-based Nvidia Grace CPU and Hopper GPU architectures using the Nvidia NVLink-C2C interconnect technology. The interconnect provides 900 GB per second of chip-to-chip bandwidth. Huang said the GPU and CPU can reference the same memory, so unnecessary copying is avoided.

The Grace Hopper is in full production.

Grace Hopper Superchip

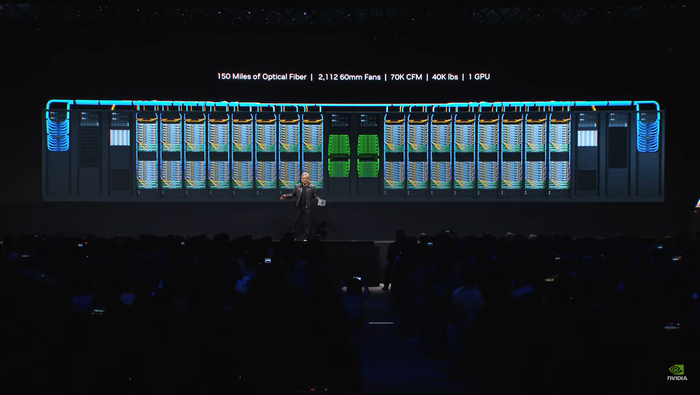

Huang also showed off a new AI supercomputer capable of developing trillion-parameter language models. It connects 256 Grace Hopper Superchips that operate as one “massive” GPU to enable the development of “giant” models for generative AI language applications, recommender systems and data analytics workloads, the company said.

Called DGX GH200, the supercomputer boasts 144 terabytes of shared memory among Grace Hopper Superchips, which are interconnected using NVLink. The supercomputer provides 1 exaflop of performance.
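
As a rough sanity check on those figures, the shared memory can be divided across the 256 superchips. The sketch below assumes the 144 terabytes is spread evenly, which the article does not state, and shows the result under both decimal (1 TB = 1,000 GB) and binary (1 TB = 1,024 GB) conventions because the article does not say which one is meant. The function name is illustrative.

```python
SUPERCHIPS = 256        # Grace Hopper Superchips in one DGX GH200 (from the article)
SHARED_MEMORY_TB = 144  # shared memory across the system (from the article)


def memory_per_superchip_gb(total_tb: float, chips: int, gb_per_tb: int) -> float:
    """Evenly divide total memory across chips (even split is an assumption)."""
    return total_tb * gb_per_tb / chips


decimal_gb = memory_per_superchip_gb(SHARED_MEMORY_TB, SUPERCHIPS, 1000)
binary_gb = memory_per_superchip_gb(SHARED_MEMORY_TB, SUPERCHIPS, 1024)

print(f"Decimal units: {decimal_gb} GB per superchip")
print(f"Binary units:  {binary_gb} GB per superchip")
```

Either way, each superchip would account for somewhere between about 560 and 580 GB of the shared pool.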

DGX GH200

Huang said Google Cloud, Meta and Microsoft will be the first companies to have access to the supercomputer.

Nvidia is also partnering with WPP, the world’s largest ad and marketing agency, to develop an AI content engine that integrates with popular platforms such as Adobe and Getty Images. It will enable WPP’s designers to use generative AI to create highly personalized content. Huang said that ads are currently served by retrieving information. In the future, content will not be retrieved but generated for the individual.

Other announcements include the MGX server specification for building AI, HPC and Omniverse applications, and Spectrum-X, an accelerated Ethernet platform for hyperscale generative AI.

At the end of Huang’s two-hour keynote, even he seemed overwhelmed by the slew of announcements.

“This is too much,” Huang said. “This is too much.”
