Say Bonjour to CroissantLLM: The Mini Open Bilingual Model

CroissantLLM was trained on more tokens than Llama 2. It is a 'truly bilingual' language model that understands nuance.

At a Glance

- French researchers developed CroissantLLM, a small language model with high fluency in French and English.

- It is small at 1.3 billion parameters but outperforms its weight class in French. It runs well on PCs and mobile devices.

- Researchers trained it on high-quality French content. The goal: Give French equal footing with English in language models.

There is a delectable new open-source model for English and French workloads, and it is snackable enough in size to run on mobile devices.

CroissantLLM is designed to run on consumer-grade local hardware while being “full open, and truly bilingual,” according to a blog post by Manuel Faysse, a lead researcher on the team that created it.

The goal is to make French on par with English in AI models. “With CroissantLLM, we aim to train a model in which English is not the dominant language and go for a 1:1 ratio of English and French data!” he wrote.

The model has just 1.3 billion parameters but was trained on three trillion tokens, more than the Llama 2 models. Its training data included high-quality French content such as legal documents, business data, cultural content and scientific information. It uses the Llama model architecture.

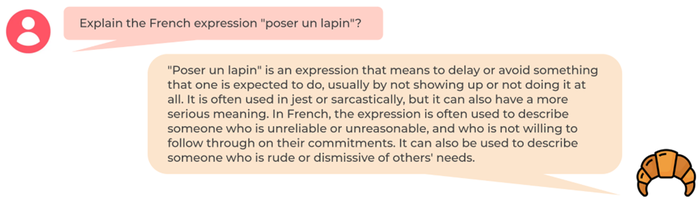

For example, you can prompt the model to explain French terms; Croissant’s deep linguistic knowledge brings out the nuances of the language. Et voilà!

Credit: "CroissantLLM: A Truly Bilingual French-English Language Model" https://arxiv.org/pdf/2402.00786.pdf

The model and the underlying datasets were created by researchers mainly from French universities and businesses, including CentraleSupélec at Université Paris-Saclay, Illuin Technology in Neuilly-sur-Seine, France, Sorbonne Université in Paris, and others.

Égalité with English

Faysse said a big challenge was getting enough high-quality French content for the training dataset. The team collected, filtered and cleaned data from varied sources and modalities, including webpages, transcriptions, movie titles and others.

They collected more than 303 billion tokens of monolingual French data and 36 billion tokens of French-English high-quality translation data. “We craft our final 3 trillion token dataset such that we obtain equal amounts of French and English data after upsampling,” Faysse said.
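As a rough illustration of the upsampling arithmetic, the sketch below estimates how many passes over the monolingual French data would be needed to fill half of a three-trillion-token budget. It assumes an exact 50/50 split and ignores the translation data. The function name and that simplification are illustrative only, not the team's actual recipe.

```python
def upsampling_factor(available_tokens: float, target_tokens: float) -> float:
    """Return how many passes over a corpus are needed to fill a token budget."""
    if available_tokens <= 0:
        raise ValueError("available_tokens must be positive")
    return target_tokens / available_tokens


TOTAL_BUDGET = 3e12       # three trillion training tokens (from the article)
FRENCH_AVAILABLE = 303e9  # monolingual French tokens collected (from the article)
FRENCH_SHARE = 0.5        # assumption: exact 1:1 split, translation data ignored

french_target = TOTAL_BUDGET * FRENCH_SHARE
factor = upsampling_factor(FRENCH_AVAILABLE, french_target)
print(f"French target: {french_target:.2e} tokens, about {factor:.2f} passes")
```

Under these simplified assumptions the French data would be seen roughly five times, which shows why collecting more high-quality French content mattered to the team.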

He said the team purposely made CroissantLLM small after noticing that one of the biggest hurdles to widespread adoption of AI models is the difficulty in getting them to run on consumer-grade hardware.

Notably, the most downloaded models on Hugging Face were not the best performers, like Llama 2-70B or Mixtral 8x7B, but smaller models like Llama 2-7B or Mistral 7B, which are “easier and cheaper to serve and finetune,” he said.

CroissantLLM's small size lets it run “extremely quickly on lower end GPU servers, enabling for high throughput and low latency” as well as on CPUs and mobile devices at “decent speeds,” Faysse wrote.

The trade-off, he said, is that it is weaker than larger models at generalist capabilities such as reasoning, math and coding. But the team behind CroissantLLM believes it will be “perfect” for specific industrial applications, translations and chat functionality where larger models are not necessarily needed.

The researchers also introduced a new French benchmark to assess non-English language models: FrenchBench. FrenchBench Gen assesses tasks like title generation, summarization, question generation, and question answering, relying on the high-quality French Question Answering Dataset, FQuAD. The Multiple Choice section of FrenchBench tests reasoning, factual knowledge, and linguistic capabilities of models.

When tested, CroissantLLM was among the best-performing models for its size in French, and was even competitive with Mistral 7B.

Access CroissantLLM

You can download CroissantLLM Base and the Chat version from Hugging Face. The technical report detailing the model’s underlying architecture can be read via arXiv.
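For readers who want to try the model locally, here is a minimal sketch using the Hugging Face `transformers` library. The repository id and the `generate_text` helper are assumptions made for illustration; check the hub for the exact names of the Base and Chat checkpoints. Running it requires `transformers` and PyTorch to be installed.

```python
# Assumption: this repository id is illustrative; verify it on the Hugging Face hub.
MODEL_ID = "croissantllm/CroissantLLMBase"


def generate_text(prompt: str, model_id: str = MODEL_ID, max_new_tokens: int = 40) -> str:
    """Load a causal language model from the hub and continue the prompt."""
    # Imported lazily so this file can be loaded without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Tokenize the prompt into PyTorch tensors and generate a continuation.
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_text("Bonjour, je m'appelle")` downloads the weights on first use and returns the prompt followed by the model's continuation.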
