Unleashing Generative AI’s Next Wave at the Edge

Perf/Watt and Application-Driven LLMs are keys to powering Generative AI at the Edge

February 29, 2024

Generative AI has captured widespread interest by offering businesses and consumers unprecedented opportunities to directly utilize AI in ways that were previously the stuff of science fiction. Yet, the very expansion of computing power and AI capabilities that has enabled these advancements is now becoming a challenge, as AI training and inference emerge as the dominant computing tasks of the 2020s. In particular, the hardware currently being used to put GenAI at our collective fingertips presents a significant obstacle to extending the capabilities of emerging AI technologies to edge computing, which could unlock considerable value.

The progress in Generative AI so far has primarily focused on servers and the training of ever-growing language models. This current focus is just the beginning, setting the stage for broader technology adoption through scalable deployment at the edge. There is a host of additional use cases that are poised to create a much larger wave of embedded applications for GenAI, from robotics and consumer electronics to security and autonomous driving. However, achieving integration at this scale brings technological hurdles such as energy efficiency, on-device fine-tuning, reliability and cost into sharp focus, all of which demand tailored system-on-chip (SoC) designs.

Ambarella, a leading company in edge AI semiconductors, has been at the forefront of delivering video and AI solutions for both consumer and business needs for more than two decades. The company has long addressed the unique requirements of edge AI computing with a purpose-built approach: its AI SoCs are tailored to run the specific algorithms of the applications they target. The aim is to provide the industry’s best power efficiency and lowest latency, while consistently delivering the best AI performance per watt with each of its market-specific SoC families. That approach, which Ambarella calls “algorithm first,” is now being extended to address the intensive needs of Generative AI workloads efficiently at the edge.

Ambarella’s latest strategic direction to embed Large Language Models (LLMs) and Generative AI into edge AI applications comes at a crucial time when the OEMs designing their next-generation edge devices are looking to make this pivotal shift. This move caters to the rising tide of open-source AI innovation and the demand for models that fall within the 5 to 50 billion parameter range, which Ambarella is already demonstrating at the edge. By targeting the so-called “missing middle,” Ambarella aims to once again optimize performance per watt for this burgeoning market, including demonstrated support of various transformer-based models, as well as LLMs with up to 34 billion parameters.

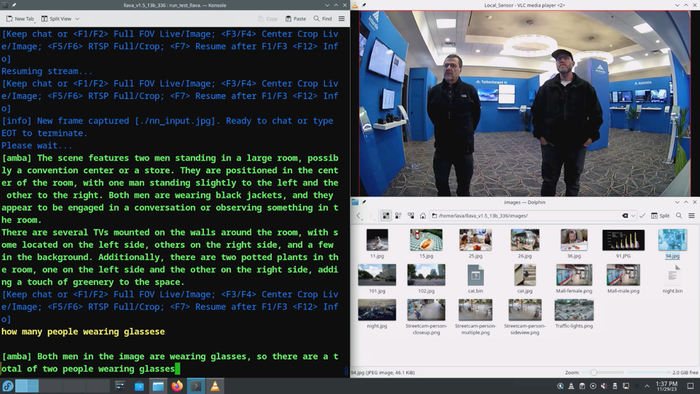

In particular, Ambarella's recent unveiling of its Cooper™ Developer Platform during CES 2024, in combination with the announcement of its latest N1 SoC series, highlights the company’s ability to run complex multi-modal generative AI models like LLaVA. In fact, Ambarella also featured several demos showcasing these capabilities during the show, including live, spontaneous queries of a LLaVA model running on the N1 SoC, which provided real-time textual analysis of both live camera feeds and randomly selected still images (see Figure 1). These new offerings further demonstrate Ambarella’s commitment to advancing edge AI technology.

Figure 1: Ambarella demonstrates the N1 SoC during CES via real-time queries of a multi-modal LLM.

The ability to run transformer-based models efficiently extends to a broad range of new GenAI applications, from powerful edge servers to AI IoT applications, while also providing a superior developer experience. Ambarella’s proprietary AI accelerator, the CVflow® inference engine, is now in its third generation and was designed from the ground up to provide efficiency in machine learning inference. This is also at the heart of how Ambarella enabled the new N1 SoC to run key open-source LLMs effectively at the edge. Alongside this hardware, Ambarella’s new Cooper software platform provides a comprehensive toolset for developers, reinforcing its commitment to democratizing AI model development and optimizing computing power for the edge across a broad range of applications.

The evolution of Generative AI at the edge represents a critical frontier in computing, with significant implications for efficiency and performance in a multitude of applications. Find out more about Generative AI’s evolution and how Ambarella can help you lead the next wave of innovation at the edge: watch a video on the Cooper Developer Platform, read a new white paper from Omdia and Ambarella, and register your interest in receiving a Cooper Developer Kit at www.ambarella.com/Cooper.

A new report from Omdia and Ambarella: Generative AI At The Cutting Edge
