What is a Large Language Model?

Learn about the different types of large language models and how they can be used to improve your machine learning systems.

The rise of generative artificial intelligence (AI) has brought large language models to consumers and enterprises alike. As complex tools, trained through machine learning, large language models are capable of general-purpose understanding and generation of human languages and code.

This has forced a rapid rethink of the responsible AI tools and techniques necessary to rein in and then hopefully align large language models with both organizational requirements and governmental regulations over time.

What is a language model?

Alexa and Siri can process speech audio. You can use ServiceHub to handle your customer service needs. And you can translate phrases at the touch of a button using Google Translate. But none of these natural language processing (NLP) tasks would be possible without a language model.

The concept of a “language model” has existed for decades – in essence, language models are probabilistic machine learning models of natural languages, which can be used for numerous tasks, including speech recognition, machine translation, answering questions, semantic searching, and optical character and handwriting recognition.

By analyzing data such as text, audio or images, a language model can learn from context to predict what comes next in a sequence. Most often, language models are used in NLP applications that generate text-based outputs, such as machine translation.

For example, when you start typing an email, Gmail may complete the sentence for you. This happens because Gmail has been trained on volumes of data to know that when someone starts typing “It was very nice …” often the next words are “… to meet you.”
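
The autocomplete behavior described above can be illustrated with a very small sketch. It assumes a made-up four-sentence corpus and a simple word-pair counting approach; the corpus, the `complete` function and its parameters are hypothetical and are not how Gmail actually works.

```python
from collections import Counter, defaultdict

# Toy training corpus (hypothetical); a real system learns from far more text.
corpus = [
    "it was very nice to meet you",
    "it was very nice to meet you today",
    "it was very nice to see you",
    "it was very kind of you",
]

# Count which word follows each word in the corpus.
next_word_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, following in zip(words, words[1:]):
        next_word_counts[current][following] += 1


def complete(prefix, max_words=3):
    """Greedily append the most frequent next word, up to max_words times."""
    words = prefix.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        candidates = next_word_counts.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in the corpus
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


print(complete("It was very nice"))  # it was very nice to meet you
```

Because "nice" is most often followed by "to", and "to" by "meet", the sketch reproduces the completion from the example. Production systems rely on far richer context than the single preceding word used here.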

How do language models work?

Language models are trained on sizable amounts of data by an algorithm. The algorithm uses the context in that data to learn what is likely to come next. The model then applies what it has learned to produce the desired outcome, such as making a prediction or producing a response to a query.

Essentially, a language model learns the characteristics of basic language and applies what it has gleaned to new phrases.

There are several different probabilistic approaches to modeling language, which vary depending on the purpose of the language model. From a technical perspective, the various types differ by the amount of text data they analyze and the math they use to analyze it.

For example, a language model designed to generate sentences for an automated Twitter bot may use different math and analyze text data differently than a language model designed for determining the likelihood of a search query.

What are the different types of language models?

Types of Large Language Models

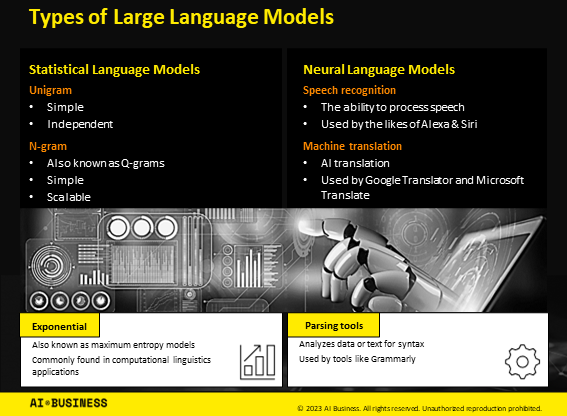

There are two main types of language models:

Statistical Language Models – which include probabilistic models that can predict the next word in a sequence.

Neural Language Models – which use neural networks to make predictions.

Statistical Language Model types

Unigram – Among the simplest types of language model. Unigram models evaluate terms independently and do not require any conditioning context to make a decision. They are used for NLP tasks such as information retrieval.

N-gram – N-grams (also known as Q-grams) are simple and scalable. These models assign a probability to the next item in a sequence based on the items that immediately precede it. N-gram models are widely used in probability, communication theory and statistical natural language processing.

Exponential – Also referred to as maximum entropy models. These models evaluate text via an equation that combines feature functions and n-grams. Essentially, this approach specifies features and parameters of the desired results and leaves analysis parameters vague. Exponential models are most commonly found in computational linguistics applications.
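
To make the difference between the unigram and n-gram entries above concrete, here is a minimal sketch that scores the same words with both kinds of model. The toy corpus, the function names and the choice of add-one smoothing are assumptions made for illustration only.

```python
import math
from collections import Counter

# Toy corpus (hypothetical), already split into tokens.
corpus = "the cat sat on the mat . the dog sat on the rug .".split()

unigram_counts = Counter(corpus)
bigram_counts = Counter(zip(corpus, corpus[1:]))
vocab_size = len(unigram_counts)
total_tokens = len(corpus)


def unigram_log_prob(words):
    """Score each word independently, ignoring its neighbours (add-one smoothed)."""
    return sum(
        math.log((unigram_counts[w] + 1) / (total_tokens + vocab_size))
        for w in words
    )


def bigram_log_prob(words):
    """Score each word conditioned on the previous word (add-one smoothed)."""
    return sum(
        math.log((bigram_counts[(prev, w)] + 1) / (unigram_counts[prev] + vocab_size))
        for prev, w in zip(words, words[1:])
    )


natural = "the cat sat".split()
scrambled = "sat cat the".split()

# Word order does not matter to the unigram model...
print(math.isclose(unigram_log_prob(natural), unigram_log_prob(scrambled)))
# ...but the bigram model prefers the order it saw during training.
print(bigram_log_prob(natural) > bigram_log_prob(scrambled))
```

Both checks print `True`: the unigram model cannot tell the two orderings apart, while the bigram model uses one word of conditioning context to favor the natural one.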

Neural Language Model types

Speech Recognition – The likes of Alexa and Siri are given their voices through this model type. Essentially, it gives a device the ability to process speech audio.

Machine Translation – The conversion of one language to another via a machine. Google Translate and Microsoft Translator use this type.

Parsing Tools – This model type analyzes data or a sentence to determine whether it follows grammatical rules. Tools like Grammarly use parsing to understand a word’s context to others in a document.

What is a large language model?

Large language models are a type of language model that can understand and generate language. They are trained on massive datasets, which are often derived from raw sources such as social networks and code repositories. Large language models can help to fuel the new wave of generative AI, which is defined by its ability to produce new and original work of its own.

With just a simple text, image or audio prompt, generative AI can produce content in seconds on spec—be it an original essay on trickle-down economics, a picture of New York drawn in the style of Monet or a rap about Reese’s Pieces.

Well before the emergence of generative AI, market researchers concluded that natural language processing models trained on these public data sets inherently contain more bias than those trained on highly curated data sets. For this reason, many enterprise large language model solutions incorporate training (or fine-tuning) data derived from highly curated data sets and, increasingly, from data sets that language models generate.

What are some examples of large language models available today?

ChatGPT – ChatGPT is the application that truly kick-started the mainstream public’s fascination with AI.

Released in November 2022, ChatGPT is an interface application that allows users to ask it questions and generate responses.

It launched using a combination of InstructGPT and GPT-3.5, and premium versions of the application were later powered by the more capable GPT-4.

ChatGPT has gone on to act as the basis for a series of Microsoft products after the software giant invested in OpenAI to gain access to the application.

ChatGPT is a closed system, meaning OpenAI keeps full control and ownership of the application. OpenAI has kept the parameter levels of GPT-4 private.

LLaMA – Large Language Model Meta AI (LLaMA) is designed for researchers and developers to build models.

LLaMA is an open source model designed to be smaller than the likes of GPT-3. It’s designed for users who lack the computing power to develop language models.

Since its release in late February 2023, LLaMA has been routinely fine-tuned by researchers to create other language models, such as Vicuna.

I-JEPA – I-JEPA is an AI model published by Meta in June 2023. The model itself is not the star, but rather how it was built using a new architecture.

The JEPA approach can predict missing information akin to a human’s ability for general understanding, something the generative AI method cannot do.

Meta’s chief AI scientist Yann LeCun has continuously proposed the idea that deep learning AI models can learn about their surroundings without the need for human intervention. The JEPA approach aligns with that vision and doesn’t involve any overhead associated with applying more computationally intensive data augmentations to produce multiple views.

PaLM 2 – Google’s flagship language model, unveiled at the company’s annual I/O conference. The model supports over 100 languages and is designed to be fine-tuned for domain-specific applications.

PaLM 2 comes in a variety of sizes, each of which is named after an animal to represent its size. Gecko is the smallest, followed by Otter and Bison, up to Unicorn, the largest.

Auto-GPT – Short for Autonomous GPT, Auto-GPT is an open source project attempting to provide internet users access to a powerful language model.

Auto-GPT is built off OpenAI’s GPT-4 and can be used to automate social media accounts or generate text, among other use cases.

The model grew popular online following its April 2023 launch, with the likes of former Tesla AI chief Andrej Karpathy among those praising the model’s abilities.

Gorilla – The first AI model on this list to utilize Meta’s LLaMA as its body, Gorilla was fine-tuned to improve its ability to make API calls – or more simply, work with external tools.

The end-to-end model is designed to serve API calls without requiring any additional coding and can be integrated with other tools.

Gorilla can be used commercially in tandem with Apache 2.0-licensed LLMs.

Claude – Claude is developed by Anthropic, which was founded by former OpenAI staff who left over disagreements about close ties with Microsoft.

Anthropic went on to develop Claude, a chatbot application not too dissimilar to ChatGPT apart from one thing: an increased focus on safety.

Claude uses constitutional AI, a method developed by Anthropic to prevent it from generating potentially harmful outputs. The model is given a set of principles to abide by, almost like giving it a form of 'conscience.'

Stable Diffusion XL – Stable Diffusion XL is the latest iteration of the text-to-image model that rose to fame in 2022.

SDXL 0.9 also boasts image-to-image capabilities, meaning users can use an image as a prompt to generate another image. Stable Diffusion XL also allows for inpainting, where it can fill in missing or damaged parts of an image, and outpainting, which extends an existing image.

Dolly/Dolly 2.0 – Named after Dolly the sheep, the world’s first cloned mammal, the Dolly AI models from Databricks are designed to be small and much less costly to train compared to other models on this list.

Dolly is a fine-tuned version of EleutherAI’s GPT-J language model. Dolly is designed to be highly customizable, with users able to create their own ChatGPT-like chatbots using internal data.

Dolly 2.0 is built using EleutherAI’s Pythia model family. The later iteration was fine-tuned on an instruction-following dataset crowdsourced among Databricks employees. It’s designed for both research and commercial use.

XGen-7B – XGen-7B, developed by Salesforce, is a family of large language models designed to sift through lengthy documents to extract data insights.

Salesforce researchers took a series of seven-billion-parameter models and trained them using Salesforce’s in-house library, JaxFormer, as well as public-domain instructional data. The resulting models can handle sequence lengths of up to 8,000 tokens and were trained on up to 1.5 trillion tokens.

Vicuna – Vicuna is an open source chatbot and the second model on this list to be a fine-tuned LLaMA model. To fine-tune it, the team behind Vicuna used user-shared conversations collected from ShareGPT.

It cost LMSYS Org just $300 to train the model. Its researchers claim that Vicuna achieves more than 90% of the quality of OpenAI ChatGPT and Google Bard while outperforming other models like LLaMA and Stanford Alpaca. It’s important to note that OpenAI hasn’t published anything on GPT-4, which now powers part of ChatGPT, so it’s difficult to verify those findings.

Inflection-1 – Inflection-1 is the model developed by the AI research lab Inflection to power Pi.ai, its virtual assistant application.

Inflection used “thousands” of H100 GPUs from Nvidia to train the model. The startup applied proprietary technical methods to enable the model to perform on par with the likes of Chinchilla from Google DeepMind and Google's PaLM-540B.

Inflection kept its language model work entirely in-house, from data ingestion to model design. The model will, however, be available via Inflection’s conversational API soon.

What are large language models used for?

Large language models can perform a variety of natural language processing tasks, including:

Text generation and summarization – Generating human-like text in detailed responses and answering a variety of questions. This also includes summarization: parsing extensive pieces of information to deliver concise, understandable summaries, which makes these models a powerful tool for distilling complex content into more manageable forms.

Chatbots – The most common example most humans interact with. Models power chatbots to conduct basic tasks like answering queries and directing customers. Natural language processing-powered chatbots can be used to save humans from handling basic tasks, only to be brought in for more advanced circumstances.

Code generation – Some large language models can generate code across multiple programming languages, offering solutions to coding problems and helping with debugging and demonstrating coding practices. The results, while generally reliable, should still be reviewed for accuracy and optimality. ChatGPT is the most popular AI tool among developers, according to a Stack Overflow survey.

Image generation – Large language models can be used to generate images from natural language prompts.

Virtual assistants – By utilizing APIs, large language models can be applied to many applications, including as virtual assistants. For example, by accessing APIs for calendars, Gorilla can be used to power virtual assistant applications. The model could, when queried, return the current date without taking any input, for example.

Translation – Large language models trained on multiple languages can be used to translate large amounts of information.

Security – NLP models can be used to improve a business’s security arsenal. An enterprise can integrate model-powered algorithms that can extract additional context from a user’s personal information and generate questions for them to answer to provide them access.

Operating robots – A team of engineers at Microsoft showcased a demo of ChatGPT connected to a robotic arm and tasked to solve some puzzles. ChatGPT asked the researchers clarification questions when the user’s instructions were ambiguous and wrote code structures for a drone it was controlling in another experiment.

Developing language models – Some large language models are used to help other language and AI models. For example, LLaMA is an open-source model designed for users who lack the computing power to develop language models. LLaMA has formed the underlying basis for a variety of open-source AI models, including Dolly, Alpaca and Gorilla.

Sentiment analysis – Using natural language processing, some large language models can identify subjective information and affective states. Models can power sentiment analysis tools to identify emotions and contexts depicted in a text document. Sentiment analysis is commonly found in product review monitoring and grammar-checking tools.

Audio generation and speech processing – Some large language models, when combined with an audio generation model, can be used to generate text and speech for speech recognition and speech-to-speech translation. Google’s PaLM 2 is one example: when combined with AudioLM, PaLM 2 can leverage larger quantities of text training data to assist with speech tasks. Google contends that adding a text-only large language model to an audio-generative system improves speech processing and outperforms existing systems for speech translation tasks.

Health care applications – Large language models have also seen use in the health care sector. For example, Google’s Med-PaLM-2 can be used to identify medical issues in images, such as X-rays. According to Google researchers, Med-PaLM-2 achieved a nine-times reduction in inaccurate reasoning, approaching the performance of clinicians answering the same set of questions.

Search improvements – Using natural language prompts in a search tab, some models can access a search-focused API, like Wikipedia search, to return short text snippets or have an improved understanding of tasks. For example, instead of listing all files under a certain name, it would list the most recent file relevant to the context.

Document and data analysis – Large language models can be used to obtain insights from lengthy documents, books, and unstructured sources.

Data mining – Used to find anomalies or correlations within large datasets, data mining can be used to extract information in an enterprise setting, like customer relations management, or to obtain relevant data to build an AI model.
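
The virtual assistant item in the list above mentions a model returning the current date by calling an API. The sketch below shows one way an application might execute such a call. The JSON call format, the `TOOLS` registry and the `run_tool_call` helper are all hypothetical, and the model output is simulated rather than produced by Gorilla or any real model.

```python
import json
from datetime import date


def get_current_date():
    """Return today's date as an ISO 8601 string; takes no input."""
    return date.today().isoformat()


# Hypothetical registry mapping tool names to Python functions.
TOOLS = {"get_current_date": get_current_date}


def run_tool_call(model_output):
    """Parse a JSON tool call emitted by a model and run the matching tool."""
    call = json.loads(model_output)
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Stand-in for what a model might emit when asked "What is today's date?"
simulated_model_output = '{"tool": "get_current_date", "arguments": {}}'
print(run_tool_call(simulated_model_output))
```

Keeping an explicit registry means the application, not the model, decides which functions can be executed, so an unrecognized tool name is rejected instead of being run.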

What are the advantages of using a large language model?

Advantages and Disadvantages of using a Large Language Model

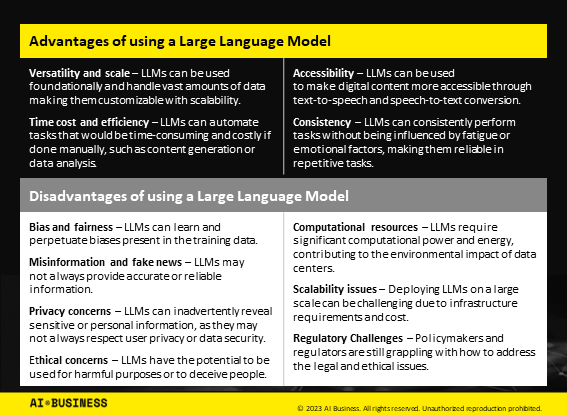

As already described, large language models have many advantageous, specific use cases. But, in a more general sense, a large language model can provide organizations and individual users with numerous advantages, including:

Versatility and scale – Large language models can be used foundationally, allowing developers to customize them for more specific use cases. They can be tailored for use across several tasks and needs via additional training. Large language models can also handle vast amounts of data and scale up to accommodate increasing workloads or datasets.

Time cost and efficiency – A large language model can automate tasks that would otherwise be time-consuming and costly if done manually, such as content generation or data analysis.

Accessibility – Large language models can be used to make digital content more accessible for individuals with disabilities through text-to-speech and speech-to-text conversion.

Consistency – They can consistently perform tasks without being influenced by fatigue or emotional factors, making them reliable in repetitive tasks.

What are the disadvantages of using a large language model?

While large language models provide several benefits, there are certain disadvantages to their use as well, including:

Bias and fairness – Large language models can learn and perpetuate biases present in the training data, leading to unfair or biased outputs, which can contribute to discrimination and inequality.

Misinformation and fake news – Large language models can generate or spread misinformation. They may not always provide accurate or reliable information, especially when dealing with controversial or unverified topics.

Privacy concerns – Large language models can inadvertently reveal sensitive or personal information, as they may not always respect user privacy or data security.

Ethical concerns – There are ethical concerns regarding the creation and use of such models, including their potential to be used for harmful purposes or to deceive people.

Computational resources – Developing and running large language models requires significant computational power and energy, contributing to the environmental impact of data centers.

Scalability issues – While large language models are scalable, deploying these models on a large scale can be challenging due to infrastructure requirements and cost.

Regulatory Challenges – Policymakers and regulators are still grappling with how to address the legal and ethical issues surrounding these models, which can create uncertainty in their use.

What are the best practices for using a large language model?

The problem of governing large language models is a complex and nuanced undertaking that demands cooperation between business and technology owners, with both parties agreeing and adhering to a single corporate policy governing the behavior and use of large language models.

Large language models present many challenges for companies seeking to use them responsibly. Large language models can be highly unpredictable, which may result in output that strays from corporate policies concerning language toxicity, bias, and accuracy.

The operationalization of large language models (LLMOps) fortunately builds directly upon the operational tools and techniques already established for more traditional forms of ML (MLOps).

There are three major areas of emphasis to which practitioners must pay careful attention:

The data used to train and fine-tune large language models.

The prompts used to direct and provide context for large language models.

The model outputs in response to those prompts.

The nature of large language models, which allows them to display serviceable capabilities across a wide panorama of use cases, also makes them harder to govern, necessitating the creation of new tools and best practices specific to responsible LLM development.

Perhaps the best way to begin discussing the safe use of large language models in an enterprise setting is to first look backward to a time before transformer models dominated the AI conversation, that is, before the launch of ChatGPT. At that time and before, words like “alignment” and “guardrails” had not yet figured in AI development efforts. Instead, the notion of responsible AI centered around several very well-understood goals, including the following:

Privacy - Perhaps the most understood aspect, AI system privacy concerns the expectation and enforcement of control over a user’s privacy.

Accountability - One of the most difficult issues to pin down, accountability refers to the ability of an AI solution to be held accountable for its actions.

Safety - To what extent might an AI system represent a danger to its users?

The extreme speculation that has swirled around generative AI might suggest that it has already disrupted, perhaps even overturned, many aspects of society, both professional and personal. Jobs, markets, and even fundamental institutions appear poised for disruption at the hands of this newfangled branch of AI.

Therefore, the temptation is to throw out all that is known about AI, including how to build responsible AI solutions using the guidelines mentioned above. That would be a mistake. At their core, large language models are nothing more than a highly specialized (or generalized, depending on one’s point of view) implementation of deep learning. The same practices and tools that power MLOps can be leveraged to address LLMOps concerns, providing, at a minimum, a means of infusing DevOps into both data engineering and ML to provide a methodology for continuous integration and continuous deployment of large language models.

Considering this problem conceptually, the first course of action for any organization seeking to minimize risk in deploying large language models involves setting articulated expectations. That means establishing a well-defined code of conduct for practitioners, employees, and customers aligned with corporate mandates covering issues like inclusiveness, fairness, reliability, privacy, security, transparency, etc. With this document in hand, companies must then build a set of measures and requirements (relying on established standards like the National Institute of Standards & Technology’s Trustworthy and Responsible AI framework) necessary to meet this code of conduct. Those measures will likely cover the following areas:

Output quality (bias, format, etc.)

Output accuracy (misinformation, hallucinations, etc.)

Cyber (privacy, security, etc.)

Responsibility (copyright, ownership, etc.)

Vulnerability (potential for misuse and abuse)

With these expectations now firmly in hand, project owners must then assemble a responsible AI toolkit capable of observing, documenting, and responding to changes within all LLM metrics. What kind of metrics? In looking just at the rapidly evolving space of prompt engineering, companies will evaluate the way different prompts impact model outputs using both traditional and LLM-specific tools and practices:

A/B testing compares discrete prompt iterations to identify the most accurate output.

Red teaming is an important practice borrowed from cyber security. Red teaming involves actively searching for a means of circumventing model controls surrounding safety, bias, harmful language, etc.

Basic NLP testing applies traditional NLP measures like Bilingual Evaluation Understudy (BLEU) or Recall-Oriented Understudy for Gisting Evaluation (ROUGE) scores.

Traditional ML scoring uses F1 scores, confusion matrices, and other measures to compare ground truth and model outputs.

Self-sufficiency scoring evaluates a set of candidate model outputs to determine the one outcome that is most closely aligned with some ground truth.

Hallucination rating measures the probability that a model will hallucinate in response to a given prompt, using a ground truth data reference—often a retrieval augmented generation (RAG) resource using corporate data.

Prompt error rates measure the factual or semantic distance between ground truth and prompt output.
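
As a rough illustration of how A/B testing and F1-style scoring from the list above might fit together, the sketch below compares the outputs of two prompts against a ground-truth answer using token-overlap F1. This is a simplified stand-in in the spirit of unigram-overlap measures, not an official BLEU or ROUGE implementation. The prompt names, the example texts and both helper functions are hypothetical.

```python
from collections import Counter


def token_f1(reference, candidate):
    """Token-overlap F1 between a ground-truth answer and a model output."""
    ref_tokens = reference.lower().split()
    cand_tokens = candidate.lower().split()
    # Multiset intersection counts each shared token at most as often as it
    # appears in both texts.
    overlap = sum((Counter(ref_tokens) & Counter(cand_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def pick_better_prompt(reference, outputs_by_prompt):
    """Score each prompt's output and return the best prompt name and all scores."""
    scores = {
        name: token_f1(reference, output)
        for name, output in outputs_by_prompt.items()
    }
    return max(scores, key=scores.get), scores


# Hypothetical ground truth and outputs produced by two prompt variants.
ground_truth = "refunds are issued within 14 days"
outputs = {
    "prompt_a": "refunds are issued within 14 days of purchase",
    "prompt_b": "we usually process refunds quickly",
}

best, scores = pick_better_prompt(ground_truth, outputs)
print(best, scores)
```

In practice, a comparison like this would be run over many prompts and reference answers and tracked over time, since a single example says little about how a prompt behaves in general.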

Once companies have gained insight into these and many more metrics, the goal is to watch them closely and bring them back in line with the corporate code of conduct using automated procedures and a constantly evolving set of best practices.
