A Bold Call from OpenAI, Safety Crusaders: Limit Compute

Turing awardee Yoshua Bengio and top universities join call for regulators to focus on hardware, not software, for AI safety

At a Glance

- OpenAI, Turing awardee Yoshua Bengio and top universities urge regulators to focus on governing AI hardware, not software.

- In their paper, they say software is easily duplicated and distributed. Hardware, however, can be physically possessed.

- Slowing down AI development through hardware curbs buys society time to prepare for widespread availability of advanced AI.

The wave of incoming AI regulations, such as the EU AI Act, largely focuses on software: models and the underlying AI systems. A cohort of AI experts, including researchers from OpenAI, argues, however, that the main focus should instead be on compute (hardware such as the chips found in data centers) to ensure AI safety.

In their paper, the authors argue that chips and data centers are more viable targets for scrutiny because they are assets that can be physically possessed, whereas data and algorithms can be duplicated and disseminated.

Focusing on hardware would effectively buy society time to prepare for the widespread availability of advanced AI systems.

The paper, 'Computing Power and the Governance of Artificial Intelligence,' was also co-authored by Turing awardee Yoshua Bengio and experts from the University of Cambridge, the Oxford Internet Institute and Harvard Kennedy School.

They cited the benefits of stronger government scrutiny of compute: increased regulatory visibility into AI capabilities and use; greater ability to steer funds and other resources toward safe and beneficial uses of AI; and enhanced enforcement of rules banning “reckless or malicious” development or use.

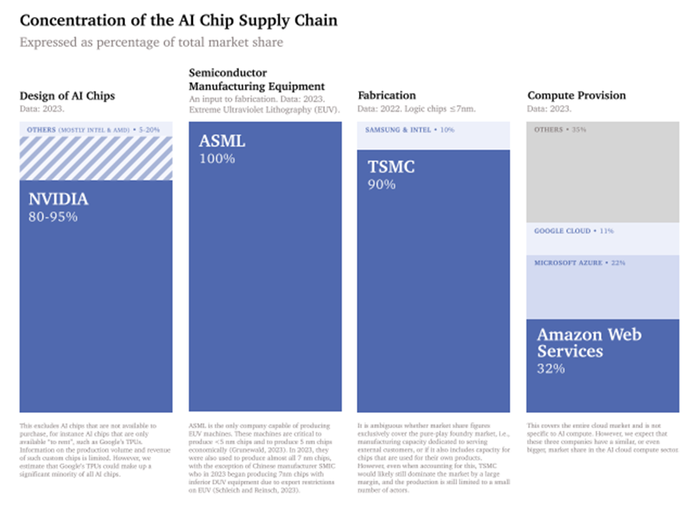

The authors explained that shifting the focus to hardware is more effective since “AI computing hardware is tangible and produced using an extremely concentrated supply chain.”

From the paper, 'Computing Power and the Governance of Artificial Intelligence'

Proposed restrictions

Among the proposals was the potential introduction of compute caps: built-in limits on the number of other chips each AI chip can connect to. The paper also floats 'start switches' for AI training, which would allow model builders to deny potentially risky AI systems access to data, akin to a digital veto.

Another proposal is a registry of AI chips, with chip producers, sellers and resellers required to report all transfers. The authors contend this would provide precise information on the amount of compute held by nations and corporations at any one time.

A further proposal would require cloud computing providers to report regularly on large-scale AI training. The authors also proposed more general workload monitoring to improve transparency.

The authors also drew inspiration from nuclear weapons safeguards, such as requiring consent from multiple parties before compute can be unlocked for potentially risky AI systems.

“Computing hardware is visible, quantifiable, and its physical nature means restrictions can be imposed in a way that might soon be nearly impossible with more virtual elements of AI,” Haydn Belfield, a co-lead author of the report from Cambridge’s Leverhulme Centre for the Future of Intelligence, said in a university blog post.

While government efforts, including the U.S. Executive Order on AI and China's Generative AI Regulation, all touch on compute in their approach to AI governance, the authors argue that more needs to be done.

Just as “international regulation of nuclear supplies focuses on a vital input that has to go through a lengthy, difficult and expensive process,” said Belfield, “a focus on compute would allow AI regulation to do the same.”
