A risk and security perspective on AI

We outline three potential threat scenarios and their implications

February 8, 2021

The rise of AI and its expansion into business-critical processes transform organizations – and create new risks.

What if attackers manipulate AI features or steal data?

While risks and mitigation measures are highly organization-specific, the threats are generic.

They apply to all organizations already using AI or planning to do so in the future.

While the AI domain is new to many risk and security specialists, the questions driving any assessment process are the typical ones (Figure 1):

Which threat actors have an interest in harming an organization, in this context by attacking its AI capabilities? What is their objective?

What physical or digital AI-related objects and concepts – the AI-related assets – might threat actors attack?

What are potential attack or threat scenarios?

Figure 1: Understanding AI-related threats – the big picture

The seven threat actors

Well-known threat actors are script kiddies, professional cybercriminals, and foreign intelligence services. They come to everybody’s mind when cyberattacks are in the news today. They also threaten any organization’s AI-related assets as well as its AI-driven business processes and control systems. They aim to steal intellectual property, extort ransom, or crash crucial servers.

These three threat actors are a heterogeneous group in terms of technical know-how, financial power, and objectives. The range of threat actors, however, is more extensive and varied. Activists, competitors, partners, and internal employees can be threat actors as well.

When organizations mistreat their employees, some turn against their employers and try to harm them. Furthermore, even well-meaning employees make mistakes due to negligence. They do not always follow all security protocols, e.g., because they consider them inconvenient, unclear, or irrelevant. Such behavior can result in vulnerabilities. Competitors are natural threat actors since they benefit from illegal activities such as stealing intellectual property or harming ongoing operations. Even partners can be threat actors if they have a hidden agenda. They might want to shift the balance of a contract to increase their profitability or even secretly plan to enter the market as competitors.

The last group of threat actors is the activists. They do not aim for financial benefits but fight against what is, in their eyes, injustice. They want to expose an organization as unprofessional or prove injustice based on the AI models, e.g., if the models discriminate against subgroups of society.

The 'Four plus Two' AI-related assets

Asset refers to software, hardware, and data – everything needed for information processing. Four asset types are essential for each organization using AI:

AI models. They help optimize business processes, oversee assembly lines, control chemical reactors, predict stock market prices, etc. A model such as a neural network consists of parameters and weights or of equations. These can be stored in parameter files or be an integral part of application code.

The production system is the software and hardware system on which the AI model runs. Such a system can be a Java application that contains a single AI model. Alternatively, the production system can be an AI runtime server such as RStudio Server, which executes hundreds of models for dozens of different applications.

Training data is essential for data scientists. They need the data to create and validate AI models.

The training environment is where data scientists create, optimize, and validate the AI models.

Two more assets are relevant because they feed or provide input to the training environment. The first is upstream systems and their data, as well as external data sources. This data is the basis for the actual training data. The second is pre-trained models or training sets downloaded from the internet, which are often the foundation for building organization-specific AI models. They help speed up the work of the data scientists.

Any AI-related threat or attack relates to one or more of these assets. A successful attack compromises one or more of the properties of the information security CIA triad: confidentiality, integrity, and availability.

Threat scenario “Confidentiality Breach”

Stealing intellectual property is the primary motive for threat actors trying to access confidential AI-related information. Competitors who obtain training data or the AI models themselves save much time and money. Waymo, a self-driving car company, prides itself on more than 20 million self-driven miles and more than 15 billion simulated miles. A competitor who gets this training data, or the AI model built with it, saves millions in investment and years of work. Likewise, a stolen underwriting model enables competitors to win lucrative deals in the insurance business while staying away from potentially problematic customers – even without any historical data for this market segment.

Traditionally, stealing files – at least from servers that are not internet-facing – required sophisticated attacks. Nevertheless, ruling such attacks out is foolish. With the advent of the cloud, companies train AI models in the public cloud and store their models and training data there. If IT security measures there are not on par with the on-premises world, attacks against weakly protected public cloud environments succeed with much less effort.

In general, however, unhappy or overburdened employees are the most significant risk. They can transfer any files – including models or training data – out of the company. Since models are often small, it is especially challenging to detect such activities.

Stealing a model, however, is also possible without access to it, just by probing. Suppose an attacker wants to mimic a “VIP identification” service that identifies prominent persons in images. The first step is to crawl the web to collect a large number of sample images (Figure 2). At this point, it is not clear which pictures show VIPs, so it is not possible to train an AI model with them. The second step is to submit these pictures to the “VIP identification” web service. This service returns which images contain which VIPs. With this additional information, the attacker has a labeled training set and can train a neural network that mimics the original one.

Figure 2: Example of an attack mimicking a neural network without having direct access to it: crawling the web for sample pictures (left), letting the targeted service annotate the images (middle), and training a new neural network that mimics the behavior of the original one (right).

A final remark about non-financially motivated threat actors: activists can be interested in the model as well. Their motivation would be to validate whether a model systematically discriminates against subgroups of society, e.g., by pricing them differently than mainstream customers.
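The probing attack described above can be sketched in a few lines. This is a deliberately tiny illustration, not a real attack tool: `victim_service` is a hypothetical stand-in for the remote “VIP identification” service, a single number stands in for an image, and a learned threshold stands in for the neural network the attacker would train. All names and values are assumptions made for the example.

```python
import random

def victim_service(x: float) -> int:
    """Hypothetical black-box service: the attacker sees answers, not the rule."""
    return 1 if x > 0.62 else 0  # hidden decision rule (assumed for the demo)

def extract_surrogate(query, samples):
    """Let the black box label the samples, then fit a one-dimensional threshold."""
    labeled = [(x, query(x)) for x in samples]
    positives = [x for x, label in labeled if label == 1]
    negatives = [x for x, label in labeled if label == 0]
    # Put the surrogate's threshold halfway between the two observed classes.
    threshold = (max(negatives) + min(positives)) / 2
    return lambda x: 1 if x > threshold else 0

rng = random.Random(0)
crawled = [rng.random() for _ in range(1000)]             # step 1: unlabeled samples
surrogate = extract_surrogate(victim_service, crawled)    # steps 2 and 3

# Measure how often the copy agrees with the original on fresh inputs.
holdout = [rng.random() for _ in range(1000)]
agreement = sum(surrogate(x) == victim_service(x) for x in holdout) / len(holdout)
print(f"surrogate agrees with the original on {agreement:.1%} of fresh inputs")
```

The point of the sketch is that the attacker never touches the model file. Every query to the service leaks a little of the model's behavior, which is why unusually high query volumes from a single client can be worth monitoring.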

Threat scenario “Integrity Violation”

AI-related integrity threats relate, first of all, to the model quality. When threat actors modify a model or interfere with its training, the model classifies images incorrectly or makes imprecise predictions. As a consequence, a factory might produce unusable parts. An insurance company might assume that 90% of their 20-year-old customers die within the next year and stop selling them life policies. These are extreme cases of faulty models, so organizations quickly notice that they have an issue.

In contrast, subtle manipulations are hard to find. How can the business detect a manipulated risk and pricing model that gives customers in southern Germany a price that is 7% too low while rejecting 10% of lucrative long-term customers in Austria? Such manipulations are not apparent, but they drive away loyal Austrian customers while generating direct financial losses in Germany due to the discounts.

An external threat actor manipulating a specific AI model sounds like too much work, at least if the company under attack is not at the forefront of technical progress. But even companies not in the spotlight of global cybercrime have to be aware of the threats of less-than-perfect AI models. Even well-meaning, highly professional data scientists make mistakes, resulting in mediocre or completely wrong models. IT security and risk functions have to examine the internal quality assurance procedure for AI closely. AI-related quality assurance often runs in a highly agile startup mode and is less rigorous than for critical software.
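One way to make such a regional pricing shift visible is to compare the deployed model's quotes with those of a trusted reference, for example the previously approved model version, segment by segment. The following is a minimal sketch under that assumption. The function name, the 3% tolerance, and the sample quotes are all hypothetical, and the check covers only the pricing part of the example, not the unjustified rejections.

```python
from collections import defaultdict

def regional_price_drift(quotes, tolerance=0.03):
    """Flag regions whose average deployed price deviates from the reference.

    quotes: iterable of (region, reference_price, deployed_price) tuples.
    Returns {region: mean relative deviation} for regions beyond the tolerance.
    """
    ratios = defaultdict(list)
    for region, reference, deployed in quotes:
        ratios[region].append(deployed / reference)
    drift = {region: sum(r) / len(r) - 1.0 for region, r in ratios.items()}
    return {region: d for region, d in drift.items() if abs(d) > tolerance}

# Hypothetical sample: the same customers priced by both model versions.
quotes = [
    ("southern_germany", 100.0, 93.0),
    ("southern_germany", 250.0, 232.5),
    ("northern_germany", 100.0, 100.5),
    ("austria", 180.0, 181.0),
]
flagged = regional_price_drift(quotes)
print(flagged)  # only southern_germany exceeds the tolerance
```

A check like this only helps if the reference itself is trustworthy and if someone decides in advance which segments to compare. A manipulation along a dimension nobody monitors stays invisible.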

The last remark already shifted the focus from manipulating the model to the overall creation and training process. Bad training data implies bad models, no matter what quality metrics may indicate. What would James Bond do if he did not want to be recognized by surveillance cameras? He would inject a few hundred pictures of himself into a training set, tag himself as a bird, and retrain the AI model. From then on, surveillance cameras ignore him because he is a bird and not a burglar.

Training data issues are severe and challenging to detect, regardless of whether inadequate data results from a mistake or an attack. Who looks through millions of pictures for wrongly tagged James Bond images? Who understands why a command during data preparation performs a left outer join and not a right outer join? Why are some values multiplied by 1.2 instead of 1.20001? And why does an upstream system stop sending negative values? Is it a mistake, sabotage, or improved data cleansing? Creating training data is highly complex, even without external threat actors.

All the threats and attacks discussed so far require access to training environments, production systems, the AI models, or the training data within an organization. Other threats do not require any such access. They succeed without touching any asset of the targeted organization. Data scientists want to move fast and incorporate the newest algorithms in this quickly evolving field. They download pre-trained models, AI and statistics libraries, and publicly available datasets from the internet. These downloads help to build better company-specific models. At the same time, the downloads are a backdoor for attackers, who can provide manipulated data or models, especially for niche models and training data.
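The James Bond scenario is a form of training data poisoning and can be illustrated with a toy classifier. The sketch below swaps in a k-nearest-neighbor vote for the image model, because for this technique “retraining” simply means adding samples. The two-dimensional feature vectors, labels, and sample values are hypothetical.

```python
from collections import Counter

def knn_predict(train, point, k=3):
    """Classify `point` by majority vote among its k nearest training samples."""
    def squared_distance(sample):
        features, _ = sample
        return sum((a - b) ** 2 for a, b in zip(features, point))
    nearest = sorted(train, key=squared_distance)[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Clean training set: two well-separated classes (hypothetical feature vectors).
clean = [
    ((1.0, 1.0), "person"), ((1.5, 0.8), "person"), ((0.7, 1.3), "person"),
    ((5.0, 5.0), "bird"), ((5.5, 4.6), "bird"), ((4.8, 5.3), "bird"),
]
bond = (1.2, 0.9)  # what the camera sees when Bond walks by

# Poison: a handful of samples that look like Bond but are tagged as birds.
poison = [((1.2, 0.9), "bird"), ((1.25, 0.95), "bird"), ((1.15, 0.85), "bird")]

before = knn_predict(clean, bond)
after = knn_predict(clean + poison, bond)
print(before, "->", after)  # person -> bird
```

Only three of nine samples are poisoned, and every other input is still classified as before. Aggregate quality metrics would therefore barely move, which is what makes this kind of manipulation hard to spot.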
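To see why the choice of join matters, consider a small sketch in which customer records are combined with claims records during data preparation. The table contents and column names are hypothetical. The right outer join is expressed by swapping the arguments of the left outer join.

```python
def left_outer_join(left, right, key):
    """Keep every row of `left`; attach matching `right` columns where present."""
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    joined = []
    for left_row in left:
        for match in index.get(left_row[key], [{}]):  # {} means "no match"
            joined.append({**left_row, **match})
    return joined

customers = [{"id": 1, "age": 34}, {"id": 2, "age": 51}, {"id": 3, "age": 29}]
claims = [{"id": 1, "claim": 1200}, {"id": 4, "claim": 300}]

left_rows = left_outer_join(customers, claims, "id")   # all customers survive
right_rows = left_outer_join(claims, customers, "id")  # all claims survive

print([row["id"] for row in left_rows])   # [1, 2, 3]
print([row["id"] for row in right_rows])  # [1, 4]
```

Both variants run without errors and produce plausible-looking tables, yet they describe different populations: one contains customers without claims, the other a claim without a known customer. A model trained on one is not the model trained on the other, and nothing in the output signals which was intended.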

Adversarial attacks are another attack type that does not require touching any asset. The idea is to manipulate input data so that the human eye does not detect the manipulation, yet the AI model produces the wrong result. Small changes in a picture can result in the AI model not detecting a traffic light. This attack typically targets AI models that are complex neural networks rather than simple linear functions. Neural networks learn from training data. If an attacker manipulates a picture so that it becomes too different from the training data, the model’s behavior becomes unpredictable. In an adversarial attack, the input looks familiar to the human eye but lies outside the “comfort zone” of the AI model.
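A minimal sketch of such an attack follows. It uses the fast gradient sign method, one well-known way to craft adversarial inputs and not something the article itself prescribes, against a tiny hand-written network with one hidden ReLU layer. The weights, the four-“pixel” input, and the step size are hypothetical values chosen so the effect is easy to follow.

```python
# Tiny fixed network: 4 inputs -> 2 hidden ReLU units -> 1 output score.
W1 = [[2.0, -2.0, 2.0, -2.0], [-1.0, 1.0, -1.0, 1.0]]
B1 = [0.0, 0.5]
W2 = [1.0, -1.0]
B2 = -0.3

def forward(x):
    """Return hidden pre-activations and the output score (logit)."""
    z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, B1)]
    hidden = [max(0.0, zi) for zi in z]
    logit = sum(w * h for w, h in zip(W2, hidden)) + B2
    return z, logit

def detects_traffic_light(x):
    return forward(x)[1] > 0.0

def input_gradient(x):
    """Gradient of the score with respect to each input (manual backprop)."""
    z, _ = forward(x)
    active = [j for j, zj in enumerate(z) if zj > 0.0]  # ReLU passes gradient
    return [sum(W2[j] * W1[j][i] for j in active) for i in range(len(x))]

def fgsm(x, eps):
    """Move every input by at most eps in the direction that lowers the score."""
    def sign(g):
        return (g > 0) - (g < 0)
    return [xi - eps * sign(g) for xi, g in zip(x, input_gradient(x))]

image = [0.6, 0.4, 0.6, 0.4]          # hypothetical pixel intensities
adversarial = fgsm(image, eps=0.05)   # each pixel changes by only 0.05

print(detects_traffic_light(image), detects_traffic_light(adversarial))
```

Note that this sketch computes the gradient from the weights, so it assumes the attacker knows the model. The perturbation is small per pixel, but because it is aligned with the model's sensitivities across all pixels at once, it is enough to flip the decision.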

Threat scenario “Non-Availability”

The last threat scenario covers the potential non-availability of AI logic in production or a training environment outage. In the latter case, data scientists cannot work. If production systems are down, this impacts business processes. Non-availability is the consequence of attacks against servers. Typical attacks crash the applications with malformed input or flood the server in a (distributed) denial-of-service attack.

An aspect with particular relevance for AI is the threat of losing intermediate results or models. In data science, processes tend to be more informal than in traditional software development regarding documentation and version management of artifacts. Such laxness can cause availability risks. When a key engineer is sick, on holiday, or has left the company, their colleagues might not be able to retrain a model. They have to develop a new one from scratch, which takes a while.

Understanding risks and mitigation

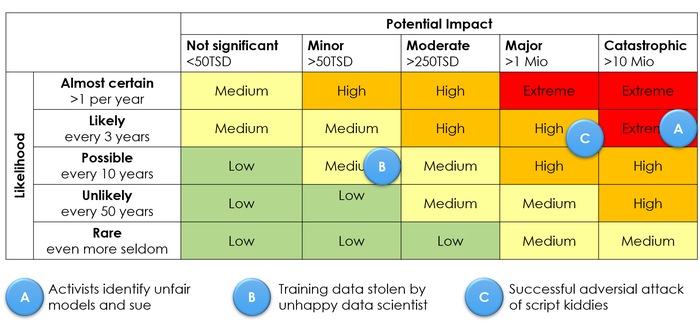

Threats and threat scenarios are generic, whereas risks are company-specific. Risk managers evaluate threats regarding their likelihood and the potential financial impact or other consequences. How much effort does it take, e.g., to steal an AI model, and who might be willing to invest in such an attack? What will be the impact on the business when competitors gain access to the system? A risk matrix such as the one in Figure 3 is a common visualization of risks.
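The logic behind a risk matrix can be sketched as a simple likelihood-times-impact score. The three-level scale, the thresholds, and the ratings assigned to the sample threats below are illustrative assumptions only. Every organization has to calibrate them for itself.

```python
LEVELS = {"low": 1, "medium": 2, "high": 3}

def risk_decision(likelihood, impact):
    """Combine likelihood and impact into a score and a suggested handling."""
    score = LEVELS[likelihood] * LEVELS[impact]
    if score >= 6:
        return score, "mitigate"
    if score >= 3:
        return score, "review"
    return score, "accept"

# Hypothetical ratings for three of the threats discussed in this article.
threats = {
    "model theft via probing": ("medium", "high"),
    "training data poisoning": ("low", "high"),
    "training environment outage": ("medium", "low"),
}
matrix = {name: risk_decision(*rating) for name, rating in threats.items()}

for name, (score, decision) in matrix.items():
    print(f"{name:30} score={score} -> {decision}")
```

The same threat can land in a different cell for a different company. That is the difference between a generic threat and a company-specific risk.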

Based on the risk assessment and a risk matrix, the management has to decide which risks to accept and which to mitigate. Mitigation means investing in organizational measures or technical improvements. Typical mitigation actions in the context of AI are:

Ensure that access control and perimeter security are in place for the training environment and production system. These measures reduce the risk of unwanted transfer of data or models to the outside and of unwanted manipulations. They are essential if AI functionality resides in a public cloud.

Enforce stringent quality processes and quality gates. Negligent data scientists are, in most cases, one of the greatest risks for poor models. Four-eyes checks and proper documentation of critical decisions reduce the risk of deploying inadequate models to production.

Ensure that external data, code libraries, and pre-trained models come from reliable sources. Experimentation with such downloads is essential for data scientists. Still, some kind of governance process is necessary when external downloads contribute to AI models deployed to production.
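One small technical building block for the last measure is to pin external downloads to a reviewed checksum, so that only files someone has actually vetted can enter the production pipeline. The sketch below uses Python's standard `hashlib`. The file name, the file content, and the idea of an in-memory allowlist are hypothetical simplifications.

```python
import hashlib
import os
import tempfile

def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def is_approved(path, approved_digests):
    """Accept a downloaded artifact only if its digest was reviewed before."""
    return sha256_of(path) in approved_digests

# Demo with a stand-in file instead of a real pre-trained model.
with tempfile.TemporaryDirectory() as workdir:
    model_path = os.path.join(workdir, "pretrained_model.bin")
    with open(model_path, "wb") as handle:
        handle.write(b"weights-as-reviewed")
    approved = {sha256_of(model_path)}          # recorded at review time

    ok_before = is_approved(model_path, approved)
    with open(model_path, "ab") as handle:      # simulate a tampered download
        handle.write(b"backdoor")
    ok_after = is_approved(model_path, approved)

print(ok_before, ok_after)  # True False
```

A checksum only proves that a file is the one that was reviewed. It says nothing about whether the reviewed file was trustworthy in the first place, so the governance process around the review remains the actual control.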

Figure 3: Sample Risk Matrix illustrating an organization’s AI risks

These three measures reduce the potential threat and attack surface of organizations. However, they do not make a proper risk assessment obsolete. A risk assessment takes a closer look at all threat scenarios and threats discussed above. We must not forget: AI is fun and AI brings innovation. At the same time, organizations have to manage their AI-related security risks. Our list of threat scenarios and threats presented above is a simple-to-understand structure for risk specialists. This list guides them on their mission to make their organization’s AI capabilities more resilient against cyber-threats – or can your organization afford to ignore them?

Klaus Haller is a Senior IT Project Manager with in-depth business analysis, solution architecture, and consulting know-how. His experience covers Data Management, Analytics & AI, Information Security and Compliance, and Test Management. He enjoys applying his analytical skills and technical creativity to deliver solutions for complex projects with high levels of uncertainty. Typically, he manages project teams of 5-10 engineers.

Since 2005, Klaus has worked in IT consulting and for IT service providers, often (but not exclusively) in the financial industry in Switzerland.

