Expert View: Preparing for the EU AI Act

An opinion piece by a Baker Donelson lawyer specializing in data privacy and security issues

On Dec. 8, EU policymakers reached an agreement on the Artificial Intelligence Act (AI Act). As a standard-bearer for global digital and data governance, the EU has been setting regulatory benchmarks on emerging issues ranging from data privacy to targeted advertising practices. After a marathon legislative process that began in April 2021, the EU AI Act will become the world's first comprehensive law regulating artificial intelligence.

Companies worldwide that use, develop, and distribute AI will soon feel the ‘Brussels Effect’ that raises AI governance standards across the global economy. While the formal text is still under final revision, senior EU officials anticipate that the historic AI Act will be adopted in April 2024, with certain requirements taking effect in 2026, two years following passage.

What is the EU AI Act?

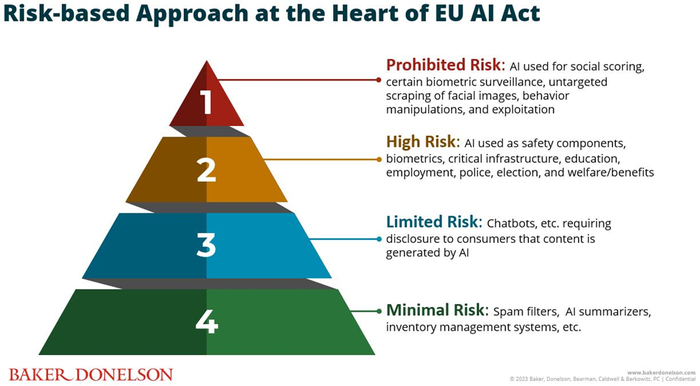

The EU's AI Act is a landmark legal framework first introduced by the European Commission in April 2021 (see the 2021 Commission version here). Its objectives are to ensure the safety, accountability and transparency of AI systems in the EU market. Taking a risk-based approach, the EU AI Act will oversee AI system developers, distributors, importers, and users based on AI systems' adverse impact on individuals and society: the greater an AI system's potential for harm, the stronger the oversight. The sense of déjà vu is palpable as the AI Act will shape the global AI legislative framework, just as EU privacy rules like the General Data Protection Regulation (GDPR) have done since 2016.

Who is impacted by the AI Act?

The AI Act provides specific definitions and responsibilities for different players in the AI ecosystem that develop, distribute, use, or supply AI systems for the EU market. In some cases, even if an entity is based outside the EU, the AI Act will still apply where "the output produced by the (AI) system is used in the (European) Union."

For example, if an entity based in South America develops an AI system that produces outputs used to assess EU residents for loan or job applications, the Act may apply. As a result, the long-arm reach of the AI Act will likely hold accountable all parties across the distribution chain. AI systems used for military or national security purposes will be exempt, while EU lawmakers have set strict conditions around the use of remote biometric identification (RBI) systems by law enforcement.

The draft AI Act adopted by the European Parliament in June 2023 (see the 2023 Parliament version here) has brought the following two key players into the limelight:

Provider, which refers to a developer that makes an AI system available on the market "under its own name or trademark whether for payment or free of charge." For example, OpenAI is a provider for making ChatGPT available to EU users.

Deployer, which refers to the party that uses the AI systems for professional purposes or in a business-to-business setting. This party was called the "User" in the original 2021 Commission version and was renamed "Deployer" in the 2023 Parliament version.

The draft 2023 AI Act places compliance obligations primarily on the AI Provider, much as the GDPR holds data controllers to greater accountability for adhering to data privacy principles. In reality, however, AI users (Deployers) will vastly outnumber those who develop and provide AI systems (Providers). In the case of high-risk AI systems described below, the AI Act requires the Deployer to play a critical role in mitigating the risks of AI use in the EU market.

How will the laws classify the risks of AI practices?

Taking a "risk-based approach," the AI Act seeks to regulate AI systems based on whether they could potentially jeopardize end-users' rights and safety under the following four risk categories:

1. Unacceptable AI: Recognizing the harms to EU democracy and human rights, the AI Act bans outright any use of AI in the EU market that would replicate a dystopian world of surveillance and control:

social credit systems;

biometric surveillance using sensitive personal data (subject to certain exceptions); and

other AI systems used for untargeted scraping of facial images, behavioral manipulation, and exploitation.

2. High-risk AI: The AI Act determines that certain AI systems pose material potential harm to critical infrastructure, employment, the environment, credit scoring, elections, border control, health and the rule of law. Developers of a ‘high-risk’ AI system must meet certain requirements, including passing a conformity assessment before the system's release to the public, registering it in an EU database, applying data governance (including validation, testing, and training), conducting a cybersecurity assessment, and affording users opportunities for appeal and redress.

3. Low-risk AI: AI systems posing only minor risks, such as chatbots, are subject to transparency responsibilities. These low-risk AI systems must disclose to consumers that content is generated by AI.

4. Minimal or no risk AI: Minimal-risk AI includes automated applications, such as AI-powered summarizers, e-discovery tools, and spam filters, among others. Most AI systems used in our daily operations fall under this category.
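For teams building an internal inventory of AI tools, the four tiers above can be sketched as a simple lookup. This is only an illustrative sketch, not a legal determination: the names `RiskTier`, `EXAMPLE_TIERS`, `OBLIGATIONS`, and `triage` are hypothetical, the example use cases are the ones named in this article, and the obligations are paraphrased from the descriptions above rather than from the final legal text.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LOW = "low"
    MINIMAL = "minimal"


# Example use cases taken from the article; a real inventory would need
# case-by-case legal review (assumption: exact-match labels only).
EXAMPLE_TIERS = {
    "social credit system": RiskTier.UNACCEPTABLE,
    "untargeted facial image scraping": RiskTier.UNACCEPTABLE,
    "credit scoring": RiskTier.HIGH,
    "employment screening": RiskTier.HIGH,
    "chatbot": RiskTier.LOW,
    "spam filter": RiskTier.MINIMAL,
    "e-discovery tool": RiskTier.MINIMAL,
}

# Obligations paraphrased from the tier descriptions in the article.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["banned from the EU market"],
    RiskTier.HIGH: [
        "conformity assessment before release",
        "registration in an EU database",
        "data governance (validation, testing, training)",
        "cybersecurity assessment",
        "appeal and redress for users",
    ],
    RiskTier.LOW: ["disclose that content is generated by AI"],
    RiskTier.MINIMAL: [],
}


def triage(use_case: str) -> Optional[tuple]:
    """Return (tier, obligations) for a known example, or None if unlisted."""
    tier = EXAMPLE_TIERS.get(use_case.strip().lower())
    if tier is None:
        return None  # unknown use case: flag for manual review
    return tier, OBLIGATIONS[tier]


if __name__ == "__main__":
    for name in ["Chatbot", "credit scoring", "inventory forecasting"]:
        print(name, "->", triage(name))
```

Returning `None` for unlisted use cases is a deliberate choice in this sketch: anything the lookup does not recognize should go to a human reviewer rather than default to a tier.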

What are the penalties?

Penalties for violating the AI Act have increased, ranging from €7.5 million ($8.25 million) or 1.5% of global annual revenues to €35 million ($38.5 million) or 7% of global annual revenues. While the final text is pending, the 2023 Parliament version included the following tier-based table for assessing fines:

Unacceptable AI: Up to 7% of global annual revenues, which increased from 6% in the 2021 Commission version.

High-risk AI: Up to €20 million or 4% of global annual revenues.

General purpose AI and foundation models (e.g., ChatGPT): Up to €15 million ($16.5 million) or 2% of global annual revenues, according to the 2023 Parliament version. The new version added provisions to address recent developments in generative AI. For more details, see our article on ‘How the EU's AI Act affects the use of ChatGPT.’

Supplying incorrect information: Up to €7.5 million ($8.25 million) or 1.5% of global revenues, which is a decrease from the initial proposal of €10 million or 2% of global revenues.
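To get a rough sense of exposure, the tiers above can be turned into a small calculation. The sketch below rests on two assumptions that the article does not state: first, that where a tier lists a fixed amount "or" a percentage, the higher of the two applies; second, that the €35 million figure quoted at the top of the overall range is the fixed amount for the unacceptable-AI tier. The names `FineTier`, `TIERS`, and `fine_ceiling_eur` are hypothetical, and the figures are those quoted in this article, not the final text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    label: str
    fixed_cap_eur: int      # fixed ceiling in euros
    revenue_share_bps: int  # share of global annual revenue, in basis points


# Figures as quoted in the article (final text still pending).
TIERS = {
    # Assumption: EUR 35M is the fixed amount paired with the 7% tier.
    "unacceptable": FineTier("Unacceptable AI", 35_000_000, 700),
    "high_risk": FineTier("High-risk AI", 20_000_000, 400),
    "general_purpose": FineTier(
        "General purpose AI and foundation models", 15_000_000, 200
    ),
    "incorrect_information": FineTier(
        "Supplying incorrect information", 7_500_000, 150
    ),
}


def fine_ceiling_eur(tier_key: str, global_annual_revenue_eur: int) -> float:
    """Upper bound of the fine for a tier.

    Assumption: the higher of the fixed amount and the revenue share applies.
    """
    tier = TIERS[tier_key]
    revenue_based = global_annual_revenue_eur * tier.revenue_share_bps / 10_000
    return max(tier.fixed_cap_eur, revenue_based)


if __name__ == "__main__":
    for revenue in (100_000_000, 1_000_000_000):
        for key in TIERS:
            print(f"{key:>22} @ EUR {revenue:,}: {fine_ceiling_eur(key, revenue):,.0f}")
```

Under these assumptions, the revenue-based figure overtakes the fixed amount for the high-risk tier once global annual revenue exceeds €500 million, so the fixed amount matters mainly for smaller companies.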

How should your business prepare for the AI Act?

At its core, effective AI governance for corporations requires a comprehensive approach, including internal controls and supply-chain management. AI developers and users of high-risk AI in financial services, employment, critical infrastructure, medical devices, health care and others need to conduct a close assessment of their existing operations and ask themselves the following questions:

Which departments are using AI tools?

Are the AI tools processing proprietary information or sensitive personal data?

Do these use cases fall under the unacceptable, high, or low-to-no-risk category under the AI Act?

Is the company an AI Provider that develops the AI tool with potential use in the EU market?

Is the company an AI Deployer that uses AI tools with the potential to produce outputs used in the EU market?

What do our vendor agreements with the Providers of these AI tools say about data protection, use restrictions, and compliance obligations?

The EU's agreement on the AI Act could have a domino effect on global legislative efforts to tackle AI risks. Together with the recent release of the Biden administration's AI Executive Order in October, the new deal on the EU AI Act signals a paradigm shift in how businesses can leverage AI.
