Google CEO: Gemini’s Racial Gaffe Is ‘Completely Unacceptable’

Google also explains exactly what went wrong with Gemini

At a Glance

- Google’s CEO told staff that Gemini’s racially inaccurate images of people were ‘completely unacceptable.’

- Gemini was tuned to show a range of people but failed to account for cases where it should not. It also became overly cautious.

- Gemini's image generation was built atop Imagen 2. After the gaffe, Google paused image generation of people.

Google recently renamed its AI chatbot, Bard, to Gemini to capitalize on the buzz around its most advanced AI model of the same name. But the conversational AI stumbled badly out of the gate, to the displeasure of the company's CEO.

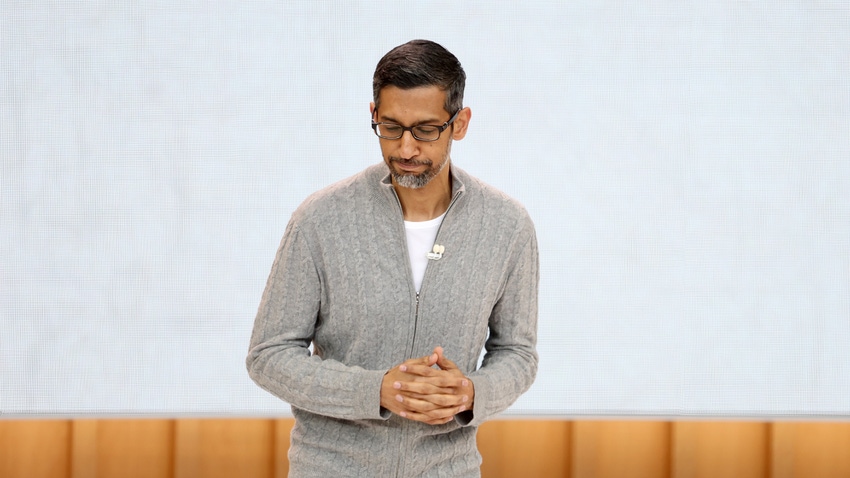

In an internal memo obtained by Semafor, Google CEO Sundar Pichai said that some of Gemini’s generated images depicting historically white figures as people of color "have offended our users and shown bias - to be clear, that's completely unacceptable and we got it wrong."

The gaffe went viral on social media after Gemini generated images such as America’s Founding Fathers depicted as Latino or Black. The model also produced questionable text responses to user queries, such as likening Elon Musk’s influence to that of Adolf Hitler.

Google has paused Gemini's generation of images of people until further notice.

To make matters worse, Gemini's drubbing on social media came just as people were heaping praise on rival OpenAI's new video generation model, Sora.

What happened?

In a blog post, Google senior vice president Prabhakar Raghavan said Gemini's image generation feature was built on Imagen 2, Google's image generation model. When Google built the feature, it wanted the results to be inclusive, since the product would be used around the world. Conversely, if a prompt specified an ethnicity, such as a white vet with a dog, the image generation would work as intended.

So what went wrong? Raghavan said the "tuning to ensure that Gemini showed a range of people failed to account for cases that should clearly not show a range." In addition, over time the model became "way more cautious than we intended and refused to answer certain prompts entirely - wrongly interpreting some very anodyne prompts as sensitive."

Raghavan added that Gemini is a "creativity and productivity tool" and, as such, can hallucinate.

Pichai said the mistakes are being fixed.

“Our teams have been working around the clock to address these issues,” Pichai wrote. “We’re already seeing a substantial improvement on a wide range of prompts. No AI is perfect, especially at this emerging stage of the industry’s development, but we know the bar is high for us and we will keep at it for however long it takes. And we’ll review what happened and make sure we fix it at scale.”

Some internet users accused Google and its Gemini model of bias, with a small minority attempting to frame the incident as evidence of the "Great Replacement" conspiracy theory, which claims that white people are being deliberately replaced by non-white people.

Pichai’s memo acknowledged that the responses offended users and showed bias, but the Google CEO said the company would learn from its mistakes.

“We’ll be driving a clear set of actions, including structural changes, updated product guidelines, improved launch processes, robust evals and red-teaming, and technical recommendations. We are looking across all of this and will make the necessary changes.”

Pichai urged Google employees to "learn from what went wrong" and to build on the products the company has announced in recent weeks. These include foundational advances in its underlying models, such as a one-million-token context window "breakthrough," and its Gemma open models, which have been "well received."

Pichai is the second high-profile Google executive to speak out this week about Gemini’s generation mishaps. Earlier, Google DeepMind CEO Demis Hassabis said that Google had good intentions in creating a product for a broad user base, but ultimately applied its efforts “too broadly.”
