MIT Develops 'Masks' to Protect Images From Manipulation by AI

PhotoGuard creates invisible masks that distort AI-generated images

At a Glance

- MIT has created ‘masks’ invisible to the eye that can distort edited images, preventing manipulation by AI.

- The researchers said the technique goes further than watermarking, which does not stop an image from being manipulated in the first place.

A team of researchers at MIT has developed a new technique called PhotoGuard that aims to prevent the unauthorized manipulation of images by AI systems.

Generative AI image models like Midjourney and Stable Diffusion can be used to edit existing images – either by adding new areas via inpainting, or by cutting out objects and remixing them into other images, such as taking a person’s face and overlaying it on another picture.

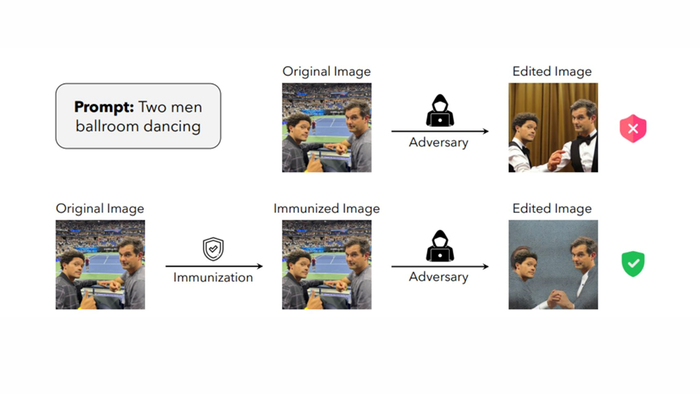

The MIT scientists created what is essentially a protective mask capable of preventing these models from manipulating images. The masks are invisible to the human eye and, when a protected image is fed to a generative AI image model, cause the output to appear distorted.

“By immunizing the original image before the adversary can access it, we disrupt their ability to successfully perform such edits,” the researchers wrote in a paper.
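The article does not spell out how the mask is computed, so the following is only a toy sketch of the general idea: nudge each pixel by a tiny, bounded amount so that a model's internal representation of the image shifts. It assumes a made-up four-pixel "image" and a fixed linear stand-in for the model's encoder. All names and values here (`WEIGHTS`, `encode`, `immunize`, `eps`, `step`, `iters`) are hypothetical and are not taken from the PhotoGuard code.

```python
# Toy sketch of a bounded, sign-gradient perturbation against a stand-in encoder.
# Assumption: a real system would target a diffusion model's encoder; this
# uses a tiny fixed linear map so the example runs with the standard library.

# Hypothetical "encoder": maps 4 pixel values to 2 latent features.
WEIGHTS = [
    [0.5, -0.2, 0.1, 0.3],
    [-0.4, 0.6, 0.2, -0.1],
]


def encode(pixels):
    """Return the latent features of the stand-in linear encoder."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in WEIGHTS]


def loss(pixels, target):
    """Squared distance between the encoding and a target latent."""
    return sum((z - t) ** 2 for z, t in zip(encode(pixels), target))


def grad(pixels, target):
    """Gradient of loss() with respect to each pixel: 2 * W^T (W x - t)."""
    residual = [z - t for z, t in zip(encode(pixels), target)]
    return [
        2 * sum(WEIGHTS[k][i] * residual[k] for k in range(len(WEIGHTS)))
        for i in range(len(pixels))
    ]


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def immunize(pixels, target, eps=0.03, step=0.01, iters=20):
    """Shift the encoding toward target while changing no pixel by more than eps."""
    protected = list(pixels)
    for _ in range(iters):
        g = grad(protected, target)
        for i, gi in enumerate(g):
            moved = protected[i] - step * sign(gi)
            # Keep the change within +/- eps of the original pixel.
            moved = max(pixels[i] - eps, min(pixels[i] + eps, moved))
            # Keep the pixel in the valid [0, 1] range.
            protected[i] = max(0.0, min(1.0, moved))
    return protected


image = [0.2, 0.8, 0.5, 0.4]   # hypothetical pixel intensities in [0, 1]
target = [0.0, 0.0]            # hypothetical target latent
protected = immunize(image, target)
print("largest pixel change:", max(abs(a - b) for a, b in zip(protected, image)))
print("loss before:", loss(image, target), "after:", loss(protected, target))
```

The `eps` bound is what keeps the change small enough to go unnoticed, while the repeated sign-gradient steps are what move the encoder's view of the image. In this toy setup the two goals are traded off by a single number; a real image and model would need far more careful tuning.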

PhotoGuard can be accessed via GitHub under an MIT license – meaning it can be used commercially but requires preservation of copyright and license notices.

Credit: MIT

The purpose of PhotoGuard is to counter deepfakes by preventing manipulation rather than only detecting it afterward. The team behind it argues that while watermarking approaches do work, they do not protect images from “being manipulated in the first place.”

PhotoGuard is designed to complement watermark protections by working to "disrupt the inner workings" of AI diffusion models.

With the use of AI image models like DALL-E and Stable Diffusion becoming more commonplace, there also appear to be increasing instances of misuse, especially on social media. The case of Ron DeSantis' election team using AI-doctored images of former President Trump embracing Dr. Fauci shows early signs of the issues that could arise.

The need to detect AI-generated works is increasing. While AI-generated images are easy for a trained eye to spot, some research teams are working to make detection easier. Take DALL-E and ChatGPT maker OpenAI, which this week pledged to continue researching ways to determine whether a piece of audio or visual content is AI-generated, though the pledge came after it shut down its text detection tool due to poor performance.
