IBM's New AI Chip that Mimics Human Brain Outperforms GPUs

IBM's new AI chip NorthPole keeps all of its memory on the chip, making it more energy efficient and lowering latency compared with other chips

At a Glance

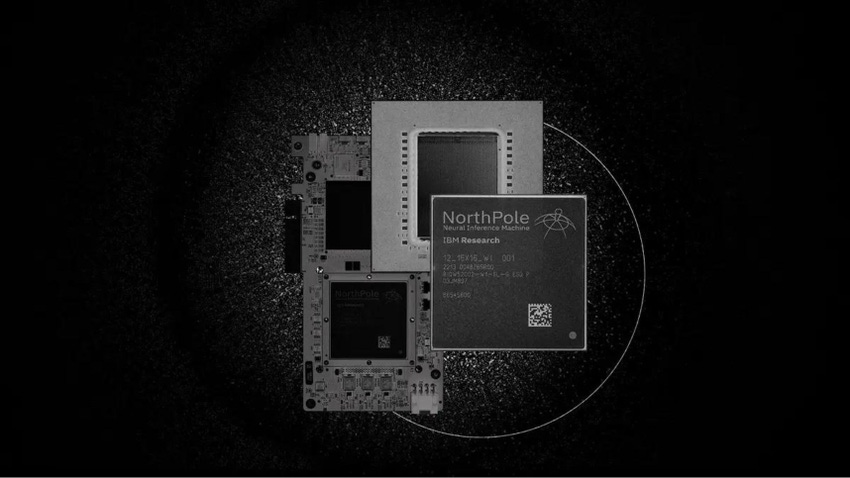

- IBM has designed NorthPole, a new AI chip patterned after the human brain.

- It is 25 times more energy efficient and has 22 times lower latency than a comparable 12-nm GPU on the ResNet50 benchmark.

- But it cannot access external memory, so it supports larger neural networks by breaking them down into smaller sub-networks.

IBM is turning to the human brain for inspiration in designing its AI hardware, unveiling a new chip that it said has better latency and is more energy efficient than existing GPUs.

The tech giant’s 12-nm NorthPole chip boasts a new neural network hardware architecture optimized for neural inference tasks such as image classification and object detection.

IBM said the chip is 25 times more energy efficient and has 22 times lower latency than a comparable GPU on the ResNet50 benchmark. Its performance is documented in a paper just published in Science.

NorthPole has 22 billion transistors and extensive on-chip memory, meaning it can store data and run computation entirely on the chip without accessing external memory, further improving speed and efficiency.

“One of the biggest differences with NorthPole is that all of the memory for the device is on the chip itself, rather than connected separately,” according to an IBM blog post. The human brain is similarly self-contained.

Because all of the memory sits on the chip, NorthPole does not have to continuously shuttle data back and forth between separate memory and processing components. That constant shuffling, known as the von Neumann bottleneck, is a major source of latency and energy use in conventional computer architectures.

“It’s an entire network on a chip,” said Dharmendra Modha, IBM’s chief scientist for brain-inspired computing who developed the chip along with his team. He said NorthPole outperforms even chips made with more advanced processes, such as 4-nm GPUs.

IBM is also looking to iterate on NorthPole, for example by experimenting with cutting-edge 2-nm process nodes. The current state-of-the-art process node is 3 nm.

The strength is a weakness, too

However, NorthPole cannot access external memory. Instead, it supports larger neural networks by “breaking them down into smaller sub-networks” that fit within its memory and connecting those sub-networks together across several NorthPole chips, a technique IBM calls “scale-out.”
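To make the scale-out idea concrete, here is a minimal sketch of one way a network could be split into sub-networks that each fit a fixed on-chip memory budget. IBM has not described its partitioning method in this article, so the greedy layer-packing strategy, the `Layer` and `partition_layers` names, the layer sizes, and the 100 MB budget are all hypothetical, and only weight memory is counted.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    weight_bytes: int  # memory needed to hold this layer's weights on-chip


def partition_layers(layers, chip_memory_bytes):
    """Greedily pack consecutive layers onto chips (hypothetical strategy).

    A new chip is opened whenever the next layer would exceed the
    per-chip memory budget. Each returned group is one sub-network;
    group i's output would feed group i + 1 on the next chip.
    """
    chips, current, used = [], [], 0
    for layer in layers:
        if layer.weight_bytes > chip_memory_bytes:
            raise ValueError(f"{layer.name} does not fit on a single chip")
        if used + layer.weight_bytes > chip_memory_bytes:
            chips.append(current)  # this chip is full; start the next one
            current, used = [], 0
        current.append(layer)
        used += layer.weight_bytes
    if current:
        chips.append(current)
    return chips


if __name__ == "__main__":
    # Hypothetical layer sizes and a hypothetical 100 MB per-chip budget.
    model = [
        Layer("block1", 40_000_000),
        Layer("block2", 50_000_000),
        Layer("block3", 30_000_000),
        Layer("block4", 60_000_000),
    ]
    plan = partition_layers(model, chip_memory_bytes=100_000_000)
    for i, group in enumerate(plan):
        print(f"chip {i}: {[layer.name for layer in group]}")
```

With these made-up numbers the four blocks land on two chips, with block1 and block2 on the first and block3 and block4 on the second. Keeping layers in order means each chip only hands its output activations to the next one, which is the pipeline-style chaining the article describes.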

“We can’t run GPT-4 on this, but we could serve many of the models enterprises need,” Modha said. He added that the chip is “only for inferencing.”

On the other hand, NorthPole could be “well-suited” for edge applications that need to process massive amounts of data in real time, like those in autonomous vehicles.

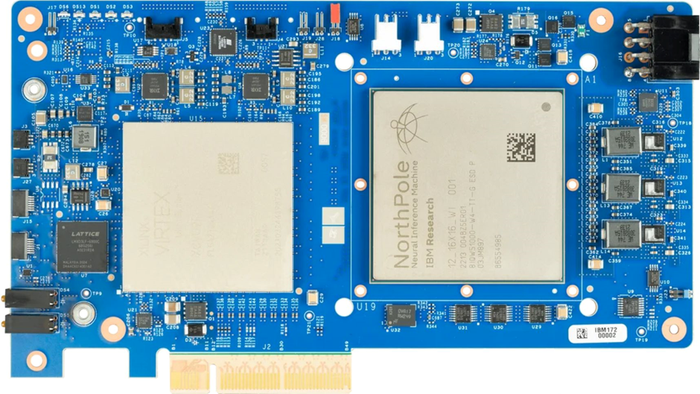

The NorthPole chip on a PCIe card. Credit: IBM

In designing the chip, Modha and his team drew inspiration from the human brain. NorthPole’s networks-on-chip (NoCs) interconnect its cores, unifying and distributing computation and memory, which IBM’s researchers liken to the brain’s long-distance white-matter and short-distance gray-matter pathways.

There is also a dense local interconnect that helps neural activations flow locally between nearby cores, akin to the brain’s local connectivity between neurons in nearby cortical regions.
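As a rough illustration of why local flow matters, the sketch below counts hops on a two-dimensional mesh of cores, a common network-on-chip topology. The 16 x 16 grid, the dimension-ordered (XY) routing, and the use of hop count as a proxy for latency and energy are assumptions for illustration; the article does not describe NorthPole’s routing in this detail.

```python
from itertools import product

GRID = 16  # hypothetical: a 16 x 16 array of cores


def mesh_hops(src, dst):
    """Hops between two cores on a 2D mesh with dimension-ordered (XY) routing."""
    (r1, c1), (r2, c2) = src, dst
    return abs(r1 - r2) + abs(c1 - c2)


def average_hops(grid=GRID):
    """Mean hop count over all ordered (src, dst) core pairs, including src == dst."""
    cores = list(product(range(grid), repeat=2))
    total = sum(mesh_hops(a, b) for a in cores for b in cores)
    return total / len(cores) ** 2


if __name__ == "__main__":
    print("neighbor:", mesh_hops((5, 5), (5, 6)))
    print("corner to corner:", mesh_hops((0, 0), (GRID - 1, GRID - 1)))
    print("average over all pairs:", average_hops())
```

On this assumed grid, a neighboring core is 1 hop away, the opposite corner is 30 hops away, and a randomly chosen pair averages 10.625 hops. Keeping most activation traffic between neighbors therefore avoids the long trips that dominate when data is scattered across the chip.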

IBM also tried to mimic the low precision of the brain’s synapses, opting for 2- to 4-bit precision rather than the 8- to 16-bit precision used by traditional GPUs. This choice drastically reduces memory and power requirements.
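The memory savings from lower precision come down to simple arithmetic. Here is a minimal sketch assuming symmetric, per-tensor uniform quantization, which is one common scheme. The article does not say how NorthPole quantizes its weights, so the scheme and the function names are illustrative only. The ResNet-50 parameter count used here (roughly 25.6 million) is a widely cited figure, not one taken from IBM.

```python
def quantize_symmetric(weights, bits):
    """Uniform symmetric quantization with one scale for the whole tensor.

    Floats map to signed integers in [-qmax, qmax], where qmax = 2**(bits-1) - 1.
    With bits=2 that is {-1, 0, 1}; with bits=4 it is -7..7.
    """
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Approximate reconstruction of the original floats."""
    return [v * scale for v in q]


def weight_megabytes(num_params, bits):
    """Storage for the weights alone (no activations or metadata), in MB."""
    return num_params * bits / 8 / 1e6


if __name__ == "__main__":
    weights = [0.7, -0.35, 0.1, -0.7]
    q, scale = quantize_symmetric(weights, bits=4)
    print("4-bit codes:", q, "scale:", scale)
    print("reconstructed:", dequantize(q, scale))

    # ResNet-50 has roughly 25.6 million parameters.
    for bits in (16, 8, 4, 2):
        print(f"{bits:>2}-bit weights: {weight_megabytes(25_600_000, bits):.1f} MB")
```

With that parameter count, the weights alone shrink from about 51.2 MB at 16 bits to 12.8 MB at 4 bits and 6.4 MB at 2 bits. A 4x to 8x reduction like this is what makes it practical to keep an entire model in on-chip memory, at the cost of a rounding error of up to half the quantization step per weight.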

What’s next for NorthPole

It is still early days for NorthPole, and IBM plans to do more research. But the tech giant is already exploring possible applications for the chip.

During testing, NorthPole was applied mostly to computer vision use cases, since funding for the project came from the U.S. Department of Defense. These use cases included object detection, image segmentation and video classification.

But the chip was also trialed elsewhere, including for natural language processing and speech recognition.

The hardware team behind it is currently exploring how to map decoder-only large language models onto NorthPole scale-out systems.
