Nvidia Unveils Blackwell GPUs to Power Massive AI Models

Nvidia launched its next-generation GPUs, capable of running massive AI models at up to 25x lower cost, at GTC 2024

At a Glance

- Nvidia has unveiled its next-gen Blackwell GPUs, designed to power trillion-parameter generative AI models more efficiently.

- Blackwell GPUs will also power Nvidia’s next-generation DGX SuperPod, which delivers 11.5 exaflops of AI supercomputing.

Nvidia is gearing up to power a new industrial revolution.

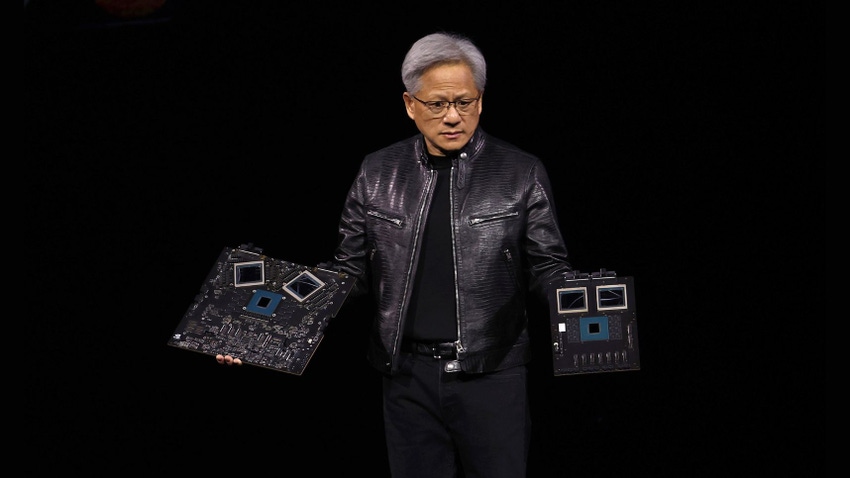

At Nvidia GTC 2024, founder and CEO Jensen Huang used his keynote to demonstrate next-generation generative AI capabilities across the many industries the trillion-dollar company is set to power.

To run those workloads, Nvidia is turning to new GPUs. Chief among its GTC announcements was Blackwell, the successor to the Hopper platform.

The Blackwell GPU is designed to enable organizations to build and run real-time generative AI models that are trillions of parameters in size at up to 25x less cost and energy consumption than its predecessor.

According to Nvidia, Blackwell-based systems offer up to a 30x performance increase for large language model inference compared with the same number of H100 GPUs, and the platform can support double the compute and model sizes.

“Generative AI is the defining technology of our time,” Huang said. “Blackwell is the engine to power this new industrial revolution. Working with the most dynamic companies in the world, we will realize the promise of AI for every industry.”

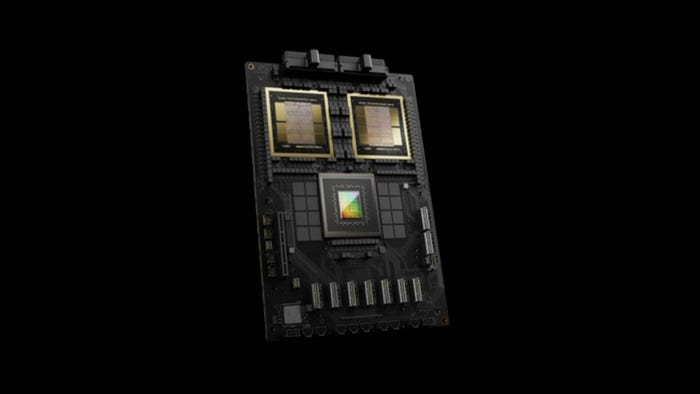

The new Blackwell Superchip platform | Credit: Nvidia

Nvidia announced major AI players are set to use Blackwell, including AWS, Google, Meta, Microsoft and OpenAI, as well as Tesla and xAI.

OpenAI CEO Sam Altman, who has been a vocal critic of hardware limitations in AI, said the new Blackwell platform offers “massive performance leaps and will accelerate our ability to deliver leading-edge models.”

Blackwell is named in honor of David Harold Blackwell, a mathematician who specialized in game theory and statistics, and the first Black scholar inducted into the National Academy of Sciences.

The new GPUs pack 208 billion transistors and are manufactured on a custom-built TSMC 4NP process, with two reticle-limit dies connected by a 10 TB/second chip-to-chip link into a single, unified GPU.

They feature four-bit floating point (FP4) AI inference capabilities and a dedicated decompression engine for accelerating database queries.

As with Hopper, Blackwell will be available as a ‘Superchip’: the GB200 combines two Blackwell GPUs and a Grace CPU connected by a 900 GB/second chip-to-chip link.

Nvidia said that Blackwell-based products will be available starting later this year.

AWS, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be among the first cloud service providers to offer Blackwell-powered instances.

The GB200 will also be available through Nvidia’s DGX Cloud platform, which gives enterprise developers access to the infrastructure and software needed to build and deploy generative AI models.

Meanwhile, Dell, HPE, Lenovo and Supermicro are expected to deliver a range of server products based on Blackwell, and software firms such as Cadence and Synopsys will use Blackwell-based processors to accelerate their offerings, Nvidia announced.

Blackwell boosts supercomputers

The new Blackwell-powered Nvidia SuperPod | Credit: Nvidia

Blackwell Superchips are also set to power Nvidia’s next-generation AI supercomputer infrastructure.

The Blackwell-powered DGX SuperPod features a new liquid-cooled, rack-scale architecture and delivers 11.5 exaflops of AI supercomputing at FP4 precision.

Each DGX GB200 system features 36 GB200 Superchips, comprising 36 Nvidia Grace CPUs and 72 Blackwell GPUs, connected as one supercomputer.

The platform is designed to let customers process trillion-parameter AI models with constant uptime for superscale generative AI training and inference workloads.

Huang said: “DGX AI supercomputers are the factories of the AI industrial revolution.”

Microservices for building AI applications

Alongside the Blackwell GPUs, Nvidia also announced generative AI microservices for its AI Enterprise platform.

Version 5.0 of AI Enterprise now includes downloadable software containers for deploying generative AI applications.

Among the available microservices are NIM, which optimizes inference for popular AI models, and the CUDA-X collection, which contains tools for building applications.

“Established enterprise platforms are sitting on a goldmine of data that can be transformed into generative AI copilots,” said Huang. “Created with our partner ecosystem, these containerized AI microservices are the building blocks for enterprises in every industry to become AI companies.”

Partnerships

Nvidia regularly announces several partnerships at GTC, and this year was no different.

It is expanding its collaboration with Oracle on sovereign AI solutions that enable governments and enterprises to deploy AI factories: data centers that power several applications at once.

The company unveiled an agreement with Google to expand the DGX Cloud service on Google Cloud, where the H100-powered DGX Cloud platform is now generally available.

Nvidia also announced new integrations with Microsoft that will see Azure adopt Blackwell Superchips for AI workloads. The companies also revealed a native integration between DGX Cloud and Microsoft Fabric to streamline custom AI model development.

Microsoft’s generative AI productivity tool, Copilot, will soon be powered by Nvidia GPUs, the two companies jointly announced.

AWS will offer Amazon EC2 instances based on Nvidia Blackwell GPUs to accelerate building and running inference on large language models.

Nvidia is also working with SAP, which will use the new NIM microservices to deploy AI applications.
