Perfect AI model, broken app: Integration patterns and testing needs

It is a long way from having a working machine learning model on a local laptop to having a full-fledged fashion store with a mobile app incorporating this model

July 24, 2020

Shopping apps make our life easier and more fun. They suggest products to customers that perfectly match their shopping cart items and shopping history: a pink luxury handbag for the lady with an elegant pink dress? Click! A blue shirt and black socks for a gentleman buying a dark grey suit? Click!

Machine learning and predictive analytics algorithms run in the background.

They are used widely to increase sales, detect fraud, or improve the maintenance life cycle for complex machines. But how does the AI interact with the rest of the application – and what are the implications for software testing and quality assurance?

From prototyping to integration

It is a long way from having a working machine learning (ML) model on a local laptop to having a full-fledged fashion store with a mobile app incorporating this model so that shoppers buy like crazy. As a first step, data scientists want to understand the data, experiment with how best to prepare it, and create their first models. This is typically done in a lab environment.

The machine learning model, however, is only one (important) component of the overall solution. In our example, it triggers which fashion items are shown to the customer in the app. But fashion apps or online stores have many non-machine learning-related features: product presentation, search for items, a shopping cart, and payment handling. Even if the machine learning model works perfectly, it is worthless if not integrated into the overall solution. For this integration, there are three main patterns: precalculate, reimplement, and runtime environment.

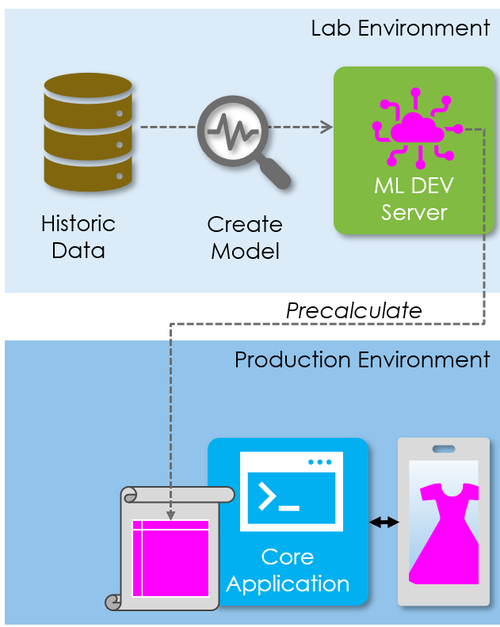

The “precalculate” integration pattern

“Quick and easy” characterizes the precalculate pattern (Figure 1). It allows using machine learning insights in applications without technical integration. A data scientist creates the model in the lab environment and also applies it to the actual data there. The deliverable is not the machine learning model, but a list with directly usable results. In our fashion store example, the list contains the individual fashion items each customer should be targeted with when opening the app.

Figure 1: Integration pattern "precalculate"

This pattern requires minimal engineering work on the application side, just an interface for uploading a list. There is no need for complex real-time interfaces, coordinating release dates, opening ports, setting up data feeds, or installing new servers. These are often time-consuming tasks in larger organizations. They delay projects, incur costs, and ruin business cases.
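The following is a minimal sketch of what the precalculate pattern can look like on the lab side. The rule-based `recommend` function is a hypothetical stand-in for a real model, and the CSV layout and all names are assumptions made for illustration only.

```python
import csv
import io

# Hypothetical stand-in for the model built in the lab environment:
# it maps a customer's purchase history to recommended items.
RULES = {
    "pink dress": ["pink handbag"],
    "grey suit": ["blue shirt", "black socks"],
}

def recommend(history):
    items = []
    for purchased in history:
        items.extend(RULES.get(purchased, []))
    return items

def precalculate(customers):
    """Apply the model to every customer and return the result list as CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["customer_id", "recommended_items"])
    for customer_id, history in sorted(customers.items()):
        writer.writerow([customer_id, ";".join(recommend(history))])
    return buffer.getvalue()

customers = {"c1": ["pink dress"], "c2": ["grey suit"]}
print(precalculate(customers))
```

The application only needs to import this list. It never sees the model itself, which is why the results are only as fresh as the last run.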

The drawbacks of the precalculate pattern relate to manual work. Engineers and data scientists have to collaborate and must be available to update the data. Also, humans make mistakes. Generating a list with the wrong data or uploading the wrong list has a massive impact on the business. Targeting customers with ski boots in the middle of summer, for example, hurts profits.

Then, there is the information security and data privacy aspect. Engineers potentially get access to a large amount of sensitive data.

Finally, the precalculated data might be outdated. If a customer bought a pink dress two hours ago, the fashion shop might continue for days and weeks to bombard her with ads for pink dresses until the lists are recalculated and uploaded again. How frequently this happens depends on the use case and business context. If an online store sells wedding dresses, there is no need to update customer data quickly. Customers most likely do not buy a second or third dress in a week. However, if you are in the fast-fashion business, having near real-time data is a competitive advantage.

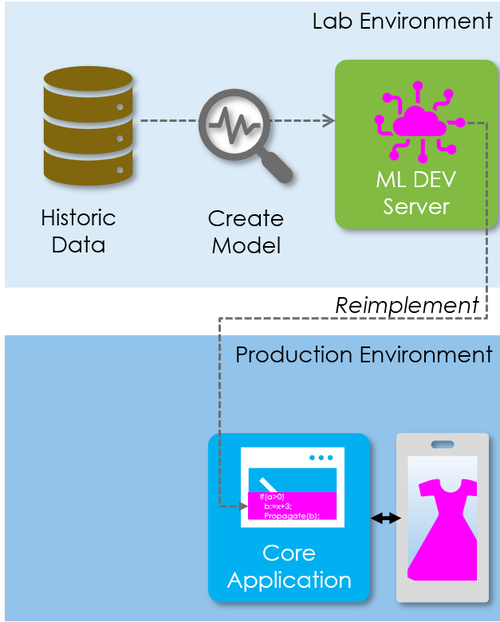

The “reimplement” pattern

The reimplement pattern is one option to work with newer data. Again, the data scientist creates a machine learning model in the lab environment and hands it over to the software engineering team as a kind of specification. The engineers implement (recode) the model in the fashion app or the backend server. For this, they use the programming language of their choice, e.g. Java, C#, or Python (Figure 2). This is an elegant solution for models depending only on a few data items. The architectural complexity of the fashion app and the backend does not increase much.

Figure 2: Integration pattern "reimplement"
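As a rough illustration of the reimplement pattern, assume the lab model is a logistic regression whose coefficients are handed over as the specification. The coefficient values, feature names, and function names below are hypothetical.

```python
import math

# Hypothetical specification handed over by the data scientist:
# coefficients of a logistic regression fitted in the lab.
SPEC = {"intercept": -1.0, "bought_dress": 2.0, "basket_value_chf": 0.01}

def handbag_purchase_probability(bought_dress: bool, basket_value_chf: float) -> float:
    """Recoded model: plain application code, no ML library needed at run time."""
    z = (
        SPEC["intercept"]
        + SPEC["bought_dress"] * (1.0 if bought_dress else 0.0)
        + SPEC["basket_value_chf"] * basket_value_chf
    )
    return 1.0 / (1.0 + math.exp(-z))

def should_show_handbag(bought_dress: bool, basket_value_chf: float,
                        threshold: float = 0.5) -> bool:
    """Decision used by the app: show the handbag if the probability is high enough."""
    return handbag_purchase_probability(bought_dress, basket_value_chf) >= threshold
```

Because the model is evaluated inside the application, it always sees the customer's current data. The price is that every model update means changing and retesting application code.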

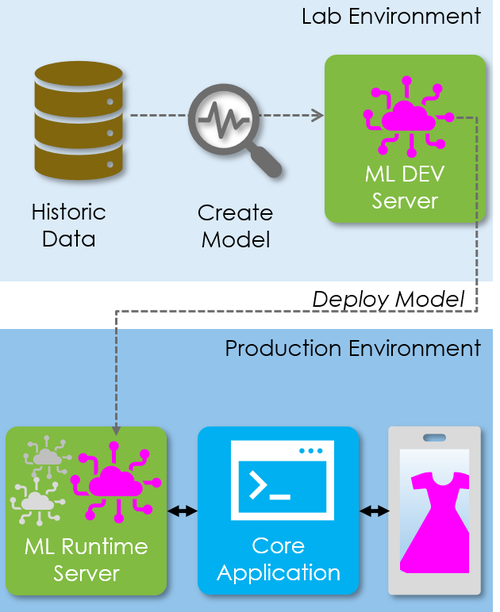

The runtime environment pattern

The third integration pattern is “runtime environment”. Java made the concept of runtime environments widely known. Engineers write code in a development environment and, when ready, compile it to Java bytecode. The bytecode runs on all servers and computers with a Java runtime environment (JRE).

The runtime environment integration pattern follows this model. The data scientist creates a model in the lab environment. Then, this model is deployed to a production server on which the appropriate analytics software is installed. Products such as RStudio Server or SAS belong to this category. In the fashion app example, the app communicates with the application on the backend server, which invokes the runtime environment to apply the machine learning model to the provided data (Figure 3). This architectural pattern is helpful for organizations with many machine learning models, especially if several models can share the same data sources at run time.

Figure 3: Integration pattern "runtime environment"
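The division of responsibilities in this pattern can be sketched in a few lines. The `ModelRuntime` class below is a hypothetical in-process stand-in for a real analytics server, and the model name `fashion-recommender` is an assumption. In production, the call would typically cross a network interface.

```python
from typing import Callable, Dict, List

class ModelRuntime:
    """Hypothetical stand-in for the server component hosting all deployed models."""

    def __init__(self) -> None:
        self._models: Dict[str, Callable[[dict], List[str]]] = {}

    def deploy(self, name: str, model: Callable[[dict], List[str]]) -> None:
        # The data scientist deploys a model without touching application code.
        self._models[name] = model

    def predict(self, name: str, data: dict) -> List[str]:
        if name not in self._models:
            raise KeyError(f"no model deployed under {name!r}")
        return self._models[name](data)

def backend_recommendations(runtime: ModelRuntime, customer: dict) -> List[str]:
    """Backend code: gathers the data and delegates scoring to the runtime."""
    return runtime.predict("fashion-recommender", {"cart": customer["cart"]})

runtime = ModelRuntime()
runtime.deploy(
    "fashion-recommender",
    lambda data: ["pink handbag"] if "pink dress" in data["cart"] else [],
)
print(backend_recommendations(runtime, {"cart": ["pink dress"]}))
```

The backend only knows the model by name. Replacing the model is a deployment to the runtime, not a change to the application.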

Continuously self-improving machine learning

As the table below summarizes, all three integration patterns rely on a machine learning model that is created at one point in time and used afterward. Thus, the training data is not “real-time” data, but a little bit older. Newer and additional data is only considered when the machine learning model is retrained and updated. Two patterns apply (!) the model to the newest data (reimplement and runtime environment), one does not (precalculate).

Many managers, especially on the business side, are disappointed when they learn that machine learning models do not learn continuously by automatically incorporating newly available data. They were expecting a continuously self-improving machine learning approach. In most cases, this is neither necessary nor in their best interest.

First, a machine learning model only needs to be updated when the world changes. House pricing models, for example, do not need to be updated on an hourly basis. Thus, there is no need to invest in automating seldom-executed activities.

Secondly, automating the model training and building self-improving machine learning systems is technically feasible, but might have unintended side effects. A self-learning algorithm requires an optimization goal, which can turn out to be too narrow. If, for example, the goal is to increase the margin, the fashion shop might try to sell all customers only extravagant ties and super luxury handbags. This can increase profits in the short term. But at some point, customers who want to buy shoes, dresses, and shirts will stop using the app because it always presents ties and handbags. Fully automated optimization processes do not detect such trends. Humans, in contrast, do. Thus, fully automated and self-improving learning models are not recommended in most cases and, consequently, this article does not go into the details.

| Integration Pattern | Precalculate | Reimplement | Runtime Environment | Self-Improving |
| --- | --- | --- | --- | --- |
| Model Currentness | Model creation time | Model creation time | Model creation time | Not in scope |
| Data Currentness | Precalculation time | (Near) real-time | (Near) real-time | Not in scope |

Quality assurance and testing

I know from my experience as a test manager that a horde of testers and high testing effort do not guarantee that managers know the software quality afterward. Testing systematically is the crucial challenge. The more complex a software solution, the more important it is to test the right things, and the more important integration and end-to-end tests become. This also applies to software solutions that incorporate artificial intelligence, for example in the form of machine learning models.

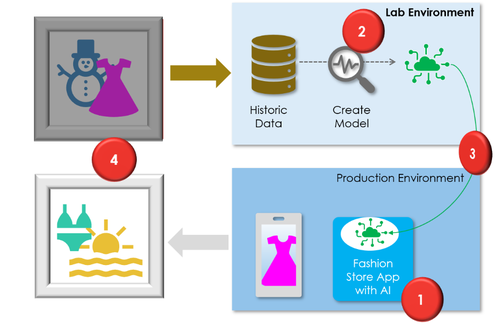

Three areas are important for test strategies and test concepts (Figure 4):

Testing the software solution excluding the machine learning model and functionality

Validating the quality of the machine learning model

Verifying and testing the integration of the machine learning model and the rest of the software solution

AI experts might not be familiar with how to test the software solution itself (Figure 4, ①), but it is a common activity for testers. They define test cases based on the software specification and perform them manually or implement test automation scripts. They perform load and performance tests or check whether the software crashes. They test whether there are issues with interfaces to other applications. For the fashion app, typical test cases cover the presentation of fashion items, the shopping cart functionality, and the payment process.

Figure 4: Areas for quality assurance for software incorporating machine learning models

The second quality assurance topic is validating the machine learning model (Figure 4, ②). It is unfamiliar to most testers and a standard task for data scientists. This validation is important because machine learning models are crucial to the overall success of an application. The fashion app can present maybe ten or twenty fashion items to potential customers. Either customers quickly see trendy fashion items matching their taste, or they are gone. The financial success depends on how well the model performs and, thus, validating the models is a core challenge.
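Since the app can only show a handful of items, one metric that fits this setting is the share of the first k recommended items that a customer actually bought. The sketch below is one illustrative choice, not a metric prescribed by this article, and the function name is an assumption.

```python
def precision_at_k(recommended, purchased, k):
    """Share of the first k recommended items that the customer actually bought."""
    top_k = recommended[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for item in top_k if item in purchased)
    return hits / len(top_k)

# Two items are shown; the customer bought one of them.
print(precision_at_k(["tie", "handbag", "shirt"], {"handbag", "socks"}, k=2))
```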

The good news is that there are various quality metrics for machine learning (see [Hal20] for details), and data scientists work with them on a daily basis when creating new models. There are open quality assurance aspects, but they relate more to the organizational context: methodological and procedural errors and governance. Governance refers to who decides when to switch to a new model and how to deal with situations in which the model is not optimal. Does the data scientist simply deploy a new model as soon as one is available? Or should the business approve it based on a detailed comparison of the behavior of the old and the new model?

Methodological and procedural errors relate to the actual tasks data scientists perform. They might make an error in a calculation or choose the wrong features. This can have severe consequences, e.g. the fashion app could try to sell pink handbags to teenage boys due to some confusion and mistakes during the creation of the machine learning model. Should an organization assume its data scientists never make procedural or methodological errors? If not, who reviews the model creation process or the result? Such mistakes can result in models with perfect metrics that are useless and ruin the organization.

The third quality risk can be summarized as follows: perfect machine learning model, perfectly tested software features, but the application and end-to-end processes do not work. This relates to issues with the integration of the machine learning model (Figure 4, ③). The exact test needs depend on the chosen pattern. The precalculate pattern does not involve a technical integration between the machine learning model and the actual application. Preparing the precalculated data and uploading it into the application is a manual process. Checklists or the four-eyes principle reduce the risk of mistakes.
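Parts of such a checklist can be automated. The following sketch runs a few plausibility checks on a precalculated list before it is uploaded. The row format, the catalog structure, and the specific checks are assumptions chosen for illustration.

```python
def check_list(rows, known_customers, catalog, current_season):
    """Return a list of readable problems; an empty list means OK to upload.

    rows: (customer_id, item) pairs from the precalculated list.
    catalog: maps each item to the set of seasons in which it may be promoted.
    """
    problems = []
    if not rows:
        problems.append("list is empty")
    for customer_id, item in rows:
        if customer_id not in known_customers:
            problems.append(f"unknown customer {customer_id}")
        if item not in catalog:
            problems.append(f"unknown item {item}")
        elif current_season not in catalog[item]:
            problems.append(f"{item} is out of season for {customer_id}")
    return problems

catalog = {"ski boots": {"winter"}, "swimsuit": {"summer"}}
rows = [("c1", "swimsuit"), ("c2", "ski boots"), ("c9", "swimsuit")]
for problem in check_list(rows, {"c1", "c2"}, catalog, "summer"):
    print(problem)
```

Checks like these do not replace a second pair of eyes, but they catch the ski-boots-in-summer kind of mistake before it reaches customers.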

The reimplement pattern requires taking the model from the lab environment and implementing it in the solution. The lab model becomes the specification. Testers use the lab model and check whether the actual implementation has the intended behavior. Ideally, testers and data scientists work out the test cases together. The test cases also need to be verified and reworked after the machine learning model is updated, at least if the update is more than just changing some weights of a neural network.
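A parity test is one way to use the lab model as the specification: both implementations receive the same test cases, and every deviation is reported. In the sketch below, all three model functions are hypothetical stand-ins. `buggy_model` deliberately omits the intercept to show what a detected recoding error looks like.

```python
import math

def lab_model(case):
    """Stand-in for the lab model that serves as the specification."""
    z = -1.0 + 2.0 * case["bought_dress"] + 0.01 * case["basket_value_chf"]
    return 1.0 / (1.0 + math.exp(-z))

def app_model(case):
    """Stand-in for the recoded model in the application (same formula, written differently)."""
    z = 2.0 * case["bought_dress"] + 0.01 * case["basket_value_chf"] - 1.0
    return math.exp(z) / (1.0 + math.exp(z))

def buggy_model(case):
    """Recoded model with a typical mistake: the intercept was forgotten."""
    z = 2.0 * case["bought_dress"] + 0.01 * case["basket_value_chf"]
    return 1.0 / (1.0 + math.exp(-z))

def find_mismatches(reference, candidate, cases, tolerance=1e-9):
    """Return all test cases on which the candidate deviates from the reference."""
    return [c for c in cases if abs(reference(c) - candidate(c)) > tolerance]

cases = [
    {"bought_dress": 0, "basket_value_chf": 0.0},
    {"bought_dress": 1, "basket_value_chf": 50.0},
    {"bought_dress": 1, "basket_value_chf": 300.0},
]
print(len(find_mismatches(lab_model, app_model, cases)))
print(len(find_mismatches(lab_model, buggy_model, cases)))
```

A small tolerance is used because two correct implementations can still differ in the last floating-point digits.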

The runtime environment pattern encapsulates all AI-related technology and configurations of an organization in one server component. Its setup has to be verified after the initial installation. Later, when models are added, e.g. for a fashion store app, end-to-end tests of the processes are needed to ensure that all interfaces are up and running and work as expected. Model management is another source of error. Testers or application managers have to ensure that the intended machine learning model with the right parameters is connected to a specific application.
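One possible way to check that the intended model with the right parameters is connected is to compare a fingerprint of the deployed parameters against the approved one. The data structures, names, and the choice of a SHA-256 hash over a JSON serialization are assumptions for this sketch, not a feature of any particular runtime product.

```python
import hashlib
import json

def fingerprint(parameters: dict) -> str:
    """Stable hash over the model parameters (keys sorted for determinism)."""
    canonical = json.dumps(parameters, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def binding_problems(approved: dict, deployed: dict) -> list:
    """Compare approved and actual model bindings per application.

    approved: application -> (model name, fingerprint that was signed off)
    deployed: application -> (model name, parameters currently connected)
    """
    problems = []
    for app, (model_name, approved_fp) in approved.items():
        if app not in deployed:
            problems.append(f"{app}: no model connected")
            continue
        actual_name, actual_params = deployed[app]
        if actual_name != model_name:
            problems.append(f"{app}: expected {model_name}, found {actual_name}")
        elif fingerprint(actual_params) != approved_fp:
            problems.append(f"{app}: parameters of {model_name} differ from approved version")
    return problems
```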

Model degeneration

Companies test before the “go live.” They want to avoid problems in production afterward. Normally, once software works, it works. This is different for machine learning models and components. A machine learning model condenses the experience of hundreds, thousands, or millions of data points. The model “works” and is useful if the experience from the past helps to understand the present and the future. If the reality today differs from the one reflected in the training data set, the model is not useful. When the model learns before Christmas that affluent women buy long pink dresses, this insight is no longer relevant next June when everybody prepares for a beach holiday. This process of machine learning models making worse predictions over time is called model degeneration.

Model degeneration poses a different quality assurance challenge than the three areas of testing mentioned above. It can only be detected by monitoring the model and its variables after the go-live (Figure 4, ④). There are three indicators that the model might be outdated:

Input values change (mean, distribution, etc.). Shopping carts contain 70% swimwear instead of 80% winter clothes. Only 5% instead of previously 25% of the customers have golden credit cards. These are sample indicators that the world changes, and, thus, recreating the machine learning model might make sense.

Significant changes in the output variables (mean, distribution, etc.). Previously, the model proposed around 1000 different shopping items with an average price of CHF 150. This month, the model suggests only 20 different products with an average price of CHF 15. When the output changes drastically, this is also an indication that the world changed.

Declining forecast quality. In the past, 47% of shoppers bought a proposed fashion item. The rate now drops to 20%. When this happens, it is obvious that the model is not working as well as in the past. Such metrics are especially helpful since the business understands them directly.
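The three indicator types can be monitored with very little code. The sketch below compares current figures against a baseline recorded at go-live and reports every figure that moved by more than a threshold. The metric names and the 25% threshold are assumptions. The sample values are taken from the examples above, plus one hypothetical stable figure.

```python
def relative_change(baseline: float, current: float) -> float:
    """Absolute change relative to the baseline value (baseline must be non-zero)."""
    return abs(current - baseline) / abs(baseline)

def degeneration_alerts(baseline: dict, current: dict, max_change: float = 0.25) -> list:
    """Names of all monitored figures that moved by more than max_change."""
    return sorted(
        name
        for name, old_value in baseline.items()
        if relative_change(old_value, current[name]) > max_change
    )

baseline = {
    "input.gold_card_share": 0.25,
    "input.avg_items_per_cart": 3.0,   # hypothetical stable figure
    "output.avg_proposed_price_chf": 150.0,
    "quality.conversion_rate": 0.47,
}
current = {
    "input.gold_card_share": 0.05,
    "input.avg_items_per_cart": 3.1,
    "output.avg_proposed_price_chf": 15.0,
    "quality.conversion_rate": 0.20,
}
print(degeneration_alerts(baseline, current))
```

A fixed relative threshold is the simplest option. Figures with strong seasonal swings may need a comparison against the same period of the previous year instead.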

These three indicator types have in common that they can be implemented and monitored without much effort. A more complex alternative is to recalculate the machine learning model periodically with the then-current data and compare it with the deployed model in use. If there are (relevant) deviations, the previous model is replaced with the newer machine learning model. However, how often organizations recreate models is a financial question. Key cost drivers are the effort needed for generating and deploying models and for automating some or all of the tasks.
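The comparison of a retrained model with the deployed one can be sketched as follows. Both models are applied to the same recent inputs, and the retrained model is selected only if the share of differing outputs exceeds a threshold. The two toy models, the function names, and the 10% threshold are hypothetical.

```python
def deviation_rate(deployed, retrained, recent_inputs):
    """Share of recent inputs on which the two models give different outputs."""
    if not recent_inputs:
        raise ValueError("need at least one input to compare the models")
    differing = sum(1 for x in recent_inputs if deployed(x) != retrained(x))
    return differing / len(recent_inputs)

def select_model(deployed, retrained, recent_inputs, max_deviation=0.10):
    """Keep the deployed model unless the retrained one deviates relevantly."""
    if deviation_rate(deployed, retrained, recent_inputs) > max_deviation:
        return retrained
    return deployed

def deployed_model(cart):
    # Trained before Christmas (toy example).
    return "winter coat" if "scarf" in cart else "tie"

def retrained_model(cart):
    # Retrained with summer data (toy example).
    return "sunglasses" if "swimsuit" in cart else "tie"

recent_carts = [["swimsuit"], ["scarf"], ["shirt"], ["swimsuit", "shirt"]]
print(deviation_rate(deployed_model, retrained_model, recent_carts))
```

In practice, the switch would usually go through the governance process discussed earlier rather than happen automatically.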

The conclusion

Quality assurance for applications with machine learning components does not require new techniques and methodologies. The challenge is that know-how has to come from two distinct communities – AI and software testing. This article brings the learnings from the two communities together, with an emphasis on integration patterns.

Testing and quality assurance differ depending on whether there is only manual integration (“precalculate”), whether the machine learning model is reimplemented in the application code, or whether all models in the company are deployed to a dedicated runtime environment. Taking these learnings into consideration, organizations and IT departments can structure their testing and quality assurance processes. This helps them avoid creating a great machine learning model while the overall application, in the end, is broken.

Klaus Haller is a Senior IT Project Manager with in-depth business analysis, solution architecture, and consulting know-how. His experience covers Data Management, Analytics & AI, Information Security and Compliance, and Test Management. He enjoys applying his analytical skills and technical creativity to deliver solutions for complex projects with high levels of uncertainty. Typically, he manages projects consisting of 5-10 engineers.

Since 2005, Klaus has worked in IT consulting and for IT service providers, often (but not exclusively) in the financial industry in Switzerland.
