Three recommendations to avoid experimental pitfalls and produce valid, accurate results

Coveo experts outline the three best practices that should be in place to improve industry-led consumer research

July 12, 2021

Sponsored Content

We’re A/B testing everything now, which opens new, exciting avenues for research on consumer behavior.

But companies don’t always go about consumer research in the best way.

Digital giants such as Google, Facebook, and Amazon have grown used to listening to their customers through rigorous data collection and A/B testing.

For the vast majority of smaller industry players, though, the picture looks quite different. Even well-established companies that understand the importance of relying on data rather than the Highest Paid Person's Opinion (HiPPO) are breezing past some of the best practices that academia requires to ensure verifiable, unbiased results.

A mature approach to experimentation requires not only the right infrastructure but also compliance with a set of scientific best practices. After all, many findings in behavioral science have been questioned for their alleged lack of generalizability, and failed replications are often attributed to scientific malpractice, such as p-hacking (running many analyses until one crosses the significance threshold), or to a limited understanding of statistical principles.

This piece looks at the best practices that should be in place to improve industry-led consumer research. While A/B testing can give us great insights, we need to make sure tests are run responsibly and accurately.

1. Ensure you have the right infrastructure in place

The right infrastructure for experimentation combines several elements, including reliable instrumentation (to record events such as clicks, add-to-carts, and conversions) and data pipelines that deliver this data to data scientists.

Successful experimentation involves many tasks and skills, so it helps to have a team with extensive knowledge of the scientific literature to generate meaningful hypotheses. Strong statistical skills are also crucial to interpret results correctly, whether the analysis is descriptive or inferential.

Industry-specific domain knowledge is also key. It helps detect potential problems with the sample under consideration in light of users’ behavior (e.g., you may have a different population of users on weekends than on weekdays), identify the most relevant KPIs for an experiment (e.g., Clickthrough Rate, Conversion Rate, Average Order Value), and carry out guardrail monitoring to ensure that ongoing experiments aren’t harming the most important metrics.
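To make the KPIs and the guardrail idea concrete, here is a minimal Python sketch. It assumes per-arm counts have already been aggregated from instrumentation; the `VariantStats` class, its field names, the example counts, and the 5% guardrail threshold are hypothetical choices for illustration, not part of any particular platform.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Aggregated counts for one experiment arm (hypothetical schema)."""
    sessions: int
    clicks: int
    orders: int
    revenue: float

    @property
    def ctr(self) -> float:
        # Clickthrough Rate: clicks per session
        return self.clicks / self.sessions

    @property
    def conversion_rate(self) -> float:
        # Conversion Rate: orders per session
        return self.orders / self.sessions

    @property
    def aov(self) -> float:
        # Average Order Value: revenue per order (0 if there are no orders)
        return self.revenue / self.orders if self.orders else 0.0


def guardrail_breached(control: VariantStats, treatment: VariantStats,
                       max_relative_drop: float = 0.05) -> bool:
    """Return True if the treatment's conversion rate is more than
    `max_relative_drop` below control's (illustrative threshold)."""
    relative_drop = ((control.conversion_rate - treatment.conversion_rate)
                     / control.conversion_rate)
    return relative_drop > max_relative_drop


# Hypothetical counts: the treatment lifts CTR but lowers conversion rate
control = VariantStats(sessions=10_000, clicks=2_000, orders=300, revenue=15_000.0)
treatment = VariantStats(sessions=10_000, clicks=2_300, orders=270, revenue=14_000.0)
print(f"CTR: {control.ctr:.3f} vs {treatment.ctr:.3f}")
print("Guardrail breached:", guardrail_breached(control, treatment))
```

In this made-up example, a team watching only CTR would see a win, while the guardrail flags a 10% relative drop in conversion rate. A production guardrail would also account for statistical noise rather than compare point estimates alone, as this sketch does.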

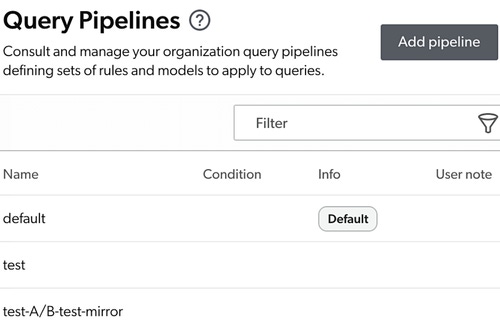

Beyond a versatile team with key knowledge areas, you also need your infrastructure to be scalable, so that you can run many experiments simultaneously, and flexible, because different experiments need different configurations.
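One common way to meet both requirements is deterministic, hash-based assignment: each experiment carries its own configuration (variants and traffic weights), and hashing the user ID together with the experiment ID gives every user a stable arm within each experiment while keeping concurrent experiments roughly independent of one another. The sketch below assumes this design; `assign_variant`, the `EXPERIMENTS` table, and the experiment names are hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variant(user_id: str, experiment_id: str,
                   variants: Sequence[str], weights: Sequence[float]) -> str:
    """Deterministically map a user to one variant of one experiment."""
    if len(variants) != len(weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must match variants and sum to 1")
    # Salting with experiment_id decorrelates assignments across experiments
    digest = hashlib.sha256(f"{experiment_id}:{user_id}".encode()).hexdigest()
    # First 52 bits of the hash: exactly representable as a float in [0, 1)
    bucket = int(digest[:13], 16) / 16**13
    cumulative = 0.0
    for variant, weight in zip(variants, weights):
        cumulative += weight
        if bucket < cumulative:
            return variant
    return variants[-1]  # covers floating-point rounding in the weight sum


# Hypothetical per-experiment configurations running side by side
EXPERIMENTS = {
    "search-ranking-v2": (["control", "treatment"], [0.5, 0.5]),
    "checkout-banner": (["A", "B", "C"], [0.5, 0.25, 0.25]),
}

for exp_id, (variants, weights) in EXPERIMENTS.items():
    print(exp_id, assign_variant("user-42", exp_id, variants, weights))
```

Because assignment depends only on the IDs and the configuration, it can be recomputed exactly at analysis time, with no per-user state to store or lose.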

The right infrastructure should also include an easy way to replicate your experiments. In recent years, a substantial share of previously published experiments in psychology and behavioral science could not be replicated. This ‘Reproducibility Crisis’ is common knowledge in the scientific community, but most businesses haven’t even begun to recognize the problem.

To mitigate these types of failures, collaborate with academic partners and follow best practices: no HARKing (Hypothesizing After the Results are Known), no p-hacking, and no misreading of what a p-value means.
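As a reminder of what a p-value does and does not say, here is a minimal two-sided two-proportion z-test for comparing conversion rates between two arms. The choice of test, the example counts, and the function name are assumptions for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value). The p-value is the probability of seeing a
    difference at least this extreme IF there were no true difference.
    It is NOT the probability that the treatment works, nor the
    probability that the null hypothesis is true.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # common rate assumed under the null
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 3.0% vs 3.6% conversion on 10,000 users per arm
z, p = two_proportion_z_test(conv_a=300, n_a=10_000, conv_b=360, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value also says nothing on its own about whether the lift is large enough to matter for the business; that judgment requires looking at the effect size.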

2. Partner with academia for best practices

A look at the lineups of presenters at elite AI and ecommerce conferences last year shows that papers were written either by academics or by industry practitioners. With a few notable exceptions, mixed teams of authors from industry and academia aren’t common practice yet.

Yet this kind of collaboration is critical. The experimental research practices of the overwhelming majority of industry players lag a few years behind those of academia. Collaborations between universities and industry are win-wins, as the parties can share data and relevant domain expertise and provide theoretical input.

Industry can give academics larger samples, realistic environments, and domain expertise. Academia can provide the latest and best statistical and experimental practices.

For instance, one consumer research best practice that industry should borrow from academia is ethics review by an institutional review board (IRB) or a research ethics committee. This matters as companies consider more complex A/B tests, such as personalized pricing, which can reduce total consumer welfare if not approached ethically.

An industry-academia partnership will arguably raise industry experimentation practices beyond statistical principles alone, with more attention also paid to the key ethical aspects of experimentation.

3. Don’t prioritize ease-of-use over rigor

The experimentation platforms currently available may not make it easy to comply with best practices. They have typically prioritized ease-of-use over rigor in A/B testing, which limits the scope and analysis of experiments and can even permit scientific malpractice. This can hurt businesses, which may end up basing their decisions on inaccurate conclusions.

This means that companies that wish to embrace an experimentation culture shouldn’t blindly rely on these platforms. Instead, they should do their own due diligence at every step, from building a hypothesis to estimating the duration of the experiment and interpreting the results.
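Estimating duration usually starts from a power calculation. The sketch below uses the standard normal-approximation sample-size formula for a two-sided two-proportion test, then converts users into days for a given traffic level and rounds up to whole weeks so that weekday and weekend users are both represented. The baseline rate, minimum detectable effect, traffic figure, and function names are hypothetical inputs, not recommendations.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(baseline: float, mde: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each arm to detect an absolute lift of `mde` over
    `baseline` with a two-sided two-proportion z-test (normal approximation)."""
    p1, p2 = baseline, baseline + mde
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / mde ** 2)


def duration_days(n_per_arm: int, arms: int, daily_users: int) -> int:
    """Days of traffic needed for all arms, rounded up to whole weeks."""
    days = ceil(n_per_arm * arms / daily_users)
    return ceil(days / 7) * 7


# Hypothetical inputs: 3% baseline conversion, +0.6 points to detect, 2,000 users/day
n = sample_size_per_arm(baseline=0.03, mde=0.006)
print(n, "users per arm,", duration_days(n, arms=2, daily_users=2_000), "days")
```

Fixing the sample size in advance is also what gives a stopping rule its meaning: the test ends when the planned sample is reached, not when the results happen to look good.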

For example, they should formulate clear and precise experimental hypotheses before starting the experiment. While experimentation tools can expose a lot of data and KPIs, it’s critical not to fall for “cherry-picking” or “HARKing.” Don’t report only the metrics that support your hypothesis, and don’t adjust your hypothesis after looking at the data so that it matches the experiment’s results.
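To see why cherry-picking is dangerous, consider an A/A test (no real difference between arms) that tracks several metrics, each tested at the 5% level. The chance that at least one of them looks “significant” grows quickly with the number of metrics. The sketch below computes this analytically, checks it by simulation, and shows the Bonferroni correction, one simple and conservative remedy that the original text does not itself prescribe. Independence between metrics is a simplifying assumption; real KPIs are usually correlated, which changes the exact numbers. Function names are hypothetical.

```python
import random


def familywise_error_rate(num_metrics: int, alpha: float = 0.05) -> float:
    """P(at least one false positive) across independent metrics with no real effect."""
    return 1 - (1 - alpha) ** num_metrics


def simulate_familywise_error(num_metrics: int, alpha: float = 0.05,
                              trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo check. Under the null hypothesis, the p-value of a test
    with a continuous statistic is uniform on [0, 1], so each metric is a
    false positive with probability alpha."""
    rng = random.Random(seed)
    hits = sum(
        any(rng.random() < alpha for _ in range(num_metrics))
        for _ in range(trials)
    )
    return hits / trials


def bonferroni_threshold(num_metrics: int, alpha: float = 0.05) -> float:
    """Per-metric threshold that keeps the family-wise error rate at or below alpha."""
    return alpha / num_metrics


for k in (1, 5, 10, 20):
    print(f"{k:>2} metrics: P(some false winner) = {familywise_error_rate(k):.2f}")
print("Bonferroni per-metric threshold for 10 metrics:", bonferroni_threshold(10))
```

With 10 independent metrics, the probability of at least one spurious “winner” is about 40%, even though nothing changed between the arms.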

Avoid the mechanical, uncritical application of statistical inference. Most A/B testing platforms don’t support Bayesian analysis or other advanced statistical methods, even though these methods are increasingly being adopted.
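For teams whose platform lacks Bayesian analysis, a Beta-Binomial model of conversion rates is one of the simplest starting points. The sketch below assumes a uniform Beta(1, 1) prior for each arm and estimates, by Monte Carlo sampling from the two posteriors, the probability that variant B’s true conversion rate exceeds A’s. The prior, the counts, and the function name are illustrative assumptions; the original text does not prescribe a specific Bayesian method.

```python
import random


def prob_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   prior_alpha: float = 1.0, prior_beta: float = 1.0,
                   draws: int = 20_000, seed: int = 0) -> float:
    """Posterior probability that B's conversion rate exceeds A's.

    With a Beta(prior_alpha, prior_beta) prior and binomial data, each
    arm's posterior is Beta(prior_alpha + conversions,
    prior_beta + non-conversions).
    """
    rng = random.Random(seed)
    post_a = (prior_alpha + conv_a, prior_beta + n_a - conv_a)
    post_b = (prior_alpha + conv_b, prior_beta + n_b - conv_b)
    # Count how often a draw from B's posterior exceeds a draw from A's
    wins = sum(
        rng.betavariate(*post_b) > rng.betavariate(*post_a)
        for _ in range(draws)
    )
    return wins / draws


# Hypothetical counts: 3.0% vs 3.6% conversion on 10,000 users per arm
print(prob_b_beats_a(conv_a=300, n_a=10_000, conv_b=360, n_b=10_000))
```

Unlike a p-value, this number is a direct statement about the hypothesis given the data and the chosen prior, which is often what decision-makers actually want to know. It still calls for a decision rule fixed before the test starts.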

Worse, some experimentation platforms don’t just offer an overly simplistic, naive view of experimentation; they can actively encourage malpractice, for instance by inviting experimenters to stop a test as soon as they are pleased with the outcome. To combat this, experimenters must adhere to predefined stopping rules and avoid peeking at the data, which inflates false positives through repeated significance testing.
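The cost of peeking can be shown with a small simulation of A/A tests, where no real effect exists. One simulated experimenter checks significance after every batch of traffic and stops at the first p &lt; 0.05; the other tests once, at the planned end. To stay short and dependency-free, the sketch models the accumulating test statistic directly (each batch adds an independent standard-normal increment, a large-sample approximation to a two-sample z-test with equal batches) instead of simulating individual users. The function name and parameters are hypothetical.

```python
from __future__ import annotations

import random
from math import sqrt


def false_positive_rates(looks: int = 20, experiments: int = 10_000,
                         z_crit: float = 1.96, seed: int = 0) -> tuple[float, float]:
    """Return (peeking rate, fixed-horizon rate) of false positives in A/A tests."""
    rng = random.Random(seed)
    peeking_hits = 0
    fixed_hits = 0
    for _ in range(experiments):
        cumulative = 0.0
        crossed_at_any_look = False
        z = 0.0
        for k in range(1, looks + 1):
            cumulative += rng.gauss(0.0, 1.0)  # evidence from batch k (null: mean 0)
            z = cumulative / sqrt(k)           # z-statistic after k batches
            if abs(z) > z_crit:
                crossed_at_any_look = True     # a peeker would stop and declare a winner
        peeking_hits += crossed_at_any_look
        fixed_hits += abs(z) > z_crit          # only the final, planned look counts
    return peeking_hits / experiments, fixed_hits / experiments


peeking, fixed = false_positive_rates()
print(f"False positive rate with peeking: {peeking:.2f}; fixed horizon: {fixed:.2f}")
```

With 20 looks, the peeking strategy declares a false winner several times more often than the nominal 5%, while the fixed-horizon test stays close to 5%. When continuous monitoring is genuinely needed, sequential methods designed for repeated looks (for example, group sequential designs with adjusted boundaries) are the principled alternative.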

Despite these areas for improvement, other research best practices are gaining ground. For instance, universities and funding agencies are unveiling new open access and open data initiatives that make research available for reuse, replication, and further analysis. It's a great start, but the industry needs to go further to safely and successfully embrace the new culture of experimentation.

While relying on one's instincts to make decisions is dangerous, relying on poor experimentation can be equally problematic. Especially when researching consumer behavior, it's important to implement the right infrastructure, partner with academia, and not prioritize ease over rigor.

Andrea Polonioli is a product marketer at Coveo. He has a passion for innovation-driven companies and a research background in cognitive science.

Jacopo Tagliabue is the lead AI scientist at Coveo. He was the co-founder and CTO of his own startup, Tooso, before it was acquired by Coveo in 2019.
