Tears In Rain: Can Emotion AI Transform Customer Care?

March 9, 2018

Key takeaways

Emotion AI - otherwise known as affective computing - is part of a broader shift in which our relationship with technology is becoming more conversational and less one-sided

The applications of emotion AI are manifold, but the strongest use cases lie in augmenting human emotional intelligence - as demonstrated by the work of audEERING, Cogito, and Affectiva

Big data challenges lie ahead for emotion AI, largely thanks to the broader issues surrounding the way we define human emotions. It's unclear whether these obstacles can be overcome by neural networks.

By Ciarán Daly

LONDON, UK - Think back over your last year of customer interactions. How many customer complaints did you process? Out of those, how many people complained about contact centre wait times? Now, ask yourself: how many of those customers were angry, sad, or dissatisfied about the level of service they received? Do you even know?

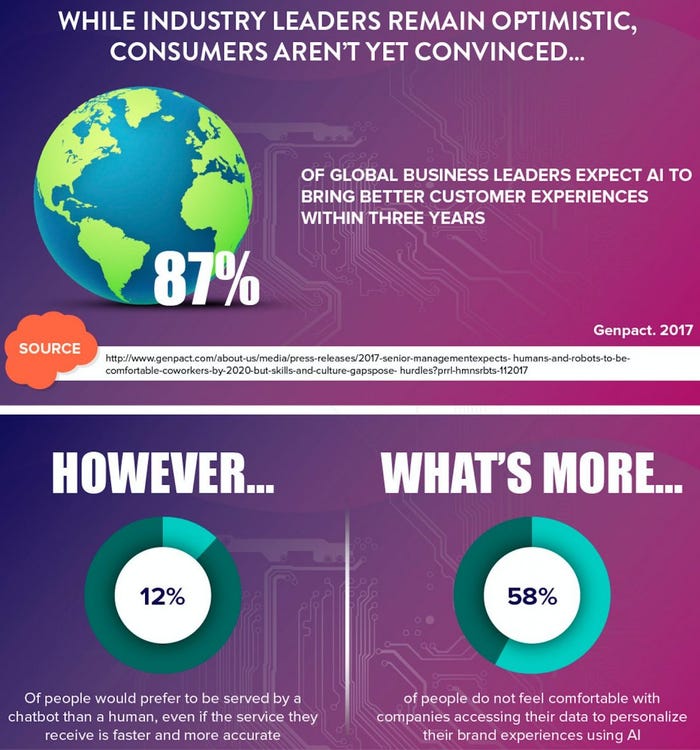

Satisfying customers, it seems, is a never-ending challenge. Significant inroads have already been made with AI to cut down bottlenecks and response times, from chatbots to back-end automation. However, understanding customer dissatisfaction primarily through quantitative measures such as response times is no doubt reductive.

This is because no matter how fast your response times are, customers will never be satisfied until they truly feel that their voices have been heard. The problem of dissatisfaction, far from being a numbers game, has its roots in a much broader issue: the typical one-sidedness of the way in which we relate to technology.

This could all be about to change thanks to emotion AI, otherwise known as affective computing, which draws on voice recognition, natural language processing, and machine vision data.

"You are currently #532 in the queue. A representative will be with you shortly. We thank you for your patience."

[Image: Rana El-Kaliouby, CEO, Affectiva]

Firstly, it is important to understand that emotion AI is part of a broader shift in the way we conceive of technology. As smart devices become more and more integrated into the everyday lives of both consumers and businesspeople, tech is becoming less instrumental and more conversational.

Affectiva CEO Rana el-Kaliouby believes this is down to our relationship with technology "becoming a lot more conversational and relational." Her company's solutions focus on gauging emotion from faces and voices, and the company claims to have analysed over 6 million faces for use cases in both the automotive and media sectors. She argues that "if we are trying to build technology to communicate with people, that technology should have emotional intelligence."

This doesn't quite imply that companies should be looking at developing robots capable of melancholy and depression in the vein of Marvin the Paranoid Android. The central challenge of emotion AI is training machines to recognise, interpret, and respond to human mood and emotion in digital interactions. Björn Schuller, CEO of audEERING and Associate Professor of Machine Learning at Imperial College London, believes that the challenge of emotion AI is three-fold.

Firstly, there's the ability of a machine to recognise and understand human emotion - and this is primarily where major advances are taking place today. Then, there's the ability of a machine to show emotion, regardless of whether it has an emotion or not. The third, machines emulating human emotion, is the subject of a series of sold-out speaking engagements Schuller has held across London entitled "Can AI Have Emotion?"

"In the movies, we are always being shown that people will interact very emotionally with machines, right?" he asks. "Actually, we are quite emotional with machines already. If your computer breaks down, don't you sometimes slam your keyboard, or shout at the loading screen?"

[Image: Marvin The Paranoid Android, V1 and V2]

While swearing at your keyboard can be cathartic, human frustration towards technology continues to fall on mostly deaf ears. That’s because computing paradigms are naturally oriented towards logic and reason, rather than emotional and interpersonal nuance.

What's more, our tendency to lash out at inanimate objects does not begin and end with computers. It actually speaks to a much wider frustration with systems, a frustration most prominent when we’re stuck on unending hold in a call centre queue, or thwarted by an uncooperative credit card.

Imagine, for a moment, that you've been stuck in a call-centre queue for 40 minutes. Your fury is only exacerbated by the tinny jazz muzak coming down the line; your significance in the machine boiled down into song. Even your repeated curse words don't seem to be doing the trick.

This time, though, something's different. The system picks up on how angry you are, how long you've been waiting, and immediately bumps you up to #1 in the queue. As you finally speak to a human, they seem unusually empathetic - listening to your concerns, anticipating the way you're feeling, and adapting their behaviour to your personal, one-on-one interaction.

This, in a nutshell, is what emotion AI can really address for businesses: that hot, burning feeling of frustration faced by customers when they come across a bottleneck in your service workflow. "If you have a tool capable of drawing attention to another's emotion and social behaviour in much greater detail than you ever could, this will generate even more accurate and precise data in the future," Schuller says. "Even our conversation right now could be enhanced by giving me feedback about details I might have missed."
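For readers who want to see the queue-jumping scenario in concrete terms, here is a minimal Python sketch. It is not drawn from any vendor's product: the `anger` score (assumed to come from some upstream emotion model, scaled 0 to 1), the `priority` function, and the `anger_weight` of 60 minutes are all hypothetical choices made purely for illustration.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Caller:
    # heapq pops the smallest item first, so a lower value means "served sooner".
    priority: float
    caller_id: str = field(compare=False)


def priority(anger: float, wait_minutes: float, anger_weight: float = 60.0) -> float:
    """Combine wait time and a hypothetical anger score (0 to 1) into one rank.

    With the assumed default weight, maximum anger counts the same as
    an extra 60 minutes of waiting.
    """
    return -(wait_minutes + anger_weight * anger)


def build_queue(callers):
    """Build a priority queue from (caller_id, anger, wait_minutes) tuples."""
    heap = [Caller(priority(anger, wait), cid) for cid, anger, wait in callers]
    heapq.heapify(heap)
    return heap


# Made-up callers: (id, anger score, minutes on hold).
callers = [
    ("calm_short", 0.1, 5.0),
    ("calm_long", 0.2, 30.0),
    ("angry_long", 0.9, 40.0),
]

queue = build_queue(callers)
order = [heapq.heappop(queue).caller_id for _ in range(len(callers))]
print(order)  # ['angry_long', 'calm_long', 'calm_short']
```

The weighting is the real design decision here: set it too high and the queue rewards shouting, set it too low and the emotion signal changes nothing.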

The age-old problem with emotion

Just as AI brings us back to fundamental discussions about the nature of mind and consciousness, emotion AI returns us to age-old questions around emotion. Is the way we conceive of emotion fundamentally flawed? Are affect and feeling simply a matter of data pattern recognition in the brain - and if so, are we ignoring some much deeper aspects of human behaviour and outlook?

Nearly half a century ago, the American psychologist Ulrich Neisser outlined ‘three fundamental and interrelated characteristics of human thought that are conspicuously absent from existing or contemplated computer programs’:

Human thinking always takes place in, and contributes to, a cumulative process of growth and development.

Human thinking begins in an intimate association with emotions and feelings which is never entirely lost.

Almost all human activity, including thinking, serves not one but a multiplicity of motives at the same time.

Nevertheless, in the decades that followed, AI systems were designed to understand human cognition without accounting for emotion. That’s perhaps why today, emotion is seen primarily through the quantitative lens of data, which is causing companies major headaches as they attempt to answer the very qualitative problems outlined by psychologists nearly half a century ago.

“To recognise emotion is, at first, a pattern recognition process—and so far, this has largely been a data problem, if you consider that speech recognizers these days are trained with the same amount of speech in a few hours as a human would hear in their entire lifespan,” argues Schuller.

“We’re talking about highly sensitive, difficult-to-obtain data. A lot of it has to be acted at first, but students in a lab pretending to be angry is not anywhere near subtle or realistic enough,” he goes on to explain. “We simply have very little labelled data—but also, the concept of emotion itself is not as sharply defined as many other pattern recognition tasks. Even psychologists have huge ongoing disputes about how best to model emotion.”

[Image: Waiting to speak to a customer care agent feels like years for some customers. Emotive AI can fix that.]

The difficulty undoubtedly lies in the culturally-contingent, context-specific nature of emotions. Neel Burton M.D. illustrates the problem effectively in Psychology Today:

“If I say, ‘I am grateful’, I could mean one of three things: that I am currently feeling grateful for something, that I am generally grateful for that thing, or that I am a grateful kind of person. Similarly, if I say ‘I am proud’, I could mean that I am currently feeling proud about something, that I am generally proud about that thing, or that I am a proud kind of person. Let us call the first instance (currently feeling proud about something) an emotional experience, the second instance (being generally proud about that thing) an emotion or sentiment, and the third instance (being a proud kind of person), a trait. It is very common to confuse or amalgamate these three instances […] Similarly, it is very common to confuse emotions and feelings.”

Augmenting human staff with emotion AI

While he agrees that obtaining large volumes of appropriate data is still the biggest challenge facing emotion AI, Dr. John Kane, VP of Signal Processing and Data Science at Cogito, a conversational guidance company, remains sceptical about the accuracy of emotion-related data. “In real life, people don’t display emotion in the sort of caricatured ways you would expect them to. If you develop machine learning systems using this data, then apply them to real conversations in the wild, you might find that they fall down quite a bit. This can be really, really problematic.”

[Image: Dr. John Kane, VP Signal Processing and Data Science, Cogito]

This is why, Kane explains, Cogito uses a continuous - rather than categorical - scale for labelling emotion across tens of millions of hours of call centre data. Cogito also operates an internal annotation team, specifically trained to continuously label the emotional dimensions of these interactions.

Their work is used to continuously bolster training and evaluation data for emotion-based machine learning systems - and is the backbone of their fully-automated real-time insights solution for call centre agents. Kane believes that this augmentation of human workers is the core use case for emotion AI.
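To make the difference between categorical and continuous labelling concrete, here is a small Python sketch. It is an illustration only, not Cogito's actual annotation scheme: the `valence` and `arousal` dimensions, the numeric ranges, and the three annotators' ratings are all assumed for the example.

```python
from collections import Counter
from statistics import mean

# Hypothetical ratings from three annotators for the same call segment.
# Categorical: each annotator picks exactly one label.
categorical = ["angry", "neutral", "angry"]

# Continuous: each annotator scores assumed dimensions on a sliding scale
# (valence from -1 to 1, arousal from 0 to 1).
continuous = [
    {"valence": -0.6, "arousal": 0.7},
    {"valence": -0.1, "arousal": 0.3},
    {"valence": -0.5, "arousal": 0.8},
]


def majority_label(labels):
    """Collapse categorical ratings to the most common label."""
    return Counter(labels).most_common(1)[0][0]


def mean_rating(ratings):
    """Average each continuous dimension across annotators."""
    return {dim: mean(r[dim] for r in ratings) for dim in ratings[0]}


label = majority_label(categorical)
rating = mean_rating(continuous)

print(label)
print(rating)
```

The majority vote discards the dissenting "neutral" rating entirely, whereas the averaged scores keep a trace of it: the segment comes out as moderately negative rather than simply "angry". That is one reason a continuous scale may suit the uncaricatured emotion Kane describes.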

"Affective computing isn't just about getting AI to recognise emotion, but developing an emotion-sensitive AI system that can provide meaningful feedback to people in real-time while they're actually doing their jobs. I see this technology as a sort of friendly cyborg sat on your shoulder," Kane laughs. "It's able to read through the qualitative aspects of the interaction and provide you with objective feedback as and when things happen. The idea is to encourage customer agents to use soft skills, timing, and their own emotion - things humans are so good at - to produce better interactions."

For Kane, emotion AI is pivotal to placing real human interactions at the centre of voice technology - and this is evident in Cogito's mission statement to provide integrated, real-time conversational guidance to contact centre staff using emotional recognition.

"When you're developing any emotion-based AI system, you really need to think about the human in the loop. Call centre agents might've completed 50 or 60 calls in a day, and it's quite understandable that they lose some awareness of how they - and the customer - are coming across," he says. "Providing real-time, meaningful feedback at salient moments is a really effective way of creating better interactions, and we've found this to be really effective in enterprise call centres. I think it comes down to being able to combine machine learning with behavioural signs in human-computer interaction research, in order to really provide something that is meaningful and useful for agents."

Feeling the future

The business use cases today are clear, but it remains to be seen whether the core challenge of emotion AI - bridging an affective gap between systems and users - will ever be overcome.

Schuller sees use cases across the spectrum of human interactions with technology, with implications for human mental health, social behaviour, and emotional literacy. "Particularly if I look at the digital natives - my children, for example - they use Alexa and expect that they can talk to every machine that more or less looks a little bit like Alexa," he says. "I imagine that the next generation of children will grow up speaking very naturally to these machines because the machines will be able to understand that."

[Image: Björn Schuller, Associate Professor of Machine Learning, ICL; CEO, audEERING.]

He believes that this will not only change the way we interact with machines, but it might also have some 'dark aspects' regarding human-to-human interactions in the future. "If children have socially and emotionally competent robots to look after them, or the elderly have robots to look after them, maybe at some point human behaviour itself will change in the long-run, because you might be used to always having friendly, kind robots around. That's one of the worries of mine - that it might impact human to human behaviour once we're surrounded by machines with such social intelligence but they are still servants."

For now, though, emotion AI technology is augmenting social interaction for those who most need it. Schuller's own company, audEERING, focuses primarily on audio emotional analysis and speech analysis. It provides solutions which can be run in real time on-device using a smartphone. The data does not leave the device, and the software gives access to a value-continuous representation of emotional feedback. Today, the company is already deploying it for a range of mental health use cases.

"The major use case right now is a solution for individuals on the autistic spectrum that have difficulty in recognising others' emotions. It gives them feedback on the emotion of others - Sheldon Cooper from the Big Bang Theory, for example, could have an app on his smartphone telling him when people are angry or sad. If you think further into the future, these solutions will be more precise and robust, and with much broader applications. If you were a car salesman, in the future you might be much more successful because this would give you precise feedback on the best moment to pursue clients, for instance."

As affective computing systems align increasingly with AI, it's unclear what the social impacts of emotionally intelligent interfaces could be. We're yet to see a world where everybody's feelings can be quantified down to unique datapoints, but the implications of this technology for customers today are profound. Only time will tell whether emotional AI strengthens human connections, or ultimately hollows them out. It's up to businesses to make that call.

Based in London, Ciarán Daly is the Editor-in-Chief of AIBusiness.com, covering the critical issues, debates, and real-world use cases surrounding artificial intelligence - for executives, technologists, and enthusiasts alike.
