Google Pursues Multitasking Medical AI to Unlock Health Data Insights

New Google models can understand connections across medical images and notes to support health care professionals

At a Glance

- Google researchers revealed promising medical AI models to provide clinicians with insights using multiple data sources.

- Med-PaLM M and ELIXR can interpret X-rays, doctor’s notes and genomic data to create reports on patient conditions.

Google is experimenting with multimodal large language models to power medical use cases.

Researchers at the tech giant have been developing several multimodal medical AI systems: AI systems that can process and understand data from different modalities, such as images, text and genomics.

Among Google’s research is Med-PaLM M, a large multimodal model that can encode and interpret various biomedical data modalities using the same model weights.

Another project is ELIXR, where scientists combined a language-aligned image encoder with Google’s flagship language model, PaLM 2, in an attempt to create a general purpose X-ray AI system.

“If successfully matured, multimodal medical LLMs might serve as the basis of new assistive technologies spanning professional medicine, medical research, and consumer applications,” wrote Greg Corrado and Yossi Matias, head of health AI, and vice president of Engineering and Research at Google Research, respectively, in a blog post.

Med-PaLM M

Among their experiments, Google’s researchers are trying to build a fully generalist system capable of absorbing information from various sources.

In a paper, ‘Towards Generalist Biomedical AI,’ Google researchers detail Med-PaLM M, which can draw on multiple sources of data to perform tasks.

Rather than having separate encoders and adapters for each data modality, Med-PaLM M combines one language model – PaLM – and a single vision encoder (ViT) to understand language and image data, respectively.

Corrado and Matias explained: “In this setup, text and tabular data modalities are covered by the LLM text encoder, but now all other data are treated as an image and fed to the vision encoder.”

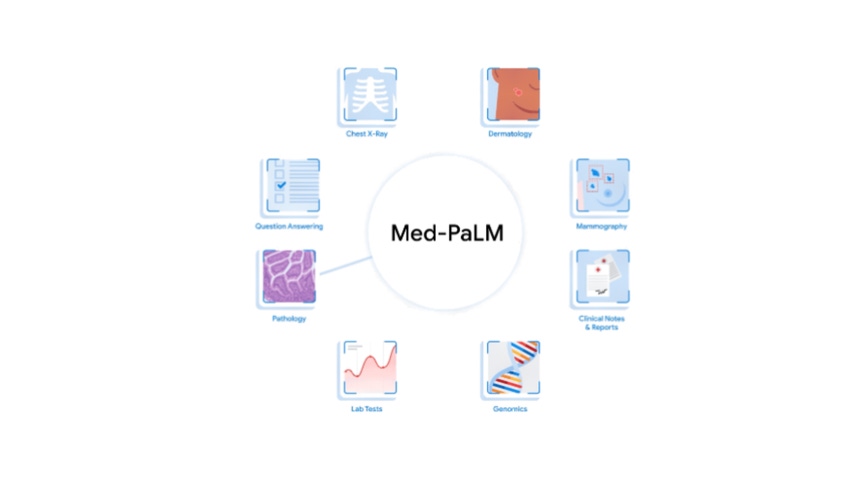

Med-PaLM M takes biomedical data from medical images such as X-rays, genomic records and clinical documents like doctor’s notes, and can draw insights from across those sources.

Google hopes to create a medical AI system capable of multitasking. Credit: Google Research
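The setup Corrado and Matias describe amounts to a routing rule: text and tabular inputs go through the language model, and everything else is treated as an image and sent to the vision encoder. The Python sketch below illustrates only that rule, under stated assumptions; it is not Google's code, and the `Input` record, the modality labels and the `route` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass

# Modalities that the quoted setup sends through the language model's text path.
TEXT_MODALITIES = {"text", "tabular"}


@dataclass
class Input:
    modality: str  # hypothetical label, e.g. "text", "tabular", "xray", "genomics"
    payload: str   # placeholder for the raw data


def route(inputs: list[Input]) -> list[tuple[str, str]]:
    """Assign each input to the text path or the vision-encoder path.

    Returns (path, payload) pairs in the original order, so a later step
    could interleave text and image embeddings into one sequence.
    """
    routed = []
    for item in inputs:
        # Anything that is not text or tabular is treated as an image.
        path = "llm_text" if item.modality in TEXT_MODALITIES else "vision_encoder"
        routed.append((path, item.payload))
    return routed


if __name__ == "__main__":
    case = [
        Input("text", "Patient reports shortness of breath."),
        Input("xray", "<chest X-ray pixels>"),
        Input("genomics", "<genomic data treated as an image>"),
        Input("tabular", "age=63, smoker=yes"),
    ]
    for path, payload in route(case):
        print(f"{path:15s} {payload}")
```

Keeping the inputs in their original order is a design choice of this sketch; a real model would replace each payload with learned embeddings rather than pass strings along.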

Med-PaLM M was used to generate reports for some 250 chest X-rays. Clinicians preferred its reports over those written by radiologists in up to 40.5% of cases.

Google’s paper suggests that the tool could have clinical utility, as it often surpassed specialist models on MultiMedBench, a new multimodal biomedical benchmark the researchers built to assess the model. MultiMedBench encompasses 14 diverse tasks, such as medical question answering, mammography and dermatology image interpretation, and radiology report generation and summarization. However, Google’s team acknowledged that the benchmark’s datasets are limited in size, modality and task diversity, a notable constraint when evaluating a system designed to multitask.

Google scientists also found the model challenging to scale because of the scarcity of medical data. The paper reads, “Given the wide array of modalities and tasks such generalist models are expected to understand and tackle, it is crucial that the encoders for such diverse modalities are scaled jointly with the language model. Otherwise, for tasks that require interpretation of data from a combination of modalities, the performance will end up being bottlenecked by the weakest encoder.”
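The “weakest encoder” argument can be made concrete with a deliberately simplified toy model. This is an assumption of the illustration, not a formula from the paper: each encoder gets a quality score between 0 and 1, and a task that needs several modalities is assumed to do no better than the worst encoder it depends on. The encoder names and scores below are hypothetical.

```python
# Toy model (assumption): a multimodal task is capped by its weakest required encoder.
ENCODER_QUALITY = {      # hypothetical quality scores in [0, 1]
    "text": 0.90,
    "xray": 0.85,
    "genomics": 0.40,    # an under-scaled encoder, e.g. due to scarce training data
}


def task_ceiling(required: list[str], quality: dict[str, float]) -> float:
    """Upper bound on task performance under the weakest-encoder toy model."""
    return min(quality[m] for m in required)


def best_upgrade(required: list[str], quality: dict[str, float]) -> str:
    """Return the encoder whose improvement would raise the ceiling: the weakest one."""
    return min(required, key=lambda m: quality[m])


if __name__ == "__main__":
    task = ["text", "xray", "genomics"]
    print(task_ceiling(task, ENCODER_QUALITY))  # 0.4
    print(best_upgrade(task, ENCODER_QUALITY))  # genomics

    # Improving only the text side leaves this task's ceiling unchanged.
    boosted = dict(ENCODER_QUALITY, text=0.99)
    print(task_ceiling(task, boosted))          # 0.4
```

Under this toy model, scaling the language model alone does nothing for a task bottlenecked by another encoder, which mirrors the paper’s case for scaling encoders jointly with the language model.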

Med-PaLM M is not being open sourced. However, the team behind it has described the underlying methodology and how it built on previously published models, so that other researchers can try similar approaches.

Google ELIXR

Embeddings for Language/Image-aligned X-Rays, or ELIXR, is another attempt by Google researchers to create a general-purpose AI system for medical use, in this case one that understands and interprets X-ray images.

The paper, ‘ELIXR: Towards a general purpose X-ray artificial intelligence system through alignment of large language models and radiology vision encoders,’ details how the researchers created the method.

The boffins trained a medical information adapter that maps the output of an existing or refined image encoder into a form that a large language model can understand.
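The adapter idea can be sketched as a small trainable layer sitting between a frozen image encoder and a frozen language model. This is a simplified illustration, not ELIXR’s actual architecture: it assumes a single linear projection that turns one image embedding into a few “soft tokens” sized for the language model, and every class name, function name and dimension below is hypothetical.

```python
import random


def matvec(weights: list[list[float]], vec: list[float]) -> list[float]:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


class LinearAdapter:
    """Hypothetical adapter: maps one image embedding to k LLM-sized soft tokens.

    In this sketch only these weights would be trained; the image encoder
    and the language model stay frozen.
    """

    def __init__(self, d_image: int, d_llm: int, num_tokens: int, seed: int = 0):
        rng = random.Random(seed)
        self.d_llm = d_llm
        self.num_tokens = num_tokens
        # One weight matrix producing num_tokens * d_llm output values.
        self.weights = [
            [rng.gauss(0.0, 0.02) for _ in range(d_image)]
            for _ in range(num_tokens * d_llm)
        ]

    def __call__(self, image_embedding: list[float]) -> list[list[float]]:
        flat = matvec(self.weights, image_embedding)
        # Split the flat output into num_tokens vectors of size d_llm.
        return [flat[i * self.d_llm:(i + 1) * self.d_llm] for i in range(self.num_tokens)]


def build_llm_input(soft_tokens: list[list[float]], text_embeddings: list[list[float]]) -> list[list[float]]:
    """Prepend the adapted image tokens to the embedded text prompt."""
    return soft_tokens + text_embeddings


if __name__ == "__main__":
    d_image, d_llm, k = 8, 4, 3              # toy sizes, not ELIXR's real dimensions
    image_embedding = [0.1] * d_image        # stand-in for a frozen encoder's output
    adapter = LinearAdapter(d_image, d_llm, k)
    soft_tokens = adapter(image_embedding)
    prompt = [[0.0] * d_llm, [1.0] * d_llm]  # stand-in for two embedded text tokens
    sequence = build_llm_input(soft_tokens, prompt)
    print(len(sequence), len(sequence[0]))   # 5 4
```

Because only the adapter’s weights change in this sketch, training touches a small fraction of the overall system, which is one way to read the “relatively modest computational resources” the researchers cite.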

The resulting system performs well at its target tasks and can even handle tasks it wasn’t trained for, including semantic search and visual question answering.

The ELIXR approach. Credit: Google Research
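Semantic search follows naturally from language-image alignment: if text and X-rays are embedded in a shared space, a text query can rank images by cosine similarity. The sketch below assumes such a shared embedding space already exists; the tiny hand-written vectors and image IDs are hypothetical stand-ins for real encoder outputs, not ELIXR data.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_search(query_emb: list[float], image_embs: dict[str, list[float]], top_k: int = 2) -> list[str]:
    """Return the top_k image IDs ranked by similarity to the query embedding."""
    ranked = sorted(
        image_embs,
        key=lambda image_id: cosine(query_emb, image_embs[image_id]),
        reverse=True,
    )
    return ranked[:top_k]


if __name__ == "__main__":
    # Hypothetical 3-d embeddings from a language-aligned image encoder.
    images = {
        "cxr_001": [0.9, 0.1, 0.0],
        "cxr_002": [0.1, 0.9, 0.1],
        "cxr_003": [0.8, 0.2, 0.1],
    }
    query = [1.0, 0.0, 0.0]  # hypothetical text embedding of a search phrase
    print(semantic_search(query, images))  # ['cxr_001', 'cxr_003']
```

No search-specific training appears here: ranking works only because the text and image embeddings were aligned beforehand, which is why alignment can yield capabilities beyond the tasks a system was explicitly trained on.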

Compared with existing methods, including supervised contrastive learning (SupCon), ELIXR required two orders of magnitude (roughly 100 times) less data to reach similar performance, Google researchers found.

ELIXR also showed promise on chest X-ray (CXR) vision-language tasks, achieving overall accuracies of 58.7% and 62.5% on visual question answering and report quality assurance tasks, respectively.

Corrado and Matias wrote that ELIXR uses “relatively modest computational resources” to train its adapter layers and allows the underlying large language model to “build on existing highly-optimized and validated models in each data domain.”

Google sets AI sights on health care

Google’s researchers were experimenting with AI models for health care settings long before ELIXR and Med-PaLM M.

The most prominent example is Med-PaLM 2, showcased at the company’s recent I/O event. Med-PaLM 2 can be prompted to identify medical issues in images such as X-rays. According to Google, the model achieved a ninefold reduction in inaccurate reasoning, approaching the performance of clinicians answering the same set of questions.

Google Cloud also has its own medical imaging platform, the Medical Imaging Suite. Unveiled in October 2022, the platform offers clinicians a suite of AI tools, including imaging datasets and dashboards, as well as tools like BigQuery and Looker.

Stay updated. Subscribe to the AI Business newsletter.
