How Neural Networks Can Think Like Humans And Why It Matters

Some neural networks are now more similar to human cognition than ever before, according to a study published in Nature


At a Glance

- Some neural networks are now more similar to human cognition than ever before, according to a study published in Nature.

- A method called meta-learning for compositionality helps neural networks learn new tasks from just a few examples without special programming.

AI may be starting to think more like people.

In a recent study, scientists proposed that some neural networks are now more similar to human cognition than ever before. The work is part of a growing body of research drawing connections between AI and human thought.

“Being made to model human thought processes is critical for developing AI systems that can be tailored to human needs and preferences so that they can effectively assist humans towards their goals,” Chenhao Tan, a professor of computer science at the University of Chicago who was not involved in the research, said in an interview.

Smarter networks

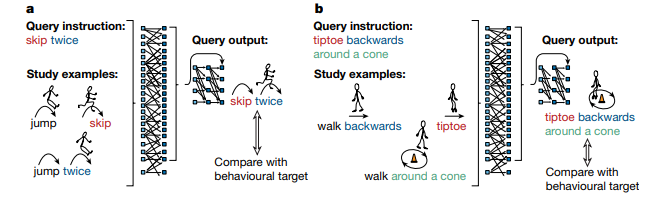

The research, published in Nature, showed that neural networks can generalize in a human-like way when trained with a method called meta-learning for compositionality (MLC). MLC helps a network learn new tasks from just a few examples without special programming or design. Instead, researchers guide the network with high-level instructions or examples, and the network develops the necessary learning skills on its own.

The study's experiments involved both AI models and human volunteers. Researchers used a made-up language with words such as "dax" and "wif." Each word was associated either with a colored dot or with a function that changed the order of the dots in a sequence, so the sequence of words determined the arrangement of the colored dots.

When presented with new phrases in this made-up language, both the AI models and the human participants had to deduce the underlying "grammar rules" linking the words to the dots. The human volunteers produced the correct sequence of dots approximately 80% of the time. Their errors tended to be consistent, such as treating a word as a single dot rather than as a function that rearranged the entire sequence of dots.
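To make the task concrete, here is a minimal Python sketch of an interpreter for a toy language in the spirit of the one described above. The meanings given to "dax" and "wif," and the extra words "lug," "fep," and "kiki," are hypothetical stand-ins chosen for illustration, not the study's actual grammar. The sketch also models the consistent error the volunteers made (treating every word, function words included, as a single dot) and scores responses by exact match.

```python
# Hypothetical toy grammar loosely modeled on the study's task.
# All word meanings here are assumptions for illustration only.
PRIMITIVES = {"dax": "RED", "wif": "GREEN", "lug": "BLUE"}


def interpret(phrase: str) -> list[str]:
    """Return the correct dot sequence for a phrase in the toy language."""
    words = phrase.split()
    # Hypothetical function "kiki": "X kiki Y" outputs Y's dots, then X's dots.
    if "kiki" in words:
        i = words.index("kiki")
        left = interpret(" ".join(words[:i]))
        right = interpret(" ".join(words[i + 1:]))
        return right + left
    # Hypothetical function "fep": "X fep" repeats X's dots three times.
    if words and words[-1] == "fep":
        return interpret(" ".join(words[:-1])) * 3
    # Any remaining word is a primitive that maps to one colored dot.
    return [PRIMITIVES[w] for w in words]


def interpret_one_to_one(phrase: str, guess: str = "YELLOW") -> list[str]:
    """Model the consistent error: every word, even a function word, becomes one dot."""
    return [PRIMITIVES.get(w, guess) for w in phrase.split()]


def exact_match_accuracy(predictions: list[list[str]], targets: list[list[str]]) -> float:
    """Fraction of responses whose whole dot sequence matches the target."""
    return sum(p == t for p, t in zip(predictions, targets)) / len(targets)


if __name__ == "__main__":
    queries = ["dax fep", "wif kiki dax", "lug fep kiki wif"]
    targets = [interpret(q) for q in queries]
    for q, t in zip(queries, targets):
        print(f"{q!r:22} correct: {t}  one-to-one: {interpret_one_to_one(q)}")
    misread = [interpret_one_to_one(q) for q in queries]
    print("one-to-one accuracy:", exact_match_accuracy(misread, targets))
```

Under this hypothetical grammar, the one-to-one reading gets none of the three sample queries right, so its accuracy is 0.0, while the correct interpreter scores 1.0 against itself.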

According to the researchers, people are good at learning new concepts and combining them with what they already know. For instance, once children learn to "skip," they can also understand "skip backward" or "skip around a cone twice" because they can put concepts together. There has long been debate about whether neural networks are capable of this kind of systematic thinking, with some researchers arguing that they are not.

“Our implementation of MLC uses only common neural networks without added symbolic machinery and without hand-designed internal representations or inductive biases,” the authors wrote in their paper. “Instead, MLC provides a means of specifying the desired behavior through high-level guidance and/or direct human examples; a neural network is then asked to develop the right learning skills through meta-learning.”
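Meta-learning of this kind is typically set up as training over many small episodes rather than one fixed task. The Python sketch below shows, under simplifying assumptions, how such episodes might be built: each one draws a fresh, random word-to-color mapping (the words, colors, and the repeat-word "fep" are hypothetical), gives a few study examples, and asks a query that can only be answered by combining them. A neural network would be trained to map the study examples and query to the target; here a hand-written solver stands in for it, only to check that every query is answerable from its own episode. This is not the paper's code.

```python
import random

# Hypothetical vocabulary; the study's actual words and meanings differ.
WORDS = ["dax", "wif", "lug", "zup"]
COLORS = ["RED", "GREEN", "BLUE", "YELLOW"]


def make_episode(rng: random.Random):
    """Build one episode: study examples, a query, and the query's target output."""
    # A fresh random mapping each episode, so meanings cannot be memorized
    # across episodes and must be inferred from the study examples.
    mapping = dict(zip(WORDS, rng.sample(COLORS, len(WORDS))))
    repeats = rng.choice([2, 3])  # the meaning of "fep" also varies per episode

    def meaning(phrase: str) -> list[str]:
        word, *rest = phrase.split()
        return [mapping[word]] * (repeats if rest == ["fep"] else 1)

    demo_word, query_word = rng.sample(WORDS, 2)
    study = [(w, meaning(w)) for w in WORDS]
    study.append((f"{demo_word} fep", meaning(f"{demo_word} fep")))
    query = f"{query_word} fep"  # "fep" never appears with this word in the study set
    return study, query, meaning(query)


def solve_from_study(study, query):
    """Stand-in for a trained network: answer the query using only the study examples."""
    colors = {p: out[0] for p, out in study if len(p.split()) == 1}
    repeats = next(len(out) for p, out in study if p.endswith(" fep"))
    return [colors[query.split()[0]]] * repeats


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        study, query, target = make_episode(rng)
        print("study:", study)
        print("query:", query, "->", target, "| solver:", solve_from_study(study, query))
```

Because the mapping changes from episode to episode, the only way to answer reliably is to infer meanings from the examples at hand and compose them, which is the skill meta-learning is meant to train.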

Just as our brains consist of interconnected neurons that process information, AI neural networks are composed of artificial neurons that work collectively to recognize patterns, solve problems, and learn from data, Iu Ayala Portella, the CEO of AI consultancy Gradient Insight, said in an interview. This structural likeness to the brain is at the heart of AI's ability to understand and interpret complex data.
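As a rough illustration of artificial neurons "working collectively," the Python sketch below builds a classic textbook example: three artificial neurons, each computing a weighted sum of its inputs plus a bias and passing it through a step function, that together compute XOR, a pattern no single neuron of this kind can represent. The weights are set by hand for clarity; in practice a network learns its weights from data. This is a generic illustration, not a description of any system mentioned in this article.

```python
def neuron(inputs: list[float], weights: list[float], bias: float) -> int:
    """One artificial neuron: weighted sum plus bias, then a step activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def xor_network(x1: int, x2: int) -> int:
    """Two hidden neurons (OR and AND) feed one output neuron: OR and not AND."""
    h_or = neuron([x1, x2], [1.0, 1.0], bias=-0.5)
    h_and = neuron([x1, x2], [1.0, 1.0], bias=-1.5)
    return neuron([h_or, h_and], [1.0, -1.0], bias=-0.5)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "->", xor_network(a, b))
```

Running it prints the XOR truth table: the output is 1 only when exactly one input is 1.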

“One fundamental contrast lies in the nature of AI's processing power,” Portella added. “While AI can analyze and process data at incredible speeds, it lacks the innate human capacity for understanding context, empathy, and true comprehension. AI can mimic language, but it doesn't truly ‘understand’ the way a human does. For instance, it may generate coherent text but lacks the emotional depth and context-awareness of a human writer.”

Human-like AI?

The new research comes as some recent reports suggest that AI could be developing human-like intelligence. In March, Microsoft researchers released a paper contending that the large language model GPT-4 marked a step toward artificial general intelligence, or AGI, a term for a machine capable of performing a wide range of tasks at the level of a human.

Not everyone agrees with the Microsoft researchers' assertions. Gary Marcus and Ernest Davis, AI researchers at New York University, suggested that GPT may have been trained on articles about theory-of-mind tests and could have memorized the answers.

Scientists from the University of Sheffield argued in a recent study that AI can imitate how humans learn but is unlikely to think exactly like humans unless it can sense and feel the real world.

The research, published in the journal Science Robotics, asserted that human intelligence arises from complex brain subsystems shared by all vertebrates. The researchers argued that this brain architecture, together with humans' real-world experience and evolutionary learning, must be taken into account when building AI systems.

Stay updated. Subscribe to the AI Business newsletter.

“ChatGPT, and other large neural network models, are exciting developments in AI which show that really hard challenges like learning the structure of human language can be solved. However, these types of AI systems are unlikely to advance to the point where they can fully think like a human brain if they continue to be designed using the same methods,” researcher Tony Prescott said in a news release.

One advantage of human-like AI is that it could be more aware of context than current models, Portella noted.

“For example, AI in autonomous vehicles that understands human-like reasoning can make safer and more predictable decisions on the road,” he added. “In fields like health care, AI that emulates human thought processes can assist in diagnosing complex diseases by considering a patient's history, symptoms, and emotional state, just as a human doctor would.”
