NeuroAI: Tapping the Human Brain to Fuel AI Development

Is brain power the secret to unlocking AI understanding?


At a Glance

- Can AI learn better by mimicking how the human brain learns from examples and prior experiences?

- Google DeepMind's Dileep George said NeuroAI is an alternative to the current endless scaling of systems to improve them.

The most complex computer in the world is the human brain. This slab of fat, water, protein, carbs, salts and nerves is more complex than any AI system to date. Could understanding how it works be the key to unlocking the next generation of AI?

Experts at the World AI Cannes Festival seemed to think so. The concept they call NeuroAI draws on the way our brains learn from examples and prior experiences, an approach that could teach AI tasks more effectively than merely training it on ever-increasing amounts of data.

Tristan Stöber, a research leader from Ruhr-Universität Bochum, cited the example of baby horses – how they can walk mere hours after they are born.

Imagine neuromorphic hardware that would allow a neural network to be integrated directly onto a device, mimicking the action potentials of the brain.

Stöber explained: “You have asynchronous computation. It's not like a neural network where for every time step, every clock step, you compute the activity of all neurons at the same time. But you basically integrate information and only when it reaches a certain threshold, it sends an electric pulse further. And that means that when there's nothing interesting, there is hardly any energy use.”
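The threshold behavior Stöber describes can be illustrated with a minimal sketch, assuming a simple leaky integrate-and-fire model. The class name, the parameter values and the `run` helper are hypothetical choices made for illustration, not details from the talk or from any particular neuromorphic chip.

```python
from dataclasses import dataclass


@dataclass
class IntegrateAndFireNeuron:
    """Illustrative leaky integrate-and-fire neuron (all values are assumptions)."""
    threshold: float = 1.0  # assumed firing threshold, arbitrary units
    leak: float = 0.5       # assumed decay factor per silent time step
    potential: float = 0.0  # accumulated input so far
    last_time: int = 0      # time of the most recent input event
    updates: int = 0        # how many times the neuron actually did work

    def receive(self, time: int, value: float) -> bool:
        """Integrate one input event; return True if the neuron fires a pulse."""
        self.updates += 1
        # Apply the decay for all the steps that were skipped since the last event.
        elapsed = time - self.last_time
        self.potential = self.potential * (self.leak ** elapsed) + value
        self.last_time = time
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after sending the pulse
            return True
        return False


def run(neuron: IntegrateAndFireNeuron, inputs: list[float]) -> list[int]:
    """Feed inputs step by step, doing no work on steps with no input."""
    spike_times = []
    for time, value in enumerate(inputs):
        if value == 0.0:
            continue  # nothing interesting happened: no computation at all
        if neuron.receive(time, value):
            spike_times.append(time)
    return spike_times
```

In this sketch, a sequence of ten time steps with only four non-zero inputs triggers four updates rather than ten, which is the energy-saving idea in miniature: quiet periods cost nothing.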

He proposed that such systems could power robots more akin to those seen in science fiction, potentially giving AI a better understanding of the world around it, something current systems largely lack.

From balloons to planes

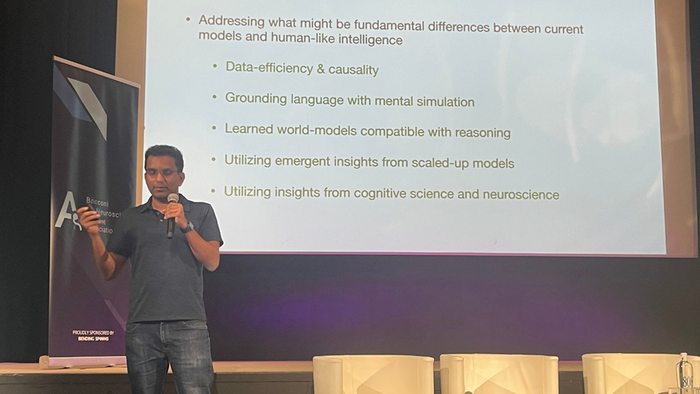

Dileep George, director of research at Google DeepMind, proposed NeuroAI as an alternative to endlessly scaling systems in a bid to improve them.

Google DeepMind's Dileep George

George likened the concept to early flight: most aeronautics efforts in the 1920s and 1930s focused on balloons that were scaled up endlessly. But following the Hindenburg disaster in 1937, airplanes became the focus.

The DeepMind research director said NeuroAI could be the AI equivalent of the airplane and that researchers should not be satisfied with solely scaling systems.

Today’s large language models are trained mostly on language tokens. According to George, that is not enough: he advocated grounding models with mental simulations as a means of teaching them how to perform tasks.

“Language is just a way to control mental simulations,” he said, adding that even if humans did not have language, their experiences would give them enough information to act. “Sentences alone do not make sense. Language can fake it just like balloons can fly.”

Synapses on a chip

Achieving brain-like AI is a long way off and requires a lot more research. There have been some breakthroughs, like IBM’s NorthPole chips that are meant to mimic how the human brain processes information.

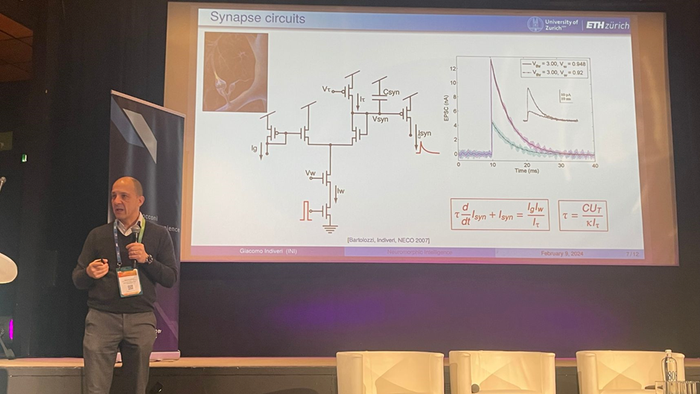

One breakthrough showcased in Cannes was the idea of synapse circuits. Professor Giacomo Indiveri from the University of Zurich and ETH Zurich presented a way of recreating brain synapse circuits in chips.

Prof. Giacomo Indiveri from the University of Zurich and ETH Zurich

Indiveri explained that these simple circuits can be recreated with transistors and placed on a chip to create neuromorphic processors.

These are designed to use little power, offer lower latency and be more compact. Indiveri said drones are already using them today.

However, scaling up such processors is where it gets tough. Indiveri explained that scaling a neuromorphic processor requires a larger area on a chip. Such systems also have low accuracy.

“I would not use these chips to control my bank account, but I would put it in a Rosie the Robot,” he said.

If it is possible to build synapse-like structures on small-scale chips today, then with more research, the mysteries of the brain could finally make what now seems improbable for AI a reality.
