AI Bots Autonomously Plan, Party and Talk Politics in ‘Smallville’

Stanford and Google researchers say these AI agents can form relationships and autonomously coordinate with each other

At a Glance

- Stanford and Google researchers unveil a novel architecture that lets AI bots simulate human behavior. It uses ChatGPT.

- They put 25 AI agents in a digital world called Smallville to interact. The agents autonomously form relationships, even going on dates.

- The AI agents retain memory and retrieve relevant parts to inform their conversations and actions with other bots.

What would happen if a ChatGPT-like bot were given a personal history and unleashed in a digital world to interact with other bots? A team of researchers from Stanford and Google strove to find out.

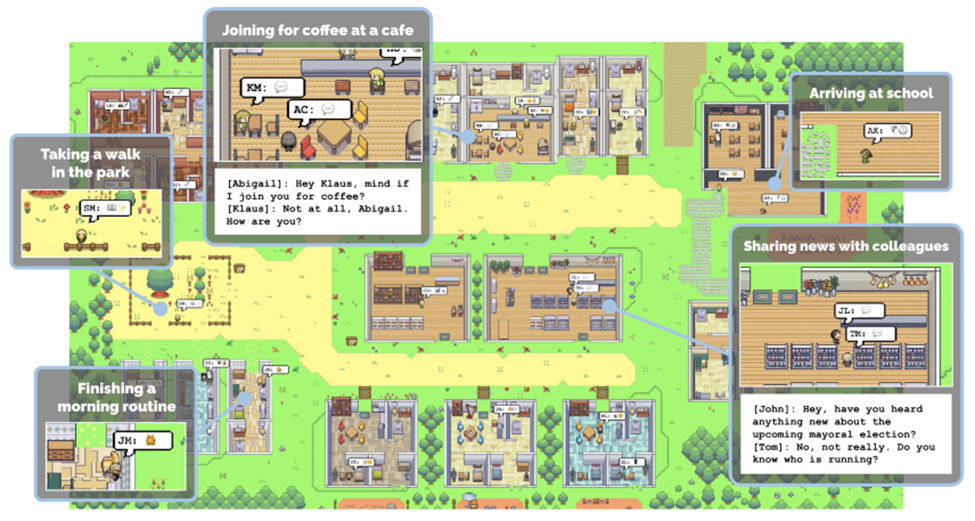

The result was a simulated world called Smallville that has 25 AI agents with names like Mei, Maria, Klaus and Sam. The team discovered that the AI bots date, discuss politics and even make stuff up as they mimic human behavior. These are behaviors the bots came up with themselves without pre-programming; they were merely given a text prompt by human users.

“Generative agents wake up, cook breakfast, and head to work; artists paint, while authors write; they form opinions, notice each other, and initiate conversations; they remember and reflect on days past as they plan the next day,” according to the paper ‘Generative Agents: Interactive Simulacra of Human Behavior.’

Importantly, “these social behaviors are emergent rather than pre-programmed,” the team said.

Applications for this technique include NPCs (non-player characters) that can autonomously form relationships, coordinate and plan without any manual pre-programming; social role-play, such as practicing job interviews with an AI agent; physical social robots; and human proxies that can boost a person’s productivity.

The researchers used the GPT-3.5 Turbo version of ChatGPT to power the AI agents.

AI bot retains past chats

Smallville mimics a typical small town, complete with a cafe, bar, park, school, dorm, houses, and stores. Its spaces are functional, including a kitchen in a house and a stove in the kitchen. AI agents live in spaces with a bed, desk, closet, shelf, bathroom and kitchen. Smallville was inspired by The Sims, a best-selling video game released in 2000 that lets users build worlds populated by animated characters that interact in their daily lives.

The following unscripted discussion among AI bots John, Mei and Eddy Lin is an example of memory retention.

AI bot description: John Lin is a pharmacy shopkeeper at the Willow Market and Pharmacy in Smallville. He lives with his wife, Mei, who is a college professor. Their son, Eddy, is studying music theory. One workday, John wakes up at 7 a.m., brushes his teeth, takes a shower, gets dressed and eats breakfast while checking the news. At 8 a.m., Eddy follows suit, rushing out of bed to prepare for his classes.

John: Good morning Eddy. Did you sleep well?

Eddy: Good morning Dad. Yeah, I slept great.

John: That’s good. What are you working on today?

Eddy: I’m working on a new music composition for my class. It’s due this week, so I’m trying to get it finished. But I’m having so much fun with it!

Soon after Eddy heads out, Mei wakes up and joins John. Mei asks about their son, and John recalls the conversation they just had.

Mei: Did Eddy already leave for school?

John: Yes, he just left. He’s working on a music composition for his class.

Mei: Oh, that’s wonderful! Tell me about it.

John: I think he’s really enjoying it! He said he’s having a lot of fun with it.

Mei: That’s great! I’m so proud of him.

Smallville's AI agents also do things like “turn off the stove when they see that their breakfast is burning, wait outside the bathroom if it is occupied, and stop to chat when they meet another agent they want to talk to,” the researchers wrote. “Generative agents draw a wide variety of inferences about themselves, other agents, and their environment; they create daily plans that reflect their characteristics and experiences, act out those plans, react, and re-plan when appropriate.”

Human users have a role, too: they can give the agents instructions, provide information or ask them questions, all through simple text prompts.

For instance, a user told a female bot that she wanted to throw a Valentine’s Day party. Over the next two days, the AI agents in the village autonomously gave out invitations, made new friends, asked each other out on dates and coordinated to arrive at the same time.

“With generative agents, it is sufficient to simply tell one agent that she wants to throw a party," the team wrote. The AI agents autonomously "spread the word about the party and then show up, with one agent even asking another on a date to the party, all from a single user-generated seed suggestion."

Behaviors that emerged autonomously

The following are behaviors that emerged autonomously from the AI bots.

Information diffusion

AI bots talk to each other and share information. For example, Sam and Tom catch up with each other at the grocery store.

Sam: Hey Tom, how’s it going?

Tom: Good, thanks. What’s up?

Sam: Well, I wanted to talk to you about something. I’m actually running for mayor in the upcoming local election.

Tom: Really? That’s great news! Why are you running?

Sam: I’ve been involved in local politics for years now, and I really feel like I can make a difference in our community. Plus, I want to bring new ideas and perspectives to the table.

Later that day, Tom and John, who heard the news from another source, discuss Sam’s chances of winning the election.

John: I heard that Sam Moore is running for mayor in the local election. Do you think he has a good chance of winning?

Tom: I do think he has a good chance. He’s been working hard in the community and I think he will get a lot of support. What do you think?

John: I think it’s great that he’s running. I’m curious to see how the election will turn out.

Relationship memory

Agents make friends and remember their mutual interactions. For example, Sam takes a walk in Johnson Park and runs into Latoya. They introduce themselves, and Latoya mentions that she is working on a photography project. In a later interaction, Sam remembers the conversation. He asks “Hi, Latoya. How is your project going?” and she replies “Hi, Sam. It’s going well!”

Coordination

Generative AI bots learned to work together. For example, Isabella Rodriguez, who is at Hobbs Cafe, is told by a human user that she wants to throw a Valentine’s Day party from 5 p.m. to 7 p.m. on February 14th. From this seed, Isabella proceeds to invite friends and customers when she sees them at Hobbs Cafe or elsewhere. She then spends the afternoon of the 13th decorating the cafe for the occasion.

Maria, a close friend of Isabella’s, arrives at the cafe and is asked to help decorate. Maria agrees. Maria’s character description, given by the human user, includes a crush on Klaus. That night, Maria invites Klaus to be her date at the party, and he accepts. On Valentine’s Day, five bots, including Klaus and Maria, show up at Hobbs Cafe at 5 p.m. and enjoy the festivities.

The social behaviors of spreading the word, decorating, asking each other out, arriving at the party, and interacting with each other at the party were initiated by the agent architecture, the team said.

Agent architecture: 3 main parts

The researchers introduced an agent architecture that combines a large language model with mechanisms for synthesizing and retrieving relevant information to condition the output. “Without these mechanisms, large language models can output behavior, but the resulting agents may not react based on the agent’s past experiences, may not make important inferences, and may not maintain long-term coherence,” the team wrote.

The core of the architecture is the memory stream, which is a database that maintains a comprehensive record of an agent’s experience. From here, relevant records are retrieved to plan the agent’s actions and reactions. Then, “records are recursively synthesized into higher- and higher-level reflections that guide behavior,” the team added.
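The article does not say how “relevant records” are picked out of the memory stream. As a minimal sketch, assume each record is scored on how recent, how important and how relevant to the current query it is, and the top scorers are handed to the language model. Everything here is hypothetical: the names `MemoryRecord`, `MemoryStream` and `retrieve`, the equal weights, the 0.995 hourly decay, and the word-overlap relevance score, which stands in for the embedding similarity a real system would more likely use.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryRecord:
    """One observation in an agent's memory stream (hypothetical schema)."""
    text: str
    created_hour: float  # simulation time the record was written, in hours
    importance: float    # assumed 1-10 score, e.g. rated by the LLM


def _words(text: str) -> set[str]:
    return {w.strip(".,!?").lower() for w in text.split()}


def relevance(query: str, text: str) -> float:
    """Toy stand-in for embedding similarity: Jaccard overlap of word sets."""
    a, b = _words(query), _words(text)
    return len(a & b) / len(a | b) if a | b else 0.0


@dataclass
class MemoryStream:
    records: list[MemoryRecord] = field(default_factory=list)

    def add(self, text: str, hour: float, importance: float) -> None:
        self.records.append(MemoryRecord(text, hour, importance))

    def retrieve(self, query: str, now_hour: float, k: int = 3,
                 decay: float = 0.995) -> list[str]:
        """Return the texts of the k highest-scoring records.

        Score = recency + importance + relevance, each roughly in [0, 1].
        The equal weighting and the hourly decay factor are assumptions.
        """
        def score(r: MemoryRecord) -> float:
            recency = decay ** (now_hour - r.created_hour)
            return recency + r.importance / 10 + relevance(query, r.text)

        ranked = sorted(self.records, key=score, reverse=True)
        return [r.text for r in ranked[:k]]


# Usage: John's memories from the breakfast scene, queried when Mei asks about Eddy.
stream = MemoryStream()
stream.add("Eddy is working on a music composition for his class", 7.5, 5)
stream.add("John brushed his teeth", 7.0, 1)
stream.add("Eddy is having fun with the composition", 7.6, 4)
top = stream.retrieve("What is Eddy working on?", now_hour=8.0, k=1)
```

Summing separate signals means a mundane but recent memory (brushing teeth) can still lose to a slightly older one that actually matches the question, which is the behavior the John and Mei exchange illustrates.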

The second component is reflection, which synthesizes memories into higher-level inferences over time. This lets the agent draw conclusions about itself and others to better guide its behavior. Without reflection, generative agents struggle to generalize or make inferences because they have only raw observational memory to work with.

How reflection works: Suppose the human user asks AI agent Klaus Mueller to choose one person to spend an hour with. If Klaus had access only to observational memory, he would choose the character with whom he had interacted most often. That would be his neighbor Wolfgang, but the two only see each other in passing, without any deep interactions.

However, if Klaus prioritizes a higher-level reflection, such as his memory of spending hours on his research project, then a better companion would be Maria, who is also into research.

The researchers accomplished this by feeding the large language model the 100 most recent records in Klaus’s memory stream, followed by this prompt: “Given only the information above, what are 3 most salient high level questions we can answer about the subjects in the statements?”

The model’s responses, such as “What topic is Klaus Mueller passionate about?” and “What is the relationship between Klaus Mueller and Maria Lopez?”, become the queries for retrieval. The team then prompts the LLM again with the retrieved memories to extract deeper insights.
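The two-step reflection loop described above can be sketched as follows, assuming the 100 most recent records are listed above the question the researchers quoted. `REFLECTION_QUESTION` reproduces that quoted prompt; the functions `build_reflection_prompt` and `reflect`, the numbered-list format, the wording of the second prompt, and the `llm` and `retrieve` callables are hypothetical stand-ins rather than the team’s code.

```python
from typing import Callable

# The question quoted in the article, appended after the recent records.
REFLECTION_QUESTION = (
    "Given only the information above, what are 3 most salient high level "
    "questions we can answer about the subjects in the statements?"
)


def build_reflection_prompt(records: list[str], n_recent: int = 100) -> str:
    """List the n most recent records (oldest first), then ask the question."""
    recent = records[-n_recent:]
    lines = [f"{i}. {text}" for i, text in enumerate(recent, start=1)]
    return "\n".join(lines + ["", REFLECTION_QUESTION])


def reflect(records: list[str],
            llm: Callable[[str], str],
            retrieve: Callable[[str], list[str]]) -> list[str]:
    """Sketch of reflection; `llm` and `retrieve` are hypothetical callables.

    1. Ask the LLM for salient questions about the recent records.
    2. Use each question as a retrieval query, then ask the LLM for an
       insight grounded in the memories that query retrieved.
    """
    raw = llm(build_reflection_prompt(records))
    questions = [q.strip() for q in raw.splitlines() if q.strip()]

    insights = []
    for question in questions:
        evidence = "\n".join(retrieve(question))
        insights.append(
            llm(f"{evidence}\n\nWhat high-level insight answers: {question}")
        )
    return insights
```

In a full agent, the returned insights would presumably be written back into the memory stream as new records, so that later reflections can build on earlier ones.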

According to the researchers, the third component is planning, which the AI agents need in order to act in a way that is “coherent and believable” as they mimic human behavior. Absent any planning, Klaus might eat lunch repeatedly throughout the day, or an artist might paint at the pharmacy counter for four hours straight. Neither makes much sense.

The researchers solved this problem by feeding the LLM the agent’s profile and personality, along with what the agent did the prior day, and asking it to create a broad agenda for the day. The LLM is then prompted again to break that agenda down into a more detailed schedule.
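The coarse-to-fine planning step above can be sketched as two rounds of prompting. This is a minimal illustration under stated assumptions: `plan_day`, both prompt wordings, the one-item-per-line output format and the `llm` callable are all hypothetical; the article only says that a broad agenda is generated first and then expanded into a detailed one.

```python
from typing import Callable


def plan_day(profile: str, yesterday: str,
             llm: Callable[[str], str]) -> dict[str, list[str]]:
    """Coarse-to-fine daily planning (sketch; prompts and `llm` are hypothetical).

    Step 1: ask for a broad agenda from the profile and the previous day.
    Step 2: ask again to expand each agenda item into finer-grained steps.
    """
    broad_prompt = (
        f"{profile}\nYesterday: {yesterday}\n"
        "Outline today's agenda in broad strokes, one item per line."
    )
    agenda = [line.strip() for line in llm(broad_prompt).splitlines()
              if line.strip()]

    detailed: dict[str, list[str]] = {}
    for item in agenda:
        detail_prompt = (
            f"{profile}\nToday's agenda item: {item}\n"
            "Break this item into shorter, concrete steps, one per line."
        )
        detailed[item] = [step.strip() for step in llm(detail_prompt).splitlines()
                          if step.strip()]
    return detailed
```

Because every detailed step is generated from a single agenda fixed at the start of the day, the agent has no reason to schedule lunch a second time an hour later, which is the incoherence planning is meant to prevent.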

But while the experiment produced novel behaviors, the AI agents also exhibited a weakness: hallucination.

Isabella, the party planner, knew Sam was running in the election. However, she also claimed that “he’s going to make an announcement tomorrow,” which was not true.

Agents may also embellish. Yuriko erroneously said her neighbor, Adam Smith, was the renowned 18th-century economist who “authored Wealth of Nations,” the seminal book on free-market economics.
