Meet Gorilla: The AI Model That Beats GPT-4 at API Calls

Researchers want to build an ‘open source, one-stop-shop for all APIs’

At a Glance

- UC Berkeley researchers have created Gorilla, an AI model designed to offer improved API calls without any additional coding.

- Gorilla beats Anthropic’s Claude and Open’s GPT-4 at API calls while reducing hallucinations and supporting version changes.

Researchers at UC Berkeley have released an AI model capable of communicating with APIs faster than OpenAI’s GPT-4.

The researchers unveiled Gorilla, a Meta LLaMA model fine-tuned to improve its ability to make API calls – or more simply, work with external tools. Gorilla itself is an end-to-end model and is tailored to serve correct API calls without requiring any additional coding.

“It's designed to work as part of a wider ecosystem and can be flexibly integrated with other tools,” the team behind it said.

According to the team behind Gorilla, models like GPT-4 struggle with API calls “due to their inability to generate accurate input arguments and their tendency to hallucinate the wrong usage of an API call.”

The researchers argue that Gorilla “substantially mitigates” hallucinations and can enable flexible user updates or version changes.

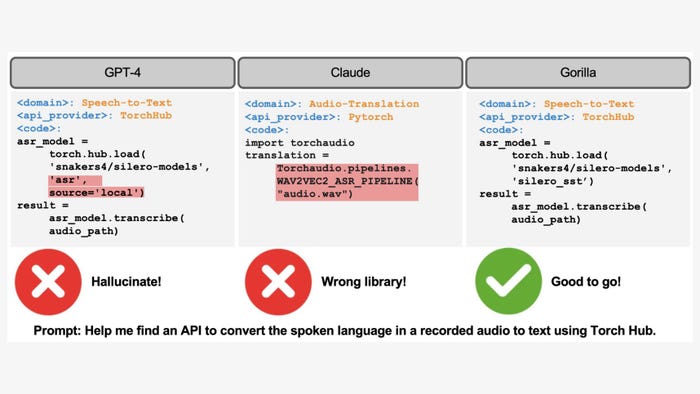

Example API calls generated by GPT-4, Claude, and Gorilla for the given prompt. In this example, GPT-4 presents a model that doesn’t exist, and Claude picks an incorrect library. In contrast, Berkeley's model, Gorilla, can identify the task correctly and suggest a fully-qualified API call. Image: UC Berkeley

According to the researcher’s paper, Gorilla outperforms both GPT-4 and Anthropic’s Claude in terms of API functionality accuracy as well as reducing hallucination errors.

The team behind the model said they want to build “an open source, one-stop-shop for all APIs” for large language models to interact with. They stressed that the Gorilla project will “always remain open source.”

It’s not the only model or concept where large language models interact with APIs. Facebook parent Meta recently published Toolformer, a way for language models to handle multiple API calls for natural language processing use cases. Toolformer, for example, uses a calculator to answer math questions or a machine translation system to predict text autonomously.

APIBench dataset

To evaluate the model's ability, the researchers published APIBench, a dataset that combines APIs from repositories platforms including HuggingFace, TorchHub and TensorHub.

The researchers constructed a dataset comprised of every API call in TorchHub (94) and TensorHub (696) and the most downloaded 20 models per task category from HuggingFace (a total of 925). The UC Berkeley scientists also generated 10 synthetic user question prompts per API using Self-Instruct.

To evaluate the functional correctness of the generated API, the researchers adopted a common AST sub-tree matching technique. They explained:

“We first parse the generated code into an AST tree, then find a sub-tree whose root node is the API call that we care about (e.g., torch.hub.load) and use it to index our dataset. We check the functional correctness and hallucination problem for the LLMs, reporting the corresponding accuracy.” Gorilla is then finetuned with document retrieval using the dataset.

Both the Gorilla model and the code are available via GitHub.

Read more about:

ChatGPT / Generative AIAbout the Author(s)

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)