Meta Researchers Use AI to Generate Images from the Human Brain

Meta researchers developed a system to generate reconstructions of images based on human brain activity. It is imperfect, but a breakthrough.

At a Glance

- Meta has developed an AI system that can generate images based on what a person is thinking when shown an image.

- The system is not perfect, with generations often distorted, but could pave the way for real-time brain-computer interfaces.

Imagine being able to generate images from a human’s brain activity. That is exactly what researchers at Facebook parent Meta have done with artificial intelligence.

In a new paper titled ‘Brain Decoding: Towards a Real-Time Decoding of Images from Brain Activity,’ researchers outlined an AI system capable of decoding visual representations in the brain using magnetoencephalography (MEG) signals.

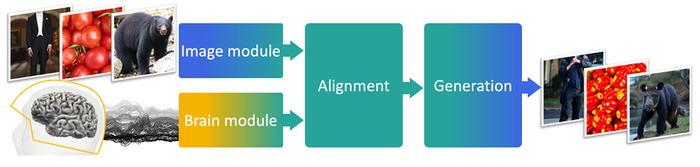

The researchers used deep learning models to align MEG signals with pretrained representations of images, allowing them to identify matching images from brain activity. They could then reconstruct images from the MEG signals by feeding the decoded representations into generative models.

The images lacked fine low-level visual details compared to reconstructions from functional magnetic resonance imaging (fMRI), because MEG signals have a lower spatial resolution. However, the experiment showed it is possible to decode visual information from the human brain, a potential way to enable brain-computer interface applications.

“We're excited about this research and hope that one day it may provide a stepping stone toward non-invasive brain-computer interfaces in a clinical setting that could help people who have lost their ability to speak,” Meta’s AI team wrote in a post on X (Twitter).

How does it work?

Meta’s researchers developed a method for reconstructing visual images from brain activity recorded with MEG.

MEG maps brain activity by using magnetometers to record the magnetic fields produced by electrical currents occurring naturally in the brain. It is largely used in clinical settings to find irregularities in the brain, but researchers have also been experimenting with it to measure brain activity.

Meta's team created a three-module pipeline to decode images from MEG signals, consisting of:

- Pretrained image embeddings

- An ‘MEG module’ trained end-to-end

- A pretrained image generator

They took a convolutional neural network and trained it with contrastive and regression objectives to align MEG signals with image embeddings. The researchers used a public dataset of MEG recordings acquired from volunteers and released by Things, an international consortium of academic researchers.
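To make the idea of combining contrastive and regression objectives more concrete, here is a minimal NumPy sketch. It is not Meta's code: the function names, the `temperature` value and the `weight` used to mix the two losses are illustrative assumptions, and the arrays stand in for the outputs of an MEG module and a pretrained image encoder.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    # Scale each row to unit length so dot products become cosine similarities.
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)

def log_softmax(logits):
    # Numerically stable log-softmax over each row.
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def contrastive_loss(meg_emb, img_emb, temperature=0.1):
    # CLIP-style objective: row i of meg_emb should be most similar to
    # row i of img_emb among all images in the batch.
    sims = l2_normalize(meg_emb) @ l2_normalize(img_emb).T / temperature
    return float(-np.mean(np.diag(log_softmax(sims))))

def regression_loss(meg_emb, img_emb):
    # Mean squared error between predicted and target embeddings.
    return float(np.mean((meg_emb - img_emb) ** 2))

def combined_loss(meg_emb, img_emb, weight=0.5, temperature=0.1):
    # Hypothetical weighting; how the two terms are balanced is an assumption.
    return (weight * contrastive_loss(meg_emb, img_emb, temperature)
            + (1.0 - weight) * regression_loss(meg_emb, img_emb))

# Toy data: 8 "images" with 16-dimensional embeddings.
rng = np.random.default_rng(0)
image_embeddings = rng.normal(size=(8, 16))
well_aligned = image_embeddings + 0.01 * rng.normal(size=(8, 16))
unaligned = rng.normal(size=(8, 16))

print("aligned loss:  ", combined_loss(well_aligned, image_embeddings))
print("unaligned loss:", combined_loss(unaligned, image_embeddings))
```

In this toy setup, predictions that sit close to their target embeddings score a lower loss than unrelated ones, which is the signal a training loop would use to update the MEG module.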

Meta’s team took the MEG signals and continuously aligned them to the deep representation of the images, which can then condition the generation of images at each instant. The idea was to identify the matching image from brain activity.

By using a deep learning-based system, the researchers were able to improve retrieval accuracy compared to linear models.
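Retrieval of this kind can be pictured as a nearest-neighbor search: the embedding predicted from brain activity is compared against the embeddings of candidate images, and the closest ones are returned. The sketch below assumes cosine similarity and a top-k accuracy metric; the function names and toy data are hypothetical and are not taken from the paper.

```python
import numpy as np

def retrieve_top_k(pred_emb, candidate_emb, k=5):
    # Rank candidate images by cosine similarity to each predicted embedding.
    pred = pred_emb / np.linalg.norm(pred_emb, axis=1, keepdims=True)
    cand = candidate_emb / np.linalg.norm(candidate_emb, axis=1, keepdims=True)
    sims = pred @ cand.T
    # Sort in descending order of similarity and keep the k best indices.
    return np.argsort(-sims, axis=1)[:, :k]

def top_k_accuracy(pred_emb, candidate_emb, true_idx, k=5):
    # Fraction of trials whose true image appears among the k retrieved ones.
    top_k = retrieve_top_k(pred_emb, candidate_emb, k)
    hits = (top_k == np.asarray(true_idx)[:, None]).any(axis=1)
    return float(hits.mean())

# Toy data: 20 candidate images; predictions for the first 10 are noisy copies.
rng = np.random.default_rng(0)
candidates = rng.normal(size=(20, 32))
predictions = candidates[:10] + 0.1 * rng.normal(size=(10, 32))
true_indices = np.arange(10)

print("top-1 accuracy:", top_k_accuracy(predictions, candidates, true_indices, k=1))
print("top-5 accuracy:", top_k_accuracy(predictions, candidates, true_indices, k=5))
```

A metric like this would let a deep model and a linear baseline be compared on the same candidate set, since both only need to output an embedding per trial.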

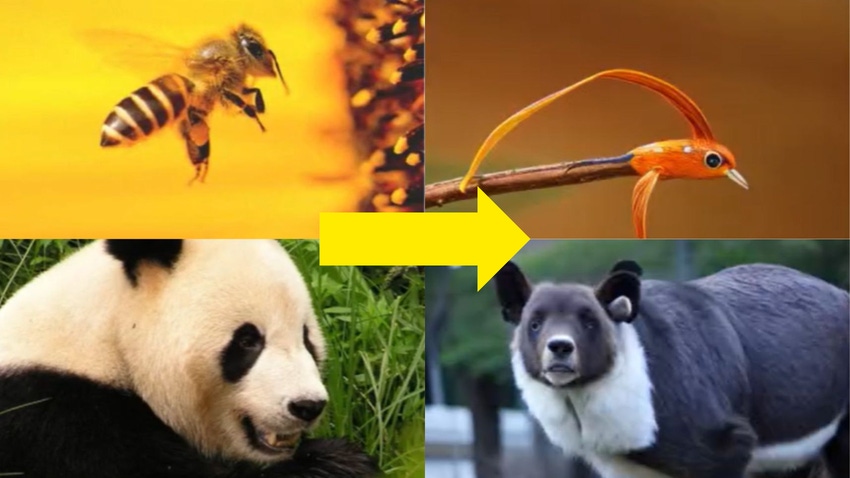

The resulting images are imperfect: the system often generated inaccurate low-level features, misplacing or misorienting some objects in the generated images. However, Meta’s researchers achieved their goal of generating a continuous flow of images decoded from brain activity in real time.

The team behind the MEG AI system believes it could be used to assist patients whose brain lesions make it a challenge to communicate – something that requires greater speed than an fMRI-based system could produce. "The present effort thus paves the way to achieve this long-awaited goal," the paper reads.

Using AI to interface with the human brain is a fledgling but growing area of research. The Elon Musk-led startup Neuralink is among the most famous companies working in this space, moving to its first human clinical trial in September.
