Nvidia Unveils New Chips, Services to Power Generative AI

DGX Cloud lets users access an AI supercomputer from a browser


At a Glance

- AI Business breaks down the biggest AI updates from Nvidia GTC 2023.

- H100 chips are coming to Oracle Cloud, AWS and Azure, and new platforms will power large language models.

- Also unveiled is a new AI supercomputer in Japan and generative AI partnerships with Adobe, AWS, Shutterstock and others.

AI took center stage at Nvidia GTC 2023, with CEO Jensen Huang’s keynote billed as a “defining moment in AI.”

Huang said AI is the energy source powering the “warp drive engine” that is accelerated computing.

“The impressive capabilities of generative AI created a sense of urgency for companies to reimagine their products and business models,” he said. “Industrial companies are racing to digitalize and reinvent into software-driven tech companies. To be the disruptor and not the disrupted.”

Chips: H100 updates and DGX Quantum

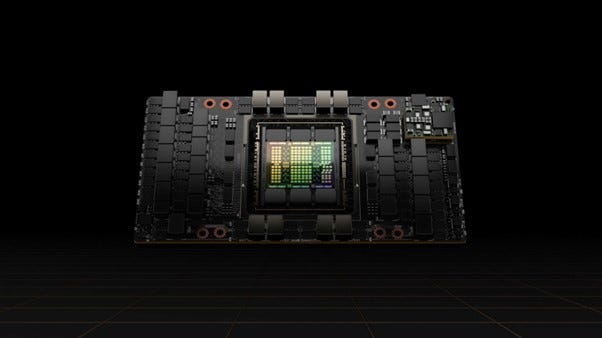

It would not be a GTC without a chip update. Among the biggest semiconductor news at this year’s event was that vendors including Oracle Cloud Infrastructure, AWS and Microsoft Azure are offering new products and services featuring the Nvidia H100 Tensor Core GPU, the company’s recently released AI hardware.

According to Nvidia, the H100 ‘Hopper’ Tensor Core GPUs are up to 4.5 times faster than the A100, the company’s previous-generation flagship AI chip.

Oracle will offer ‘limited availability’ of its Compute bare-metal GPU instances featuring H100s. AWS’s forthcoming EC2 UltraClusters of Amazon EC2 P5 instances are also set to feature H100s. This follows Microsoft Azure’s private preview announcement last week for its H100 virtual machine, ND H100 v5.

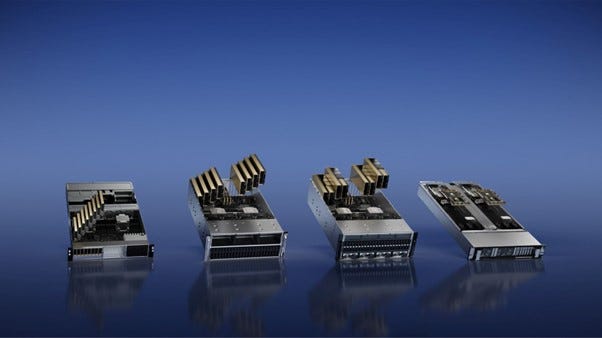

Sticking with hardware, Nvidia also launched four inference platforms for generative AI applications.

The platforms combine Nvidia software with processors including the L4 Tensor Core GPU and the H100 NVL GPU. Each is optimized for a specific workload: AI video, image generation, large language model deployment or recommender inference.

Nvidia L4 for AI Video is designed to deliver 120 times more AI-powered video performance than CPUs, while Grace Hopper for Recommendation Models is built with graph recommendation models in mind, as well as vector databases and graph neural networks.

According to Nvidia, the H100 NVL for Large Language Model Deployment is “ideal for deploying massive LLMs like ChatGPT at scale.” With 94GB of memory and Transformer Engine acceleration, the platform provides up to 12 times faster inference performance on GPT-3 than prior-generation A100s at data center scale.

The platforms’ software layer features the Nvidia AI Enterprise software suite, which includes TensorRT, a software development kit for high-performance deep learning inference, and Triton Inference Server, open-source inference-serving software that helps standardize model deployment.

Nvidia also unveiled the cuLitho software library, which can be used by chipmakers for design and manufacturing.

cuLitho is a library of optimized tools and algorithms for GPU-accelerated computational lithography, a key step in semiconductor manufacturing, which Nvidia says it speeds up by orders of magnitude over current CPU-based methods.

The software library is being integrated by chip foundry market leader TSMC, as well as electronic design automation company Synopsys.

Equipment maker ASML is also working closely with Nvidia on GPUs and cuLitho and plans to integrate GPU support into all of its computational lithography software products.

“The chip industry is the foundation of nearly every other industry in the world,” said CEO Huang. “With lithography at the limits of physics, Nvidia’s introduction of cuLitho and collaboration with our partners allows fabs to increase throughput, reduce their carbon footprint and set the foundation for 2nm and beyond.”

In more hardware news, Nvidia announced a GPU-accelerated quantum computing system, the Nvidia DGX Quantum. Read more about this potential world first in our sister publication, Enter Quantum.

Partnerships with Adobe, Getty, Microsoft

Every GTC sees Nvidia announce a string of new partnerships or expansions to existing ones. This year proved no different as the company announced a deal with Adobe to co-develop generative AI models.

Adobe and Nvidia’s generative AI innovations will focus on integrations into applications used by creators and marketers.

Adobe unveiled a slew of generative AI capabilities for its Creative Cloud suite last October, but its deal with Nvidia will see the pair jointly develop and market new generative AI models via products such as Adobe Photoshop, Premiere Pro and After Effects. The models will also be marketed via the new Nvidia Picasso cloud service for broad reach to third-party developers.

Nvidia also announced it is working with Shutterstock to create generative 3D assets from text prompts. The partnership will see models trained on Shutterstock assets using Nvidia Picasso, which convert prompts into high-fidelity 3D content, allowing users to create 3D models in minutes for industrial digital twins, entertainment and gaming.

The models will then be accessible via Shutterstock’s platform, as well as Turbosquid.com and Nvidia’s Omniverse platform.

Another company to have struck an agreement with Nvidia is Getty. The pair are working to develop two generative AI models for users to create custom images and videos from text prompts.

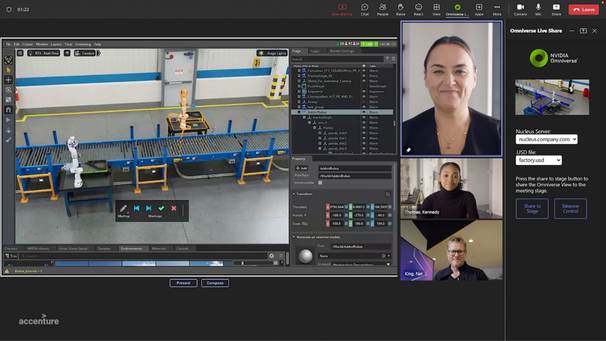

Nvidia revealed it is working with Microsoft as well to bring its industrial metaverse offerings to Azure users.

Azure will now host Nvidia Omniverse Cloud, a platform-as-a-service on which users can design and deploy industrial metaverse applications, as well as Nvidia DGX Cloud (more details below).

Additionally, the pair are working on productivity and collaboration, including connecting Microsoft Teams, OneDrive and SharePoint with Omniverse, allowing Teams users to collaborate on digital twins in Omniverse, for example.

Nvidia inked another partnership with a cloud provider: It is working with AWS on AI infrastructure for large language models.

The companies will jointly work on building out scalable AI infrastructure to train, model and develop generative AI applications. The joint work features Amazon Elastic Compute Cloud (Amazon EC2) P5 instances powered by Nvidia H100 Tensor Core GPUs to deliver up to 20 exaFLOPS of compute performance for training models.

And Google Cloud is to integrate Nvidia L4 Tensor Core GPUs to power generative AI applications. Google Cloud is the first cloud services provider to offer Nvidia’s L4 Tensor Core GPU. L4 GPUs will be available with optimized support on Vertex AI, which now supports building, tuning and deploying large generative AI models.

Telco giant AT&T is working with Nvidia to use the latter’s AI tech to process data, build digital avatars for employee support and optimize service-fleet routing.

Nvidia also announced a collaboration with medical device company Medtronic. The partnership will see Nvidia’s AI tech integrated into Medtronic’s GI Genius Intelligent Endoscopy Module, an AI-assisted colonoscopy tool that helps physicians detect polyps that can lead to colorectal cancer.

DGX Cloud: An AI supercomputer in your browser

Another unveiling at GTC 2023 was DGX Cloud, an AI supercomputing service that gives enterprises access to infrastructure and software needed to train models for generative AI applications.

DGX Cloud provides dedicated clusters of Nvidia DGX AI supercomputing, paired with the company’s AI software, making it possible for companies to access their own AI supercomputer from a web browser.

Enterprises can rent DGX Cloud instances starting at $37,000 per month. Users can manage and monitor workloads with Nvidia Base Command, so the right amount of infrastructure can be allocated to each job.

Each instance of DGX Cloud features eight Nvidia H100 or A100 80GB Tensor Core GPUs for a total of 640GB of GPU memory per node.
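The per-node memory figure is simple arithmetic: eight GPUs at 80GB each. A minimal sketch, assuming every GPU in the node is the 80GB variant described above; the function name is hypothetical and used only for illustration:

```python
def node_gpu_memory_gb(gpus_per_node: int, memory_per_gpu_gb: int) -> int:
    """Total GPU memory in one node (hypothetical helper, illustrative arithmetic only)."""
    return gpus_per_node * memory_per_gpu_gb


# Eight 80GB H100 or A100 Tensor Core GPUs per DGX Cloud node, as reported.
print(node_gpu_memory_gb(8, 80))  # 640
```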

Biotech company Amgen, property insurance cloud platform CCC and enterprise workflows company ServiceNow are among the initial users of DGX Cloud, as showcased during the GTC keynote.

“We are at the iPhone moment of AI. Startups are racing to build disruptive products and business models, and incumbents are looking to respond,” said Huang. “DGX Cloud gives customers instant access to Nvidia AI supercomputing in global-scale clouds.”

Tokyo-1: AI supercomputer for drug discovery

Nvidia announced it is working with Japanese general trading conglomerate Mitsui & Co. on Tokyo-1, an AI supercomputer.

Tokyo-1 will be powered by Nvidia hardware, including 16 Nvidia DGX H100 systems, each with eight Nvidia H100 Tensor Core GPUs.
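Multiplying the reported figures gives Tokyo-1’s total H100 count. A quick sketch, assuming the system and per-system GPU counts above are final; the constant names are hypothetical:

```python
# Reported Tokyo-1 configuration (constant names are illustrative).
DGX_H100_SYSTEMS = 16
GPUS_PER_SYSTEM = 8

# Total H100 Tensor Core GPUs across all DGX H100 systems.
total_h100_gpus = DGX_H100_SYSTEMS * GPUS_PER_SYSTEM
print(f"Tokyo-1 H100 GPUs: {total_h100_gpus}")  # Tokyo-1 H100 GPUs: 128
```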

The supercomputer will be used to build and train generative AI models for drug discovery and molecular dynamics simulations.

The AI supercomputer will be accessible to Japan’s pharmaceutical companies and startups, and Tokyo-1 users can leverage LLMs for chemistry, protein, DNA and RNA data formats through the Nvidia BioNeMo drug discovery software and service.

Xeureka, a Mitsui subsidiary focused on AI-powered drug discovery, will be operating Tokyo-1, which is expected to go online later this year.

“Tokyo-1 is designed to address some of the barriers to implementing data-driven, AI-accelerated drug discovery in Japan,” said Hiroki Makiguchi, product engineering manager in the science and technology division at Xeureka. "This initiative will uplevel the Japanese pharmaceutical industry with high-performance computing and unlock the potential of generative AI to discover new therapies."
