Vicuna Makers Build Small AI Model That Rivals GPT-4 in Power

Using LLM Decontaminator, a new tool to perfect datasets, the researchers achieved powerful results from a small LLM


At a Glance

- The researchers who built Vicuna unveil a new LLM that achieves GPT-4 results at just 13 billion parameters in size.

The team behind the Vicuna language model has developed a new, smaller AI model that competes with OpenAI's GPT-4 on benchmark performance.

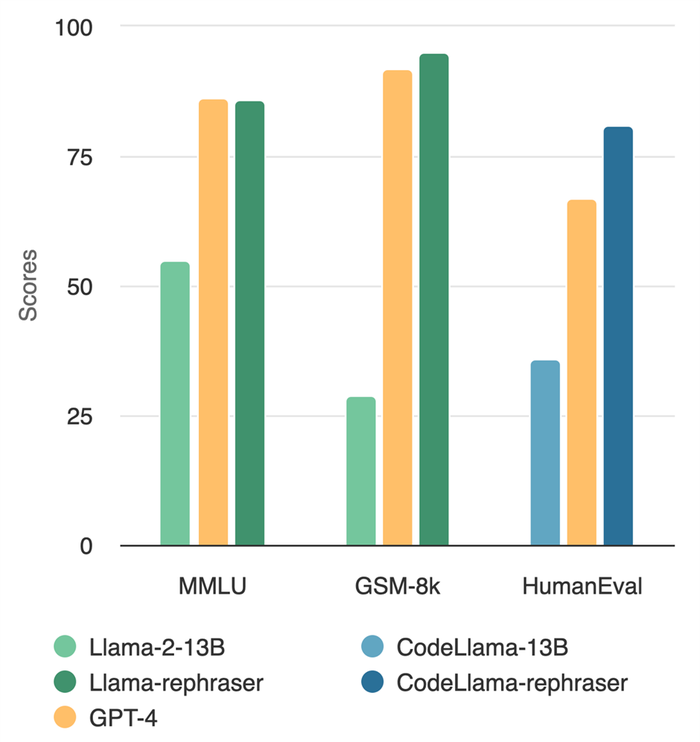

LMSYS Org unveiled Llama-rephraser, which has just 13 billion parameters yet managed to reach GPT-4-level performance on major benchmarks.

How? The team rephrased the benchmark test sets and trained the model on the paraphrased samples. Having seen reworded versions of the test questions, the model went on to post its high scores.

Changing the sentences in the dataset was meant to help the model grasp the meaning rather than simply memorize the text. The team then tested it with reworded prompts to see whether it truly understood the material, and Llama-rephraser returned correct responses.

The novel approach saw the 13-billion-parameter Llama-rephraser achieve GPT-4-level results on benchmarks including the popular MMLU, which covers basic math, computer science, law and other topics, as well as HumanEval, which tests a model’s code generation.

Is this a breakthrough?

The biggest achievement from Llama-rephraser’s development, according to the team behind it, is how it challenges the current understanding of data contamination in language models.

Contamination occurs when information from test sets leaks into training sets, which can lead to an overly optimistic estimate of a model's performance. That caveat matters most when a 13-billion-parameter model posts GPT-4-level results.
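To make the leakage described above concrete, here is a minimal Python sketch of one simple way to look for it: counting how many test samples share most of their word trigrams with some training sample. The trigram method, the function names, the 0.5 threshold and the toy data are all assumptions chosen for illustration; the article does not say which checks the researchers used. The toy run suggests why reworded test content can be hard to spot: a verbatim copy is flagged, while a paraphrase of the same question is not.

```python
def ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of word n-grams in a lowercased, whitespace-split text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(train_sample: str, test_sample: str, n: int = 3) -> float:
    """Share of the test sample's n-grams that also appear in the training sample."""
    test_grams = ngrams(test_sample, n)
    if not test_grams:
        return 0.0
    return len(test_grams & ngrams(train_sample, n)) / len(test_grams)


def contamination_rate(train_set: list[str], test_set: list[str],
                       n: int = 3, threshold: float = 0.5) -> float:
    """Fraction of test samples that overlap heavily with any training sample."""
    if not test_set:
        return 0.0
    flagged = sum(
        1 for test_sample in test_set
        if any(overlap_ratio(train_sample, test_sample, n) >= threshold
               for train_sample in train_set)
    )
    return flagged / len(test_set)


# Toy data (hypothetical): one test question leaks verbatim, then as a paraphrase.
test_set = [
    "write a function that returns the sum of two numbers",
    "what is the boiling point of water in celsius",
]
train_verbatim = [
    "write a function that returns the sum of two numbers",
    "explain how photosynthesis works",
]
train_rephrased = [
    "implement a routine giving the total of a pair of integers",
    "explain how photosynthesis works",
]

verbatim_rate = contamination_rate(train_verbatim, test_set)
rephrased_rate = contamination_rate(train_rephrased, test_set)
print(verbatim_rate, rephrased_rate)
```

In this toy run the verbatim copy flags half of the test set, while the paraphrased copy flags none of it, even though it asks for the same thing.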

While building Llama-rephraser, the researchers found that GPT-generated synthetic datasets such as CodeAlpaca can carry subtle contamination that is hard to detect.

How did they find it? With a new tool called LLM Decontaminator, which quantifies how many samples in a dataset are rephrased versions of benchmark items. Developers can use it to estimate how much rephrased test content a dataset contains and then remove those samples.
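The idea of measuring and removing rephrased samples can be sketched in a few lines of Python. This is an illustrative outline only, not LLM Decontaminator's actual code or API: the function names are hypothetical, `difflib` string similarity stands in for whatever similarity search a real tool would use, and `toy_judge` is a crude word-overlap placeholder for a stronger judge, such as a capable language model, deciding whether two texts ask the same thing.

```python
import re
from difflib import SequenceMatcher
from typing import Callable

# Small hand-picked stopword list, an assumption made for this toy example only.
STOPWORDS = {"what", "is", "the", "of", "a", "an", "do", "how", "name"}


def top_k_similar(test_item: str, train_set: list[str], k: int) -> list[int]:
    """Indices of the k training samples most similar to the benchmark item."""
    scores = [
        (SequenceMatcher(None, test_item.lower(), sample.lower()).ratio(), i)
        for i, sample in enumerate(train_set)
    ]
    scores.sort(reverse=True)
    return [i for _, i in scores[:k]]


def toy_judge(test_item: str, candidate: str) -> bool:
    """Placeholder judge: treats heavy content-word overlap as a rephrasing."""
    a = set(re.findall(r"[a-z]+", test_item.lower())) - STOPWORDS
    b = set(re.findall(r"[a-z]+", candidate.lower())) - STOPWORDS
    union = a | b
    return bool(union) and len(a & b) / len(union) >= 0.5


def find_rephrased(train_set: list[str], benchmark: list[str],
                   judge: Callable[[str, str], bool], k: int = 2) -> set[int]:
    """Indices of training samples judged to be rephrasings of a benchmark item."""
    flagged: set[int] = set()
    for item in benchmark:
        for i in top_k_similar(item, train_set, k):
            if judge(item, train_set[i]):
                flagged.add(i)
    return flagged


# Toy data (hypothetical).
benchmark = ["What is the capital of France?"]
train_set = [
    "Name the capital city of France.",
    "How do plants make food from sunlight?",
    "What is the capital of France?",
]

flagged = find_rephrased(train_set, benchmark, toy_judge)
rate = len(flagged) / len(train_set)  # estimated share of contaminated samples
cleaned = [s for i, s in enumerate(train_set) if i not in flagged]
print(sorted(flagged), cleaned)
```

Splitting the work into a cheap candidate search followed by a stricter pairwise check keeps the expensive judgment limited to a handful of pairs per benchmark item, which is one plausible way to structure such a tool.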

LLM Decontaminator is open source and available on GitHub. LMSYS Org has also published examples of how to remove rephrased samples from training data using the new tool.

A boon for businesses?

As businesses adopt AI solutions, the case for smaller models becomes clear: they keep running costs low. Some companies are even finding that running larger open source models can cost millions.

Access to a smaller model like Llama-rephraser that’s akin in power to GPT-4 could help businesses balance performance with cost.

Add to the mix a tool like LLM Decontaminator, which could allow businesses to perfect their existing systems. Current-generation models could then improve, and companies would not have to pay the higher development costs of building an entirely new model.

“We encourage the community to rethink benchmark and contamination in large language model context, and adopt stronger decontamination tools when evaluating large language models on public benchmarks,” an LMSYS Org blog post reads.
