Open Source vs. Closed Models: The True Cost of Running AI

Is open source really cheaper? Here's a cost breakdown.


At a Glance

- Meta's open source language models have startups questioning whether accessibility is worth potentially higher running costs.

Meta’s open source release of its powerful large language model Llama 2 earned plaudits from developers and researchers for its accessibility. Meta’s Llama models have gone on to form the basis for AI models such as Vicuna and Alpaca, as well as Meta’s own Llama 2 Long.

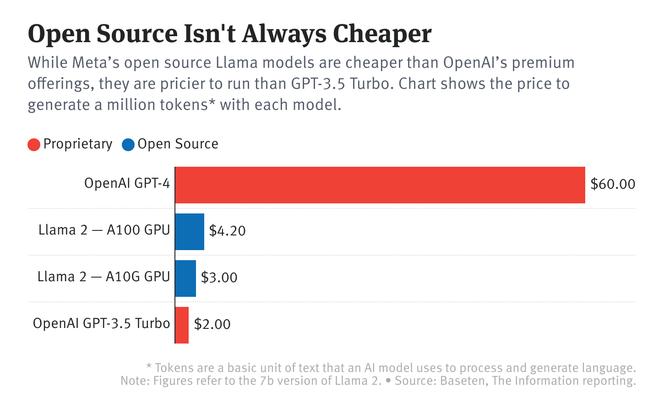

Operating the model, however, can cost more than using proprietary models. The Information reports that several startups are spending around 50% to 100% more to run Meta’s Llama 2 than rival OpenAI’s GPT-3.5 Turbo, although top-of-the-line GPT-4 remains far more expensive. Both OpenAI models underlie ChatGPT.

Credit: The Information

Sometimes the gap is far wider. The founders of chatbot startup Cypher ran tests using Llama 2 in August at a cost of $1,200. They repeated the same tests on GPT-3.5 Turbo, and it cost only $5.

AI Business has contacted Meta for comment.

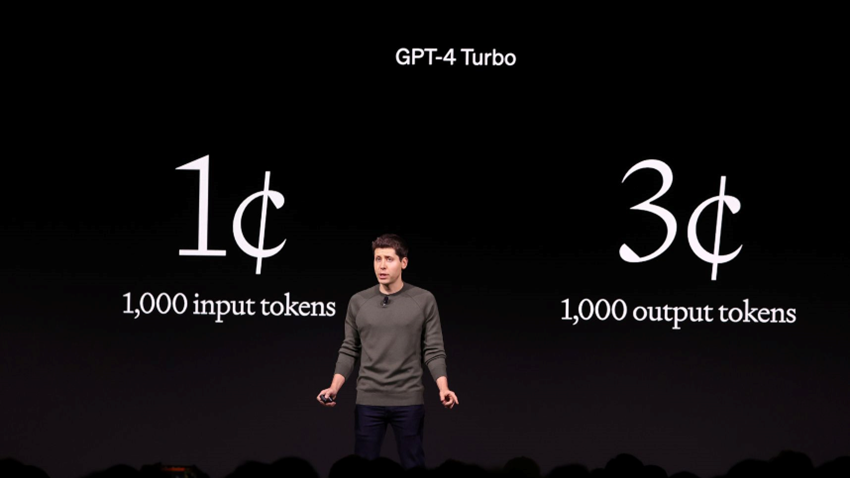

Last week, OpenAI unveiled a new, more powerful model that is even cheaper to run. At its DevDay event, OpenAI said the new GPT-4 Turbo costs a third as much as GPT-4 (8K model) for input tokens, at one cent per 1,000 input tokens. To get developers to try it, OpenAI gave each of its conference attendees $500 in free API credits.
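To make per-token pricing concrete, here is a small cost calculator. It assumes the input-token list prices announced at DevDay in November 2023 ($0.03 per 1,000 input tokens for GPT-4 8K and $0.01 per 1,000 for GPT-4 Turbo). Output tokens are priced separately and are left out, and the monthly workload is hypothetical.

```python
# Input-token list prices in US dollars per 1,000 tokens, as announced at
# OpenAI DevDay (November 2023). Assumption: check current pricing before use.
PRICE_PER_1K_INPUT = {
    "GPT-4 (8K)": 0.03,
    "GPT-4 Turbo": 0.01,
}


def input_cost(model: str, input_tokens: int) -> float:
    """Return the dollar cost of sending `input_tokens` prompt tokens to `model`."""
    return PRICE_PER_1K_INPUT[model] * input_tokens / 1000


# Hypothetical workload: 10 million prompt tokens per month.
monthly_tokens = 10_000_000
for name in PRICE_PER_1K_INPUT:
    print(f"{name}: ${input_cost(name, monthly_tokens):,.2f} per month")
```

Because cost scales linearly with tokens, the one-third price ratio holds at any volume.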

While Llama 2, unlike OpenAI’s closed systems, is free to access and tinker with, the sheer difference in running costs could turn companies away.

Credit: Justin Sullivan/Getty Images

Why open source can be more expensive

One reason lies in how companies use the specialized servers that power the models. According to The Information, OpenAI can bundle the millions of requests it receives from customers and send them to chips in batches that are processed in parallel, rather than one at a time.

In contrast, startups like Cypher that use open source models while renting specialized servers from cloud providers may not get enough customer queries to bundle them. As such, they do not benefit fully from the server chips’ capabilities the way OpenAI can, a Databricks executive told the news outlet.
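The batching effect can be illustrated with a toy cost model. Every figure here is a hypothetical assumption: a rented GPU at a flat $2 per hour that takes roughly the same two seconds to process a batch whether it holds one request or 32 (a simplification that only holds up to the hardware's limits). The point is simply that a fixed hourly cost gets split across however many requests arrive together.

```python
# Toy model of how batching amortizes a fixed GPU rental cost.
# All figures are hypothetical, chosen only for illustration.
GPU_COST_PER_HOUR = 2.00   # assumed rental price in dollars
SECONDS_PER_BATCH = 2.0    # assumed time to process one batch of any size
MAX_BATCH_SIZE = 32        # assumed hardware limit on batch size


def cost_per_request(batch_size: int) -> float:
    """Dollar cost per request when requests are processed `batch_size` at a time."""
    if not 1 <= batch_size <= MAX_BATCH_SIZE:
        raise ValueError(f"batch_size must be between 1 and {MAX_BATCH_SIZE}")
    batches_per_hour = 3600 / SECONDS_PER_BATCH
    requests_per_hour = batches_per_hour * batch_size
    return GPU_COST_PER_HOUR / requests_per_hour


# A busy provider can fill every batch; a small startup may only have one
# request waiting at a time, so it pays for capacity it cannot use.
for size in (1, 4, 32):
    print(f"batch of {size:>2}: ${cost_per_request(size):.6f} per request")
```

Under these assumptions, a full batch of 32 cuts the cost per request by a factor of 32 compared with serving requests one by one.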

To be sure, the cost of using open source LLMs can vary widely depending on the task, the number of requests they serve and how much they need to be customized for a particular product. Simple summarization can be relatively cheap, while complex tasks might need more expensive models.

Another possibility, said Bradley Shimmin, chief analyst for AI and data analytics at sister research firm Omdia, is that OpenAI is absorbing some of its costs: “We don’t know how much operating cost OpenAI is simply ‘eating’ right now. We have no visibility into the cost of running any of OpenAI’s models. I’m sure they’re benefiting from economies of scale that would far outgun those available to mom-and-pop enterprises seeking to host a seven-billion-parameter model on AWS or Azure.”

“However, from what we do know of model resource requirements and what we’re learning about model resource optimization, it is unlikely that these moves will overturn the current trend toward smaller model adoption in the enterprise, especially where issues like transparency, openness and security/privacy may far outweigh ease of use and even capability itself.”

Using a sledgehammer to crack a nut

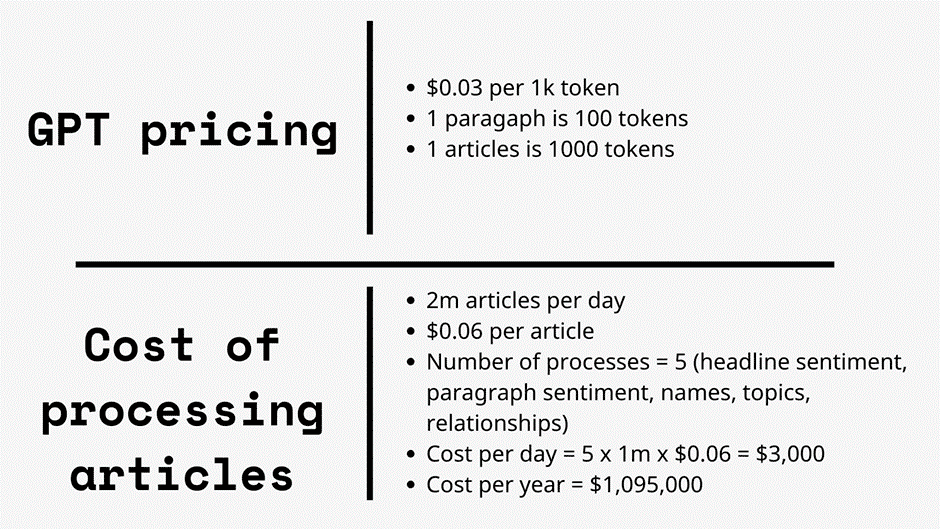

This week, Permutable.ai published a detailed analysis of its actual costs of using OpenAI’s technology: approximately $1 million a year, or 20 times more than using in-house models.

Credit: AI Business x Permutable.ai

The takeaway: OpenAI's pricier models are best reserved for tougher tasks. CEO Wilson Chan told AI Business that using ChatGPT for smaller tasks is like using a sledgehammer to crack a nut: effective, but exerting far more force than needed. The computational and financial resources that heavyweight models require may not match practical demands, leading to inefficient use of power and budget.

“The costs associated with deploying such powerful AI models for minor assignments can be significantly higher than employing tailored, more nuanced solutions. In essence, it's akin to utilizing a cutting-edge sports car for a stroll around the block,” he said. “This stark juxtaposition underscores the importance of evaluating the scale and nature of the task at hand when choosing the appropriate AI model, ensuring a harmonious balance between capability and cost-effectiveness.”

Cost comparison

The cost of running a large language model depends largely on its size. Llama 2 comes in several sizes, the biggest of which has 70 billion parameters. The larger the model, the more compute it needs to train and run, although larger models may also perform better.
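A rough way to see why size drives cost is to estimate how much memory a model's weights alone occupy: parameter count times bytes per parameter. The sketch below assumes 16-bit weights (2 bytes each) by default and ignores activations, the attention key-value cache and framework overhead, so real serving needs are higher.

```python
# Approximate memory needed just to hold model weights, in gigabytes (10**9 bytes).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, precision: str = "fp16") -> float:
    """Memory for the weights alone; excludes activations and KV cache."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Llama 2 sizes, using nominal parameter counts.
for billions in (7, 13, 70):
    gb = weight_memory_gb(billions * 1_000_000_000)
    print(f"Llama 2 {billions}B at fp16: ~{gb:.0f} GB")
```

At roughly 140 GB, the 70-billion-parameter model's weights do not fit on a single 80 GB A100 or H100 at 16-bit precision, which is one reason larger models need multiple GPUs.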

In emailed comments, Victor Botev, CTO and co-founder at Iris.ai, said a model’s memory and compute requirements can be reduced using methods such as quantization, which lowers the numerical precision of the model’s weights, and FlashAttention, an attention algorithm that reduces bottlenecks caused by moving data between different levels of GPU memory.

“You can also reduce the costs, sometimes significantly so. However, this risks degrading the quality of response, so the choice depends on your use.”
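Quantization can be sketched in a few lines. The example below uses simple symmetric per-tensor int8 quantization, only one of several schemes in use (production libraries typically use finer-grained per-channel or per-group scales). It maps each weight to an integer in [-127, 127] using a single scale factor, then reconstructs approximate values; the rounding error it introduces is the source of the quality trade-off Botev describes.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by q * scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Reconstruct approximate float weights from the int8 values."""
    return [v * scale for v in q]


weights = [0.42, -1.37, 0.05, 2.10, -0.88]  # hypothetical example weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
for w, r in zip(weights, restored):
    print(f"{w:+.4f} -> {r:+.4f} (error {abs(w - r):.4f})")
```

Each stored value now needs one byte instead of two at fp16, halving weight memory, while each reconstructed weight is off by at most half a scale step.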

Botev said that running models with fewer than 100 billion parameters on-premises requires at least one DGX box (Nvidia’s integrated hardware and software platform). Each DGX box costs around $200,000 at current market prices and comes with a three-year warranty. He calculates that the annual hardware cost alone of running something like Llama 2 on-premises would be about $65,000.
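Botev's hardware figure is roughly what you get by spreading the DGX purchase price evenly over its three-year term. The sketch assumes simple straight-line amortization and leaves out power, cooling, hosting and staff, none of which the article quantifies.

```python
DGX_PRICE_USD = 200_000  # approximate price quoted in the article
LIFETIME_YEARS = 3       # assumption: amortize over the three-year term


def annual_hardware_cost(price: float, years: int) -> float:
    """Straight-line amortization of a hardware purchase."""
    return price / years


print(f"${annual_hardware_cost(DGX_PRICE_USD, LIFETIME_YEARS):,.0f} per year")
```

This prints $66,667 per year, in line with the "about $65,000" estimate.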

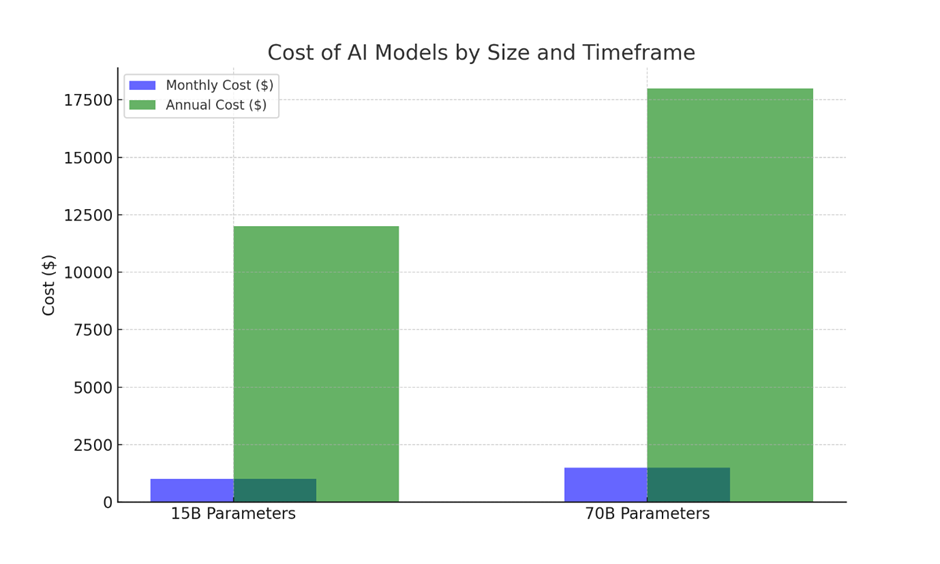

In the cloud, however, costs differ significantly with model size. For models below 15 billion parameters, he said, the cloud operating cost is around $1,000 a month, or $12,000 a year. For models of around 70 billion parameters, the cost rises to approximately $1,500 a month, or $18,000 a year.

Credit: AI Business via ChatGPT
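Annualizing the quoted cloud figures makes them easier to set against the roughly $65,000 a year Botev estimates for on-premises hardware. This sketch uses only the article's numbers; the two estimates may not cover exactly the same costs, so the comparison is indicative rather than like-for-like.

```python
ON_PREM_HARDWARE_PER_YEAR = 65_000  # Botev's on-premises estimate, hardware only

# Monthly cloud estimates quoted by Botev, keyed by model size band.
CLOUD_PER_MONTH = {
    "below 15B parameters": 1_000,
    "around 70B parameters": 1_500,
}


def annual_cloud_cost(monthly: int) -> int:
    """Annualize a monthly cloud bill."""
    return monthly * 12


for band, monthly in CLOUD_PER_MONTH.items():
    annual = annual_cloud_cost(monthly)
    share = annual / ON_PREM_HARDWARE_PER_YEAR
    print(f"{band}: ${annual:,} per year, {share:.0%} of the on-prem hardware figure")
```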

“Unfortunately, models out of the box rarely provide the quality that companies are looking for, which means that we need to apply different tuning techniques to user-facing applications. Prompt tuning is the cheapest because it doesn’t affect any encoded knowledge, with costs varying from $10 to $1,000,” Botev said. “Instruction tuning is most useful for domains where the model needs to understand specific instructions but can still use its existing training knowledge to respond. This domain adaptation costs between $100 and $10,000.”

“Finally, fine-tuning is the most expensive process. It changes some fundamental aspects of a model: its learned knowledge, its expressive reasoning capabilities, and so on. These costs can be unpredictable and depend on the size of the model, but usually cost around $100,000 for smaller models between one and five billion parameters, and millions of dollars for larger models.”
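One way to see why prompt tuning sits at the cheap end is to count trainable parameters. In prompt tuning, the model's weights stay frozen and only a small set of "soft prompt" embedding vectors is learned, whereas full fine-tuning updates every weight. The sketch assumes a nominal 7-billion-parameter model with a hidden size of 4,096 (the width of Llama 2 7B) and a hypothetical soft prompt of 20 tokens.

```python
HIDDEN_SIZE = 4096            # embedding width of Llama 2 7B
TOTAL_PARAMS = 7_000_000_000  # nominal parameter count
SOFT_PROMPT_TOKENS = 20       # hypothetical soft prompt length


def prompt_tuning_params(num_tokens: int, hidden_size: int) -> int:
    """Trainable parameters when only the soft prompt embeddings are learned."""
    return num_tokens * hidden_size


trainable = prompt_tuning_params(SOFT_PROMPT_TOKENS, HIDDEN_SIZE)
print(f"prompt tuning trains {trainable:,} parameters")
print(f"full fine-tuning trains ~{TOTAL_PARAMS:,} parameters")
print(f"ratio: about 1 to {TOTAL_PARAMS // trainable:,}")
```

Training tens of thousands of values instead of billions is what keeps prompt tuning cheap, and leaving the original weights untouched is why it does not alter the model's encoded knowledge.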

Here come the small models

Enter the idea of using smaller, more cost-effective models for specific use cases. There are already smaller variants of Llama 2, at seven billion and 13 billion parameters. But new systems are emerging at pace. Microsoft’s Phi-1.5, which now has multimodal capabilities, has a minuscule 1.3 billion parameters. Other popular small models include Pythia-1b from EleutherAI and MPT-1b from the Databricks-owned MosaicML.

All these systems are open source, but as Omdia chief analyst Lian Jye Su puts it, “Open source is never cheap to begin with, especially when forking is introduced to the vanilla model for enhancement or domain-specific capability.”

Moreover, “all OpenAI models are inherently proprietary. The idea of sharing their profit with OpenAI via licensing fee or royalty may not sit well with some businesses that are launching gen AI products and prefer not to. In that case, the model cost probably is less of a priority,” the analyst added.

Anurag Gurtu, CPO at StrikeReady, said that startups should balance model costs with the potential return on investment.

“AI models can drive innovation, create personalized user experiences, and optimize operations. By strategically integrating AI, startups can gain a competitive edge, which might justify the initial investment,” he said. “As the AI field advances, we're seeing more efficient models and cost-effective solutions emerge, which will likely make AI more accessible to startups and developers in the future.”

Access to compute

Another major factor in running costs is access to hardware. AI is hot right now, and companies are looking to adopt or deploy AI in some way, which requires access to compute.

But demand is outstripping supply. Market leader Nvidia has seen a huge increase in demand for its H100 and A100 GPUs, delivering some 900 tons of its flagship GPUs in Q2 alone. It also just unveiled a higher-memory, faster version of the H100, appropriately called the H200, as rivals AMD and Intel prepare to compete with their own new AI chips.

Without steady access to compute, companies will have to pay more to meet their needs. Options in the ‘GPUs for rent’ market include Hugging Face, NexGen Cloud and, most recently, AWS. But running a model like Llama 2 is hardware-intensive and requires powerful chips.

In emailed comments, Tara Waters, chief digital officer and partner at Ashurst, said that consumption-based pricing for public models has forced some startups to curb use by potential customers looking to trial and pilot before they buy.

“It can also make customer pricing a more difficult conversation, if it's not possible to offer price certainty. The availability of open source models could be seen as a panacea to this problem, although the new challenge of needing to have the necessary infrastructure to host a model arises,” she said.

“We have seen a rise in more creative strategies being employed to help manage them: for example, looking to apply model weightings without hosting the model itself, as well as development of mid-layer solutions, to reduce unnecessary consumption for similar and repeat queries.”
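A mid-layer of this kind can be sketched as a cache that normalizes incoming queries and answers exact repeats without calling the model again. In this minimal sketch, `call_model` is a hypothetical stand-in for whatever paid API or hosted model a company uses, and the normalization (lowercasing and collapsing whitespace) is an assumption. Catching merely similar queries, rather than identical ones, would need something more, such as embedding-based matching.

```python
from typing import Callable


class QueryCache:
    """Hypothetical mid-layer that avoids paying twice for repeat queries."""

    def __init__(self, call_model: Callable[[str], str]):
        self._call_model = call_model
        self._cache: dict[str, str] = {}
        self.billable_calls = 0

    @staticmethod
    def _normalize(query: str) -> str:
        # Treat queries that differ only in case or spacing as the same query.
        return " ".join(query.lower().split())

    def ask(self, query: str) -> str:
        key = self._normalize(query)
        if key not in self._cache:
            # Only cache misses reach the (paid) model.
            self.billable_calls += 1
            self._cache[key] = self._call_model(query)
        return self._cache[key]


def call_model(query: str) -> str:
    # Hypothetical stand-in for a paid model call.
    return f"answer to: {query}"


cache = QueryCache(call_model)
cache.ask("What is our refund policy?")
cache.ask("what is our   refund policy?")
cache.ask("How do I reset my password?")
print(f"billable calls: {cache.billable_calls}")
```

Three queries result in two billable calls, because the second query normalizes to the same key as the first.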
