Survey: Gap Across Roles in Responsible AI Involvement

Ironically, sustainability managers are among the least involved


At a Glance

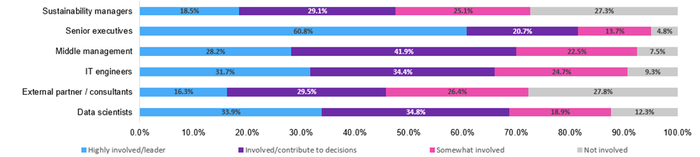

- An AI Business survey showed that 61% of senior executives are "highly involved" in responsible AI at their companies.

- Surprisingly, sustainability managers are among the least involved groups: only 18.5% are highly involved.

- Also, nearly 40% of companies do not have a process for ensuring responsible AI plans are used throughout the supply chain.

In a promising shift towards ethical AI practices, an AI Business survey showed that 61% of senior executives across industries globally are “highly involved” in responsible AI at their companies.

This signals that responsible AI is now at the forefront of corporate agendas, marking a paradigm shift in how AI is being integrated into business strategies and ethical considerations.

Data scientists and IT engineers are the next two highest-ranking cohorts, with 34% and 31.7% highly involved, respectively.

Surprisingly, however, sustainability managers are among the least involved groups: only 18.5% are highly involved, just above external partners/consultants, who came in last.

The flip side confirms the finding: sustainability managers have the second-highest share with no involvement, at 27.3%, behind only external partners/consultants at 27.8%.

The disparity in engagement levels between senior executives and sustainability managers perhaps indicates a need for better communication and cross-disciplinary collaboration. Another reason could be that sustainability is just one part of responsible AI and companies should expand the job description beyond environmental and social issues.

Among the survey respondents, 63.4% are directly involved in strategy decisions for AI responsibility, while 27.3% have some influence on them. Only 9.3% have little or no involvement in responsible AI decisions.

The survey was conducted in October and had 227 respondents, with 43.2% in North America, 25.1% in Western Europe and 9% in Africa. Half of respondents work in corporate management or R&D and technical strategy.

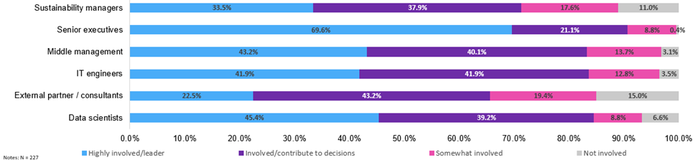

What respondents want to see

When asked what level of engagement they would like to see in one year, respondents pointed to even higher involvement: 69.6% for senior executives, 45.4% for data scientists and 41.9% for IT engineers.

Notably, sustainability managers did not fare as well: respondents wanted to see 33.5% of sustainability managers highly involved in ethical AI, the second-lowest showing. Last place again went to external partners/consultants.

Ethical AI in the supply chain

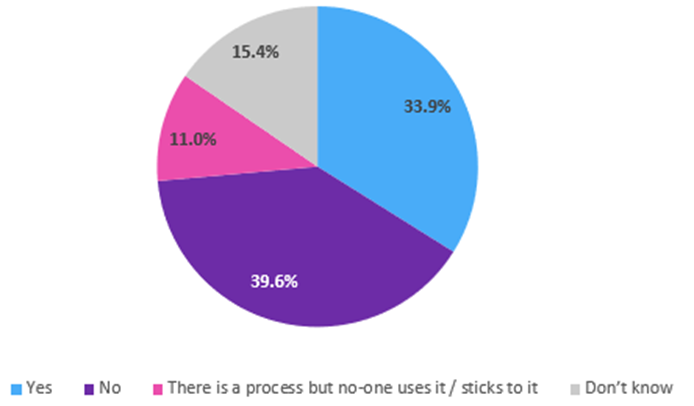

The survey also revealed another gap: while nearly 90% of companies are implementing, actively assessing or have deployed responsible AI strategies (see part one in this series), the same cannot be said of their supply chain.

The survey showed that nearly 40% of companies do not have a process for ensuring responsible AI plans are used throughout the supply chain. That share just edges out the 34% that do have such a process. Tellingly, another 11% have a process but do not apply it.

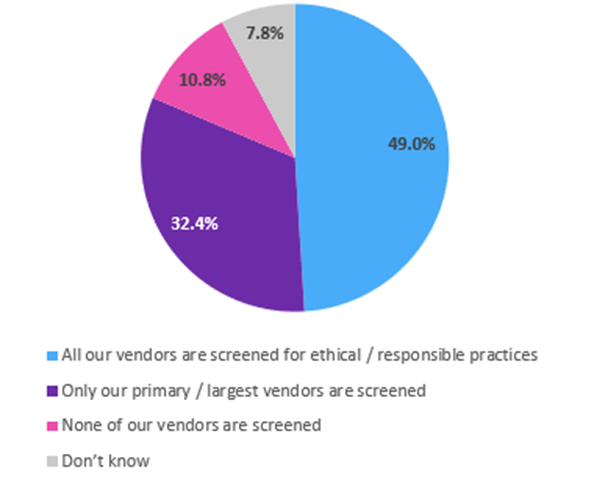

Among those who do have a screening process, half say that all their vendors are vetted for responsible AI, while a third screen only their largest or primary vendors. Another 11% say they do not screen vendors.

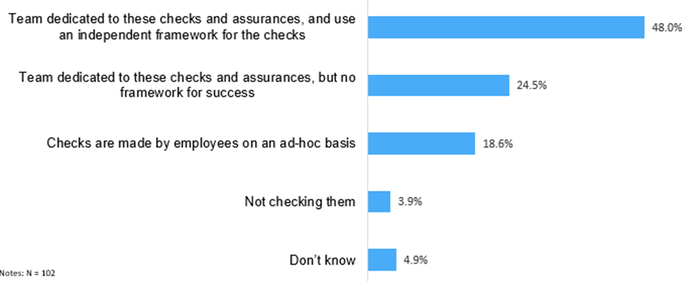

As for how they check their suppliers’ declarations, half said they have a team dedicated to these checks and assurances that also uses an independent framework for the checks. Another quarter of respondents said they have a dedicated team but no framework for success. About 19% said employees make ad-hoc checks.

The dual gaps in responsible AI – within the organization and with vendors – signal that work still needs to be done to make ethical AI a priority for all.

Pragmatic cynicism

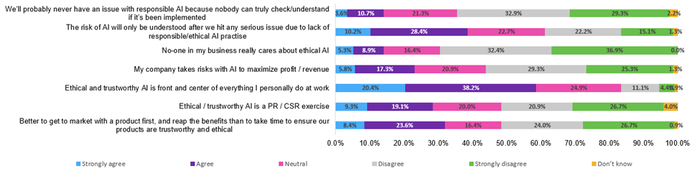

At least, belief in responsible AI appears to be strong. Among respondents, nearly 60% said they “agree” or “strongly agree” that “ethical and trustworthy AI is front and center of everything I personally do at work.”

However, there is a bit of pragmatic cynicism as well: nearly 40% believe that “the risk of AI will only be understood after we hit any serious issue due to lack of responsible/ethical AI practice.” This suggests they do not believe their companies will pursue ethical AI for its own sake, but only if it affects the bottom line. This was the second most common answer.

Coming in third is the group that believes it is “better to get to market with a product first, and reap the benefits than to take time to ensure our products are trustworthy and ethical.” This garnered a third of the votes.

Whether companies practice ethical AI because they believe it is the right thing to do or to mitigate financial and legal risks, at least they are paying attention to the issue. They will soon be forced to do it anyway once governments around the world pass their own AI regulations.
