Survey: Majority of Companies Embrace Responsible AI Amid Global Regulatory Discussions

Nearly nine out of 10 companies are actively incorporating responsible AI in their practices, according to a new AI Business survey.

At a Glance

- Nearly 90% of companies are implementing or assessing responsible AI strategies, or have already deployed them, an AI Business survey showed.

- Respondents ranked predictive analytics, customer experience and chatbots as the most important applications for responsible AI.

- Nearly half say their companies' approach to AI is "measured, thoughtful" and "moving at the right speed."

A new survey by AI Business reveals that nearly 90% of companies are implementing or actively assessing responsible AI strategies, or have already deployed them. The findings come at a critical time, as governments worldwide are discussing and formulating regulatory frameworks for AI.

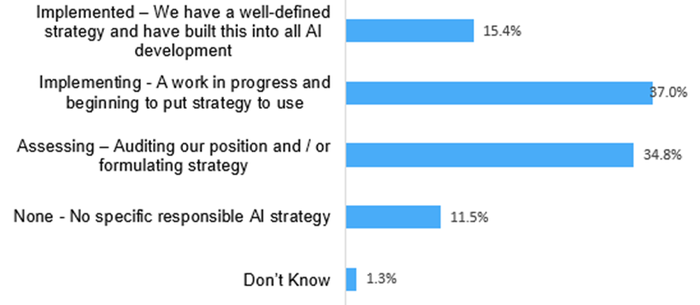

The survey shows that 37% are implementing their responsible AI strategies while 34.8% are assessing their positions and formulating a strategy. Meanwhile, 15.4% have already implemented a “well-defined” strategy and built it into all AI development. Together, these three groups account for 87.2% of respondents.

Governments around the world have been developing regulations to rein in AI harms, with the EU’s AI Act being the most advanced and comprehensive. It is expected to pass into law in early- to mid-2024. U.S. President Biden has issued an executive order that opens Big Tech’s AI models to public red-teaming. And U.K. Prime Minister Rishi Sunak just wrapped up the first global AI Safety Summit at historic Bletchley Park.

However, the private sector is not waiting for regulations to come down the pike from governments; companies are already stepping up. The speed at which businesses have pivoted is remarkable as well, given that it was only a year ago that generative AI was thrust into the public consciousness with the release of OpenAI’s ChatGPT, despite AI having been around for decades.

Today, the astonishing capabilities of large language models and the chatbots built atop them have also brought to the forefront their attendant risks – hallucinations, biases, copyright infringement, privacy breaches and cybersecurity vulnerabilities – and underscored the urgent need for responsible AI practices.

Private sector surges ahead

The benefits of ethical AI range from enhancing customer trust to mitigating regulatory and legal risks. To that end, companies are keenly aware of the ethical implications of AI and are taking proactive steps to ensure their AI systems are transparent, fair and accountable.

Diving deeper into their practices, 72% said their companies have a data management and governance program for AI that is in place, under construction or planned. Only a quarter said their companies do not have data management and governance for AI, while the remainder of respondents did not know their companies’ state of affairs.

As for the companies’ approach to AI, 45.8% of respondents said it was “measured, thoughtful” and “moving at the right speed.” However, the second largest cohort, at 26%, said their companies were “moving ahead quicker than we can ensure implementation of responsible AI.” Perhaps most concerning is that 19% have no programs to ensure responsible AI.

The survey was conducted in October and had 227 respondents, with 43.2% in North America, 25.1% in Western Europe and 9% in Africa. Slightly over half of the respondents worked in companies with fewer than 100 employees while the second-largest cohort, at 12.8%, worked in companies with more than 10,000. Half of respondents worked in corporate management or R&D and technical strategy.

What success looks like

Despite the apparent enthusiasm for responsible AI, the path forward is fraught with challenges. Key among these is the lack of standardized definitions and benchmarks for what constitutes responsible AI. Additionally, the dynamic nature of AI technology means that regulations and corporate policies need to be continually updated.

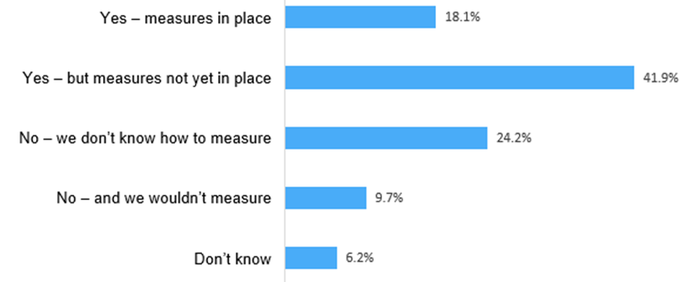

That is why companies need to monitor their ethical AI practices. Here, the companies surveyed have a solid yet weaker showing: 60% said they measure whether responsible AI has been implemented correctly (lower than the nearly 90% that are implementing or have deployed ethical AI). That 60% breaks down into only 18.1% that have these measures in place and 41.9% that are still putting them in place.

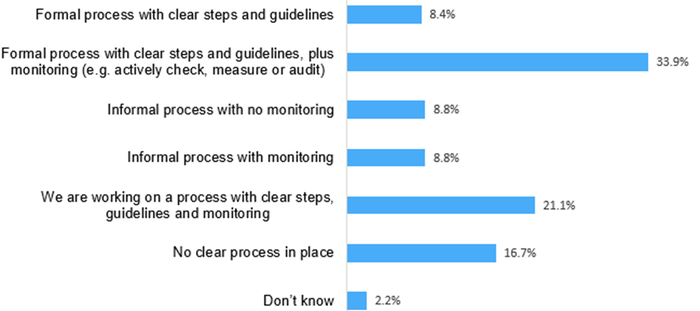

For 33.9% of respondents, these steps to measure ethical AI are part of a formal process with “clear steps and guidelines, plus monitoring,” meaning a team actively checks, measures or audits responsible AI practices. The second-largest group, at 21.1%, consists of those whose companies are still working on a process that has clear steps, guidelines and monitoring. Nearly a fifth have no process in place or do not know.

As for when companies start considering responsible AI issues, 40% said they do so before the technology is even designed or implemented while 41.4% said they do it during the design or implementation stage. Only 10.6% said they consider ethical AI issues after implementation.

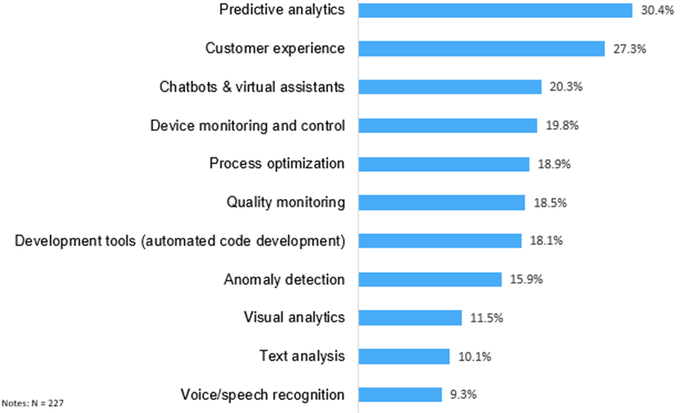

Respondents also voted on the most important applications to have responsible AI built into them: predictive analytics (30.4%), followed by customer experience (27.3%) and chatbots and virtual assistants (20.3%). At the bottom are voice/speech recognition, text analysis and visual analytics. (Respondents selected two answers each.)

The survey’s findings underscore a critical juncture in the field of AI. As companies increasingly align with responsible AI practices, and governments move closer to enacting AI regulations, the stage is set for a new era in AI development: one in which ethical considerations are not an afterthought but a fundamental aspect of AI innovation.
