Cerebras Builds 'Fastest' Supercomputer Network in the World

The Condor Galaxy system of nine supercomputers gives market leader Nvidia competition in AI training and compute

At a Glance

- Cerebras unveils Condor Galaxy, a network of nine AI supercomputers it bills as the fastest ever built.

- Condor Galaxy will expand available AI training capacity at a time when market leader Nvidia has a backlog.

- Cerebras CEO: Setting up a generative AI model takes "minutes, not months and can be done by a single person."

Cerebras Systems, the AI hardware startup known for continually raising the bar on computing, has unveiled Condor Galaxy, a network of supercomputers it said will be the fastest ever built.

Named after the largest galaxy in the universe, Condor Galaxy consists of a system of nine linked supercomputers that the company said promises to “significantly reduce” AI model training times.

The supercomputing system will have a total expected capacity of 36 exaFLOPs, or over 20 times greater than the current fastest supercomputer in the world, HPE’s Frontier at Oak Ridge National Laboratory.

This additional capacity comes as cloud providers look for alternatives to Nvidia, which dominates the AI chip and computing market but has a backlog to fill, according to Reuters.

Cerebras has sold the first supercomputer for $100 million to G42, a technology holding company based in the UAE that owns cloud computing and data center businesses, a company spokesperson told AI Business. The two partners will offer access to this supercomputer as a cloud service.

"There will be some excess capacity that we hope to wholesale with Cerebras to customers in the open-source AI community from many places around the world, especially in the U.S. ecosystem,” G42 Cloud CEO Talal Al Kaissi told Reuters.

This is the first time Cerebras has partnered not only to build a dedicated AI supercomputer but also to manage and operate it.

The first supercomputer

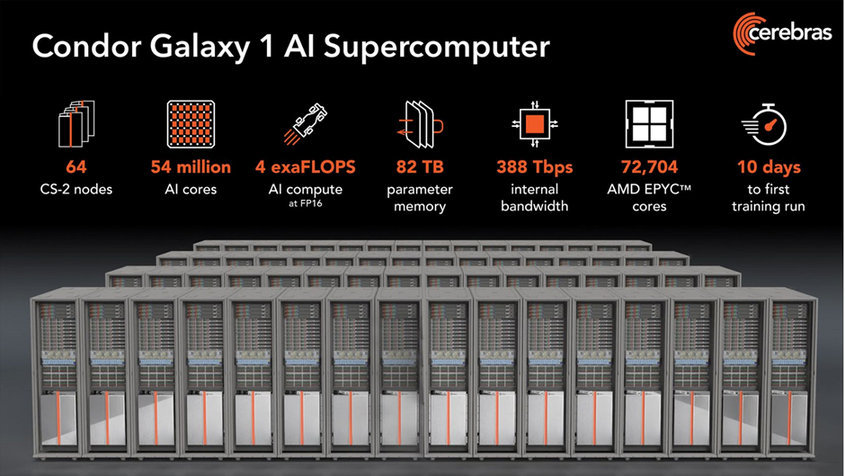

The first of the nine supercomputers has been deployed at the Colovore data center in Santa Clara, California. Called CG-1 for Condor Galaxy 1, it boasts 4 exaFLOPs and 54 million cores. In comparison, HPE’s Frontier has nearly nine million cores and 1.7 peak exaFLOPs, according to the TOP500 list.

Cerebras is building two more supercomputers with 4 exaFLOPs each, in Austin, Texas and Asheville, N.C., which are scheduled to be operational in the first half of 2024, the Cerebras spokesperson said. Six other supercomputers whose locations were not disclosed will be built in 2024.

CG-1 “dramatically reduces AI training timelines while eliminating the pain of distributed compute,” said Cerebras CEO Andrew Feldman in a statement. He contends that many cloud companies spend billions to build "massive GPU clusters" that are "extremely difficult to use."

"Distributing a single model over thousands of tiny GPUs takes months of time from dozens of people with rare expertise," Feldman added. "CG-1 eliminates this challenge."

With CG-1, setting up generative AI models takes "minutes, not months and can be done by a single person," Feldman said.

Access to CG-1 is available now.

Cerebras CEO Andrew Feldman stands atop Condor Galaxy systems awaiting delivery. Credit: Cerebras

Under the hood

CG-1 alone has 64 of Cerebras’ CS-2 systems, which are based on the company’s plate-sized WSE-2 AI chips, each containing 2.6 trillion transistors and 850,000 AI cores. Its 54 million Cerebras cores are fed by some 72,700 AMD EPYC cores, and the system has 388 terabits per second of fabric bandwidth.
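The headline figures can be cross-checked with simple arithmetic. The short Python sketch below uses only numbers reported in this article; the function names are illustrative, and the assumption that all nine supercomputers match CG-1's 4 exaFLOPs is ours (the article states it only for the first three). The article also does not say whether the Cerebras and Frontier figures are measured at the same numeric precision, so the ratios are only as comparable as the reported numbers.

```python
# Figures as reported in the article.
CS2_SYSTEMS_IN_CG1 = 64           # CS-2 systems in CG-1
CORES_PER_WSE2 = 850_000          # AI cores per WSE-2 chip
CG1_EXAFLOPS = 4.0                # CG-1 capacity
SUPERCOMPUTERS_PLANNED = 9        # size of the full Condor Galaxy network
FRONTIER_PEAK_EXAFLOPS = 1.7      # Frontier peak, per the article


def cg1_core_count() -> int:
    """Total Cerebras cores in CG-1: one WSE-2 chip per CS-2 system."""
    return CS2_SYSTEMS_IN_CG1 * CORES_PER_WSE2


def network_exaflops_if_uniform() -> float:
    """Network capacity, ASSUMING every supercomputer matches CG-1."""
    return SUPERCOMPUTERS_PLANNED * CG1_EXAFLOPS


def ratio_to_frontier(exaflops: float) -> float:
    """How many times Frontier's reported peak a given capacity is."""
    return exaflops / FRONTIER_PEAK_EXAFLOPS


if __name__ == "__main__":
    print(f"CG-1 cores: {cg1_core_count():,}")
    print(f"Network capacity: {network_exaflops_if_uniform():.0f} exaFLOPs")
    print(f"Network vs. Frontier: {ratio_to_frontier(network_exaflops_if_uniform()):.1f}x")
    print(f"CG-1 vs. Frontier: {ratio_to_frontier(CG1_EXAFLOPS):.2f}x")
```

Under these assumptions, 64 systems of 850,000 cores each come to 54.4 million cores, in line with the reported 54 million, and nine supercomputers at 4 exaFLOPs each give the 36 exaFLOPs total, a little over 21 times Frontier's reported peak.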

CG-1 alone can train AI models of up to 600 billion parameters, and the full network will add eight more supercomputers. CG-1 also supports training on long sequence lengths, up to 50,000 tokens out of the box, without any special software libraries.

All programming for CG-1 is done entirely without distributed programming languages, meaning large AI models can be run without weeks spent distributing work over thousands of GPUs.

Credit: Rebecca Lewington/ Cerebras Systems

AI training takes off

As companies scramble to build or customize their own AI models, they will need capacity to train them, and vendors are looking to serve this fast-growing market.

Bedrock from Amazon offers customers access to preexisting models from Anthropic and Stability AI as well as developer tools to build and scale their own generative AI applications.

In June, HPE entered the training platform market with GreenLake for Large Language Models.

Other vendors include Microsoft Azure, Google Cloud, SambaNova, Graphcore and the now-Intel-owned Habana.
