Nvidia Shipped 900 Tons of AI Chips in Q2 – a Chunk to One Client

An “acceleration” of demand for Nvidia's AI chips is raising average server prices, according to Omdia research

At a Glance

- Nvidia shipped 900 tons of its new H100 GPUs in Q2, according to analysts at Omdia.

- The shipments reflect surging demand for Nvidia AI chips, with a single customer, likely Meta, accounting for 22% of quarterly revenue.

- In five years, analysts see large language models becoming as numerous as consumer-facing apps.

Chipmaking giant Nvidia shipped a whopping 900 tons of its flagship H100 GPU in the second quarter due to a “bold acceleration of demand,” according to a new report from sister research firm Omdia.

Its latest “Cloud and Data Center Market Snapshot” report said “an avalanche” of GPUs is arriving at the data centers of hyperscalers, to the detriment of server shipments. Deliveries of servers are down by one million units, or 17%, from the prior year. In July, shipments fell for the first time in 15 years.

Instead, Omdia reports a “bold acceleration” towards AI GPUs, with the price tag of AI hardware driving up the average price of servers by over 30% year-over-year. This “dramatic shift” in the server mix towards highly configured servers led Omdia to forecast a market size of $114 billion, up 8% year-over-year.

Nvidia is the company set to benefit the most, with Omdia reporting that 22% of its $13.5 billion second-quarter revenue was driven by a single customer, likely Meta. Omdia also expects Microsoft, Google and “a few other top 10 cloud service providers” to become “priority clients” of Nvidia.
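The figures above imply some simple arithmetic. The sketch below uses only numbers quoted in this article to estimate the single customer's revenue contribution and the prior-year market size implied by the 8% growth forecast. The variable names are illustrative, and the derived values are back-of-the-envelope estimates, not figures reported by Omdia or Nvidia.

```python
# Back-of-the-envelope arithmetic from figures quoted in the article.
# Derived values are estimates, not numbers reported by Omdia or Nvidia.

q2_revenue_bn = 13.5          # Nvidia second-quarter revenue, in $ billions
single_customer_share = 0.22  # share of revenue attributed to one customer

# Revenue attributable to the single customer (likely Meta)
single_customer_revenue_bn = q2_revenue_bn * single_customer_share

forecast_market_bn = 114.0    # forecast server market size, in $ billions
yoy_growth = 0.08             # forecast year-over-year growth

# Prior-year market size implied by the forecast and its growth rate
implied_prior_year_market_bn = forecast_market_bn / (1 + yoy_growth)

print(f"Single-customer revenue: ~${single_customer_revenue_bn:.2f} billion")
print(f"Implied prior-year market: ~${implied_prior_year_market_bn:.1f} billion")
```

On these inputs, the single customer accounts for roughly $3 billion of the quarter's revenue, and the forecast implies a prior-year market of about $105.6 billion.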

Demand for AI hardware is expected to continue until the first half of 2024, especially as chip foundry TSMC increases the packaging capacity required to build the H100.

Demand for AI hardware has also led a vendor and even the U.S. government to ‘rent out’ their existing infrastructure, for example through discounted access to the Perlmutter supercomputer or Hugging Face’s new Training Cluster as a Service.

However, demand for AI hardware does not mean AI usage has likewise seen rapid growth. "The rapid investment in AI training capabilities that we're currently experiencing should not be confused for rapid adoption," Omdia's report read.

It's all about efficient, domain-specific AI

Omdia expects the AI wave to continue until 2027. It further forecasts that, in five years, large language models will be as numerous as consumer-facing apps.

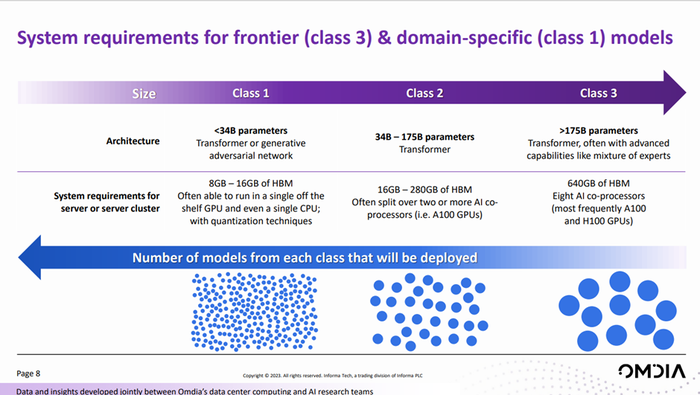

AI will turn to more specialized uses, with broader models taking a back seat. Industry-specific models akin to Med-PaLM, BioGPT, BioBERT, and PubMedBERT will be the focus, but they will be built by fine-tuning broad, multimodal frontier models such as ChatGPT or Claude.

However, the need for AI servers should decrease over time even if AI adoption by enterprises and other organizations increases. That is because domain-specific AI models have “significantly fewer” parameters, dataset size, tokens and epochs, according to the research firm.
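To see why fewer parameters, tokens and epochs translate into less hardware, consider a common rule of thumb (an assumption here, not something taken from Omdia's report): training a transformer model costs roughly 6 floating-point operations per parameter per token processed. The sketch below applies that rule to two hypothetical training runs. Every model size and token count in it is made up for illustration and does not describe any real model.

```python
def training_flops(params: float, tokens: float, epochs: int = 1) -> float:
    """Approximate training compute with the ~6 * N * D rule of thumb.

    params: number of model parameters (N)
    tokens: number of tokens in the training set (D)
    epochs: number of passes over the training set
    """
    return 6.0 * params * tokens * epochs


# Hypothetical broad model: 100 billion parameters, 1 trillion tokens, 1 epoch
broad_flops = training_flops(params=100e9, tokens=1e12, epochs=1)

# Hypothetical domain-specific model: 1 billion parameters,
# 10 billion tokens, 3 epochs
domain_flops = training_flops(params=1e9, tokens=10e9, epochs=3)

ratio = broad_flops / domain_flops

print(f"Broad model:  {broad_flops:.1e} FLOPs")
print(f"Domain model: {domain_flops:.1e} FLOPs")
print(f"The broad run needs about {ratio:,.0f}x more compute")
```

Because the estimate multiplies parameters, tokens and epochs together, shrinking several of them at once reduces the compute requirement multiplicatively, which is consistent with the report's expectation that server demand can ease even as adoption grows.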
