Google DeepMind's Six Levels of AGI

DeepMind researchers introduce 'Levels of AGI,' similar to the six levels of autonomous driving, to set an industry standard

At a Glance

- DeepMind researchers define artificial general intelligence through six 'levels of AGI' focused on capability, not mechanism.

- The goal is to have a standard definition of AGI in the industry, similar to the six levels of autonomous driving.

- Meta Chief AI Scientist Yann LeCun thinks AGI is poorly termed and would rather use 'human-level AI.'

Google’s DeepMind team is trying to refine the debate around Artificial General Intelligence (AGI) by clearly defining the term.

Some AI optimists see AGI as their ultimate goal and claim it is the initial step toward artificial superintelligence. However, what exactly AGI means is seldom made explicit. The phrase is often used loosely to describe software that, once it crosses some elusive threshold, becomes equivalent to human intelligence.

“We argue that it is critical for the AI research community to explicitly reflect on what we mean by AGI and aspire to quantify attributes like the performance, generality and autonomy of AI systems,” the team wrote in a preprint published on arXiv.

By providing a common framework to evaluate AGI, the researchers hope to establish an industry standard against which AI models are evaluated.

What exactly is AGI?

Currently, there is no agreed-upon definition of AGI. OpenAI’s charter refers to AGI as “… highly autonomous systems that outperform humans at most economically valuable work.”

Most experts say that unlike narrow AI, which excels in specific tasks like language translation or game playing, AGI would possess the flexibility and adaptability to handle any intellectual task a human can. This entails not just mastering specific domains but also transferring knowledge across various fields, displaying creativity and solving novel problems.

To pinpoint precisely what AGI is, the Google researchers drew inspiration from the six-level system used in autonomous driving, which clarifies progress in that field. The team examined prominent AGI definitions and identified key principles essential for any AGI definition.

First, Google researchers say, the definition of AGI should emphasize capabilities rather than the methods AI uses to achieve them. This approach means AI does not have to mimic human thought processes or consciousness to be considered AGI.

They also point out that more than mere generality is needed for AGI. The AI models must reach specific performance benchmarks in their tasks. However, they note that this performance does not have to be demonstrated in real-world scenarios. It is sufficient if a model shows the potential to surpass human abilities in a given task.

Some suggest that for AGI to be real, it might need to be embedded in a robot so it can interact with the physical world.

But the DeepMind team says that is not necessary. They believe AGI should focus on cognitive tasks, including metacognitive ones like learning how to learn. They also say it is essential to measure AGI by how well it performs real-world tasks that people value. And rather than fixating on a single endpoint, they argue for tracking the path toward AGI as it develops over time.

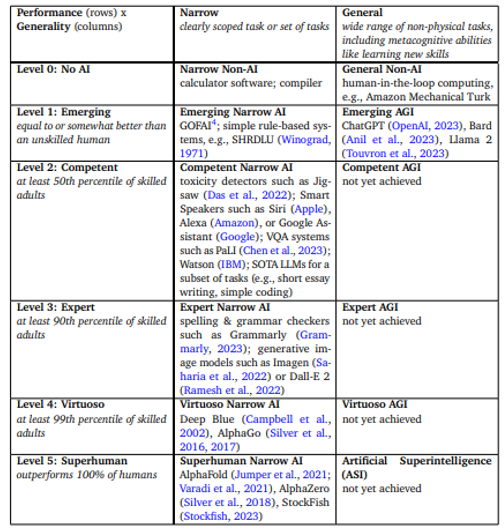

DeepMind's ranking system is called "Levels of AGI." Above a baseline of no AI, it starts with "emerging" (equal to or somewhat better than an unskilled human) and rises through "competent," "expert" and "virtuoso" to "superhuman" (better than any human). The ranking applies both to narrow AI built for a single task and to general AI that handles many tasks.

The team points out that some narrow AI systems, like DeepMind's AlphaFold, are already superhuman. They also think that advanced chatbots like OpenAI's ChatGPT and Google's Bard might qualify as emerging AGI.
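For readers who think in code, the ranking can be sketched as an ordered scale. This is only an illustration, not anything published by DeepMind: the names `AGILevel` and `classify` are hypothetical, and the percentile cutoffs for the middle levels (50th, 90th and 99th percentile of skilled adults) and the "no AI" baseline are taken from the researchers' preprint rather than from this article.

```python
from enum import IntEnum


class AGILevel(IntEnum):
    """Performance levels, ordered so they can be compared with < and >."""
    NO_AI = 0
    EMERGING = 1
    COMPETENT = 2
    EXPERT = 3
    VIRTUOSO = 4
    SUPERHUMAN = 5


# Assumed cutoffs: percentile of skilled adults that the system matches
# or beats on a task. Checked from the highest level downward.
_CUTOFFS = [
    (100.0, AGILevel.SUPERHUMAN),
    (99.0, AGILevel.VIRTUOSO),
    (90.0, AGILevel.EXPERT),
    (50.0, AGILevel.COMPETENT),
]


def classify(skilled_percentile: float,
             matches_unskilled_human: bool = True) -> AGILevel:
    """Map a (hypothetical) measured percentile to a performance level."""
    if not 0.0 <= skilled_percentile <= 100.0:
        raise ValueError("percentile must be between 0 and 100")
    for cutoff, level in _CUTOFFS:
        if skilled_percentile >= cutoff:
            return level
    # Below the 50th percentile, a system counts as "emerging" only if it
    # is at least on par with an unskilled human.
    return AGILevel.EMERGING if matches_unskilled_human else AGILevel.NO_AI


if __name__ == "__main__":
    print(classify(100.0).name)  # a system that outperforms every human
    print(classify(30.0).name)   # a system below the median skilled adult
```

Under this sketch, a narrow system that beats every human on its one task lands at `SUPERHUMAN`, while a chatbot that performs below the median skilled adult across many tasks lands at `EMERGING`. The scale says nothing about generality on its own, which the framework treats as a separate axis.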

AGI, coming soon?

Some in the AI community believe AGI will arrive soon. Asked whether AGI could be realized within the next decade, Nvidia CEO Jensen Huang recently suggested it might occur even earlier. “Depending on your definition, I believe it's possible,” he said at The New York Times DealBook Summit.

Nicole Valentine, an expert in fintech and AI, suggested in an interview that AGI is already here. She said AGI just has not reached its full intellectual potential.

“As the software absorbs its surroundings and is trained, it will appear more mature over time,” Valentine added. “The question for us is how we humans manage the risk and opportunity of software that has the ability to plan, learn, communicate in natural language, share knowledge, build memory and reason.”

Earlier this year, a team of AI experts drew attention with their preprint paper, "Sparks of Artificial General Intelligence: Early experiments with GPT-4." The researchers highlighted that GPT-4 can tackle new and challenging tasks across fields such as mathematics, coding, vision, medicine, law and psychology, with performance nearly on par with humans. They suggested that GPT-4 could be seen as an early, though still incomplete, form of AGI.

However, others say that we are a long way off from anything approaching human-level intelligence in computer form. Meta’s Chief AI Scientist Yann LeCun has said there is no such thing as AGI since “even human intelligence is very specialized.” He suggested that the term AGI be replaced by ‘human-level AI.’

But LeCun acknowledges that machines will "eventually surpass human intelligence in all domains where humans are intelligent," an outcome that would meet most people's definition of AGI.

Proponents of AGI say it could lead to significant advancements across all sectors, from medicine to space exploration.

Assaf Melochna, president of the AI company Aquant, said in an interview that “it is important to acknowledge that, much like social media's manipulation in society and elections, AGI carries a parallel risk, potentially on a larger scale.”
