AMD CEO Sees 'Very High' Interest in AI Chips, Signals China Plan

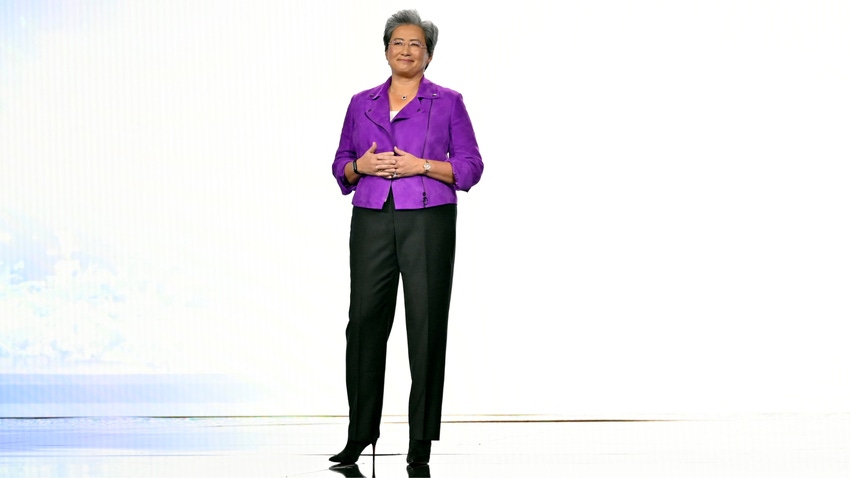

AMD CEO Lisa Su said the chipmaker sees an opportunity to develop a China-specific product set.

At a Glance

- AMD CEO Lisa Su said the chipmaker is seeing "very high" interest in its AI chips among a diverse set of customers.

- As for China, Su sees an opportunity to develop a product set specifically for the Asian nation.

- AI deployments are occurring faster than traditional deployments, Su said.

AMD CEO Lisa Su said the chipmaker is seeing "very high" demand for its chips designed for generative AI, as reports of GPU shortages abound.

"There is no question that the demand for generative AI solutions is very high, and there is a lot of compute capacity that needs to be put in," she said during an earnings call with analysts to discuss second-quarter results.

In particular, customer interest in its MI300X GPU is robust, with several customers looking to deploy as quickly as possible, Su added. This interest is also diverse, coming from large Tier-1 hyperscalers, a new class of AI-focused companies, and large enterprises. This wide swath of customers has "significantly" expanded its engagement with AMD, she said.

AMD's MI300X competes against Nvidia's popular H100 AI chip, but the MI300X has more than double the high-bandwidth memory (HBM) capacity of the H100, which makes it easier to process large AI models.

While the MI300X is a GPU, AMD's MI300A integrates both a CPU and a GPU on one chip for the data center. Production of both chips will ramp in the fourth quarter, but the associated software will be mostly tilted toward the MI300X.

Su said what sets AI deployments apart from others is that "customers are willing to go very quickly. There is a desire and agility because we all want to accelerate the amount of AI compute that's out there."

"The speed in which customers are engaged and customers are making decisions is actually faster than they would in a normal, regular environment," Su added.

In the broader data center market, however, while AI deployments are expanding, cloud customers are continuing to optimize their compute and enterprise customers "remain cautious with new deployments," she said.

China plans

Asked by an analyst how AMD views opportunities in the Chinese market, given U.S. restrictions on sales of advanced AI chips and technology, Su said the Asian nation remains "a very important market for us."

"Our plan is to of course be fully compliant with U.S. export controls, but we do believe there's an opportunity to develop product for our customer set in China that is looking for AI solutions, and we'll continue to work in that direction," she said.

AMD's AI strategy

Su outlined a three-pronged strategy for AMD's push into AI: deliver a broad portfolio of AI chips, including GPUs, CPUs and adaptive solutions for inferencing; extend its open software platform to ensure easy deployment; and build partnerships to accelerate large-scale AI deployments.

AMD also updated the performance and features of its ROCm software platform and expanded framework support for PyTorch, TensorFlow, ONNX, OpenAI's Triton and others. "We're receiving positive feedback on the improvements and new capabilities" of the ROCm software stack, she said.

Looking ahead, Su sees AI as a key growth driver for AMD across the data center, edge and endpoint markets.

"Going forward, we see AI as a significant PC demand driver as Microsoft and other large software providers incorporate generative AI into their offerings. We are executing a multi-generational Ryzen AI processor roadmap which, together with our ecosystem partners, will fundamentally change the PC experience," Su said. "Longer term, while we are still in the very early days of the new era of AI, it is clear that AI represents a multibillion-dollar growth opportunity for AMD."

Stay updated. Subscribe to the AI Business newsletter.
