Meta's Image Generation Model Gets Video, Image Editing Abilities

Emu, which powers Meta's social media platforms, can now remove objects from images or generate GIFs from text prompts

At a Glance

- Meta's Emu AI model can now remove objects from images or generate GIFs from text prompts.

Meta's first image generation foundation model, Emu (Expressive Media Universe), is getting a major upgrade: It can now generate videos from text and perform "precise" image editing.

First showcased at the Meta Connect event in September, Emu underpins many of the generative AI experiences on Meta's social media platforms, including AI image editing tools on Instagram that let users change a photo's visual style or background. The model is also built directly into Meta AI, the company's new assistant platform akin to OpenAI's ChatGPT.

The new Emu Video model can generate videos from natural language text, from images, or from both. Unlike Meta's earlier video generation model, Make-A-Video, which relies on five models, Emu Video uses just two diffusion models and splits generation into two steps:

First, it generates an image conditioned on a text prompt.

Then it generates a video conditioned on both the text prompt and that image.

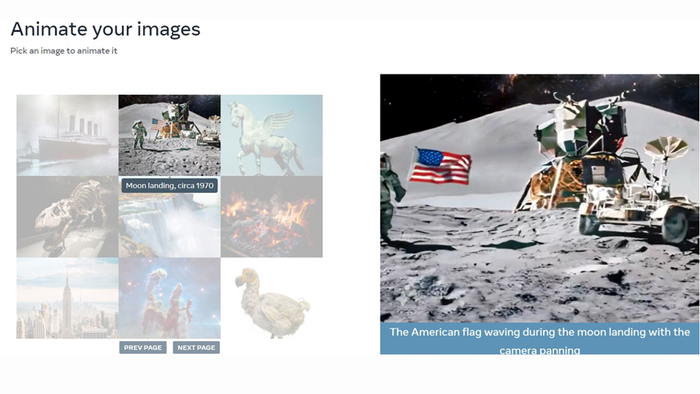
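The two-step ("factorized") process can be pictured as composing two models, where the output of the first becomes a conditioning input to the second. The Python below is a minimal sketch under stated assumptions, not Meta's code: `factorized_text_to_video`, `toy_text_to_image` and `toy_image_to_video` are hypothetical names, and the toy "models" merely return small grids of numbers standing in for the diffusion models Emu Video actually uses. The optional `image` argument mirrors the article's point that an uploaded image can be animated directly, skipping the first step.

```python
from typing import Callable, List, Optional

# Hypothetical toy types: a "frame" is a small 2-D grid of brightness values.
Frame = List[List[float]]
Video = List[Frame]


def factorized_text_to_video(
    prompt: str,
    text_to_image: Callable[[str], Frame],
    image_to_video: Callable[[str, Frame], Video],
    image: Optional[Frame] = None,
) -> Video:
    """Two-stage generation: text -> image, then (text, image) -> video.

    If the caller supplies an image (e.g. an uploaded photo), stage 1 is
    skipped and that image is animated instead.
    """
    if image is None:
        image = text_to_image(prompt)  # stage 1: image conditioned on text
    return image_to_video(prompt, image)  # stage 2: video conditioned on text + image


# --- Hypothetical stand-in "models" so the sketch runs end to end ---
def toy_text_to_image(prompt: str) -> Frame:
    # Deterministic fake image: brightness derived from the prompt length.
    level = (len(prompt) % 10) / 10
    return [[level, level], [level, level]]


def toy_image_to_video(prompt: str, image: Frame, num_frames: int = 4) -> Video:
    # Fake "motion": frame t brightens the conditioning image by 0.1 * t,
    # capped at 1.0, so frame 0 is the conditioning image itself.
    return [
        [[min(1.0, px + 0.1 * t) for px in row] for row in image]
        for t in range(num_frames)
    ]


if __name__ == "__main__":
    clip = factorized_text_to_video("a dog surfing", toy_text_to_image, toy_image_to_video)
    print(len(clip), "frames")  # → 4 frames
```

Keeping the two stages as separate, swappable callables reflects the design choice Meta highlights: each model handles a simpler conditional task than a single model that goes straight from text to video.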

According to Meta, this simpler process allows video generation models to be trained more efficiently. Meta's researchers also said that 96% of users preferred Emu Video's generations over Make-A-Video's for quality, and 85% preferred them for adherence to the text prompt. Emu Video can also animate images that users upload, guided by a text prompt.

You can try the model out for yourself on the Emu Video demo page, which showcases Emu Video's capabilities using preset text and image inputs.

The other Emu update: Emu Edit

Alongside the video generation update, Meta unveiled Emu Edit, a model that can alter images using natural language instructions.

Emu Edit lets users upload an image and type in what they want changed, added or removed.

For example, you can remove a poodle from a picture (not sure why you'd want to do that) or replace it with something else, like a red bench. Just type in the change you want and the model will carry it out.

AI-powered image alteration tools already exist – you can use Stable Diffusion-powered ClipDrop or image modification tools on Runway. However, Meta's researchers said that current methods either over-modify or under-perform on editing tasks.

“We argue that the primary objective shouldn’t just be about producing a ‘believable’ image,” a company blog post reads. “Instead, the model should focus on precisely altering only the pixels relevant to the edit request.”

"Our key insight is that incorporating computer vision tasks as instructions to image generation models offers unprecedented control in image generation and editing."

The Meta team used a dataset of 10 million synthesized images to train Emu Edit. Each sample includes an input image, a description of the task to be performed and the target output image. The company said the model is designed to stick closely to the provided instructions, ensuring that all aspects of the input image unrelated to the instructions remain unaltered.
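To make the three-part training sample and the "leave everything else untouched" goal concrete, here is a minimal Python sketch. The `EditSample` record and the `unrelated_pixels_preserved` check are hypothetical illustrations, not Meta's data format or evaluation code. In particular, the explicit `edit_region` set of pixel coordinates is an assumption added only for the check; Emu Edit itself is driven by text instructions, not masks.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple

Image = List[List[int]]  # toy grayscale image: rows of pixel values
Pixel = Tuple[int, int]  # (row, column)


@dataclass
class EditSample:
    """Hypothetical record mirroring the three parts Meta describes."""
    input_image: Image
    instruction: str  # description of the task to perform
    target_image: Image  # desired output image


def unrelated_pixels_preserved(before: Image, after: Image, edit_region: Set[Pixel]) -> bool:
    """Return True if every pixel outside `edit_region` is unchanged.

    `edit_region` is an assumed mask of pixels the instruction is allowed
    to touch; it is an illustration device, not part of Emu Edit.
    """
    for r, row in enumerate(before):
        for c, value in enumerate(row):
            if (r, c) not in edit_region and after[r][c] != value:
                return False
    return True


# The value 9 stands in for the "poodle" to be removed.
sample = EditSample(
    input_image=[[1, 1, 1], [1, 9, 1], [1, 1, 1]],
    instruction="remove the poodle",
    target_image=[[1, 1, 1], [1, 1, 1], [1, 1, 1]],
)
```

Here the target image only differs at pixel `(1, 1)`, so `unrelated_pixels_preserved(sample.input_image, sample.target_image, {(1, 1)})` holds, while an output that also altered a corner pixel would be flagged as over-modifying, the failure mode Meta's researchers say current methods suffer from.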

You can check out Emu Edit generations on Hugging Face.

To support further testing of image editing models, Meta released a new benchmark, the Emu Edit Test Set, which covers seven image editing tasks, including background alteration and object removal.
