The Quick and the Dead: Security is the Crucible for Applied AI

July 13, 2018

By Yonatan Striem-Amit

Intelligence can best be thought of as a continuum of gradually more intelligent stages, rising to cognition and self-awareness and then increasing in sophistication and complexity. Since Alan Turing proposed his test in 1950, the benchmark for Artificial Intelligence (AI) has been to pass the Turing Test and exhibit behavior indistinguishable from a human's. This implies that our goal in AI should be to keep advancing, moving further and further along the continuum toward human intelligence and beyond.

Because this progress is fundamentally about adaptation and incremental, continuous improvement, it is arguable that cybersecurity is the best domain in which to advance the science of AI. No data set or environment is more challenging.

The name of the game in security is adaptation: adapt or die. In most of IT, the enemy is nature or entropy, but cybersecurity is the part of IT where a sentient, sophisticated adversary actively manipulates controls and telemetry to deceive defenders. Security is where human beings meet and fight it out in cyberspace.

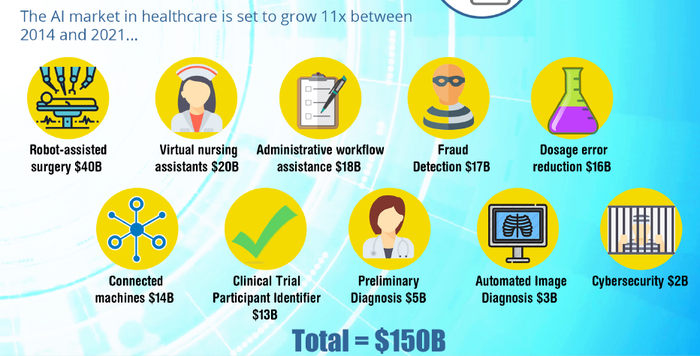


Never slay the same dragon twice

Despite what many IT admins may think, server crashes don't happen merely to spite you. Attackers, by contrast, deliberately hide to evade you and intentionally set out to harm you. This is a dramatically unbalanced arena: the offensive side can always pick the path of least resistance, while defenders must lay out their pieces on the map in advance.

Within the security community today, applied intelligence sits at the early stages of the continuum, but it is firmly on it. The focus is on the large dividends from automation and learning by example. The motto "never slay the same dragon twice" is a byword for learning from previous mistakes and echoes themes from the incident-response analyst subculture.

This is precisely the guiding spirit behind applied machine learning (ML), so it's no surprise that ML is being applied successfully in a variety of ways to get the most out of the Mark 1, carbon-based intelligence (the human analysts) trying to prevent as much damage as possible and to spot the signals of attacker progress.

Current successful implementations include:

Prevention technologies: the branch of security where we seek to automatically prevent the damage caused by malware, without human intervention. Recently, this has advanced toward stopping "unknown malware" (i.e. threats for which no knowledge or signature exists on day zero) and ransomware destruction. Although these technologies are still early on the intelligence continuum, the goal is to learn what is likely good or bad in never-before-seen items such as files, applications and system use.

Detection technologies: these start from the assumption that all controls will eventually be defeated by intelligent, motivated attackers. Attackers will pose as legitimate traffic or find never-before-seen techniques and communication channels to outmanoeuvre static, classically programmed technology. Here, we see machine learning and deep learning applied to masses of telemetry beyond the individual system level. Whether the source is logs, aggregate machine behaviour or network telemetry, the aim is to find the telltale signs of "life" in the silicon. We aren't searching for alien life; we are searching for the familiar behaviours that indicate intelligent, living opponents acting through machine interfaces.

Assisted hunting and automated content extraction: as humans work to stop other humans in cyber-conflict, the sheer volume of information to process almost always tips the task in the attacker's favor. The notion of hunting is not new, but breakthroughs in expert systems, machine learning and deep learning are aimed specifically at automating the more manual and repetitive tasks that humans perform. Automated systems are becoming more and more intelligent as they process volumes of data that humans simply cannot handle. Effective applications of AI here enable defenders to gain context, perform triage, check for overlearning and catch misleading moves by attackers.

Process streamlining and incident automation: in many respects, security is still a game, won or lost on whether systems are compromised or their integrity is preserved. Connecting the dots and extending automation into later parts of the incident lifecycle helps overcome the problem of humans having to internalize what is happening, plan responses and take actions in a decision loop that can be fragile and hard to plan for or reproduce. This isn't merely about preventing and then detecting malicious incursions; it's about actually stopping them and learning how to do so progressively faster and more intelligently.

Attackers are weaponising automation - cybersecurity must too

All of these applications are already in use, to varying degrees, in security operations, in the products supplied to security departments, and in the services and tools used to share information and coordinate defensive activity. Looked at closely, they all point to improvements in key areas along the AI continuum. It doesn't take much extrapolation to see them converging and advancing by leaps and bounds toward the shared goal of all AI applications. This will continue as adversaries make parallel, if not symmetrical, improvements.

Adversaries are aggressively employing automation, machine learning and deep learning to conserve the precious resources of their own carbon-based intelligence, to develop new attack vectors and to disguise themselves more effectively. For each of the applications above, there is a dark counterpart. For years we've observed attackers using automation to scale their operations and applying carbon-based intelligence only in the final stages of successful attacks. Now we are seeing the early stages of "genetic" malware development designed to evade AI-based defenses. This development reflects the persistent, evolution-driven war between attackers and defenders.

If both sides of this conflict improve their learning tools, and those tools pursue goals that carry through successive iterations and improvements, the result is an environment of natural selection, mutation and evolution. Cybersecurity can and will force innovation and adaptation in AI technologies, serving as the ideal testing ground for incremental progress along the AI continuum.

The promise of fully automated systems and truly artificial intelligences taking over, with a Mark 2 silicon brain replacing the Mark 1 carbon brain, is still a long way off. But as in any ecosystem governed by principles like natural selection, progress will accelerate. Eventually we will have artificial intelligences that can pass the Turing Test and operate at scale on both offense and defense, ushering in a new phase of techniques and practices, but we are not at that stage yet. Nevertheless, because of its constant conflict and adversarial pressure, cybersecurity may well be the domain where artificial intelligence is tested hardest and improves the most, one increment at a time.

Yonatan Striem-Amit is the Chief Technology Officer of Cybereason. Cybereason delivers a proprietary technology platform that automatically uncovers malicious operations (Malops™) and reconstructs them as a clear image of a cyberattack in context. This enables enterprises to discover sophisticated targeted threats at a very early stage, disrupt them at the stem and significantly reduce the costs and damage caused by such attacks. Cybereason is headquartered in Cambridge, MA, with offices in Tel Aviv, Israel.
