Toyota, MIT share data to aid autonomous car research

New dataset called DriveSeg contains precise, pixel-level representations of common road objects

June 18, 2020

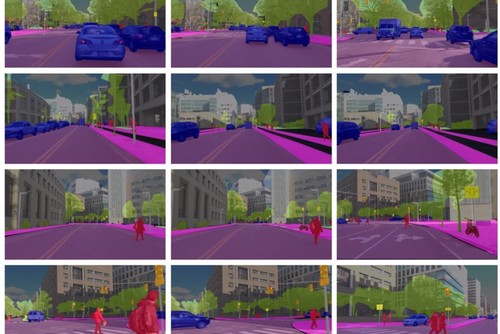

New dataset called DriveSeg contains precise, pixel-level representations of common road objects such as bicycles, pedestrians, and traffic lights

Toyota and the Massachusetts Institute of Technology have teamed up to improve understanding of assisted and autonomous driving.

The pair have shared a new, open dataset called DriveSeg in the hopes that it will assist research into driverless cars.

Autonomous systems perceive the driving environment as a continuous flow of information, much in the same way a human mind does. The hope is that the dataset will allow researchers to develop future autonomous vehicles that have a greater awareness of their surroundings and can learn from past experiences to recognize – and safely negotiate – potential problems.

Here’s some data

DriveSeg is the result of work done by the AgeLab at the MIT Center for Transportation and Logistics, and the Toyota Collaborative Safety Research Center (CSRC).

The dataset differs from much of the data previously made available to researchers in that it contains precise, pixel-level representations of common road objects such as bicycles, pedestrians, and traffic lights, through the lens of a continuous driving scene.

Conventional training data for autonomous cars relies on vast collections of static, single images in which road objects are marked with rectangular ‘bounding boxes’.

The main advantage of DriveSeg, its creators claim, is full scene segmentation that is more helpful in identifying amorphous objects – such as road construction and vegetation – which do not have uniform, defined shapes.
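The following is a minimal, hypothetical sketch of why pixel-level labels suit amorphous objects better than boxes. It uses a made-up 8×8 mask for an irregular, vegetation-like region. This is not DriveSeg data or its file format, and the helper names are illustrative only. The code puts a bounding box around the object and measures how much of that box the object actually fills.

```python
# Hypothetical 8x8 "frame": an irregular, vegetation-like object labelled
# pixel by pixel (1 = object, 0 = background). Not real DriveSeg data.
MASK = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0, 0],
    [0, 0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]


def bounding_box(mask):
    """Return (row_min, col_min, row_max, col_max) enclosing every labelled pixel."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, v in enumerate(row) if v]
    return min(rows), min(cols), max(rows), max(cols)


def box_fill_ratio(mask):
    """Fraction of the bounding box's pixels that actually belong to the object."""
    r0, c0, r1, c1 = bounding_box(mask)
    box_area = (r1 - r0 + 1) * (c1 - c0 + 1)
    object_pixels = sum(sum(row) for row in mask)
    return object_pixels / box_area


if __name__ == "__main__":
    print(bounding_box(MASK))             # (1, 1, 6, 6)
    print(f"{box_fill_ratio(MASK):.2f}")  # 0.42
```

In this toy example only 15 of the box's 36 pixels belong to the object, so most of the box is background. A pixel-level mask records the object's actual shape instead.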

In addition, video-based driving scene perception is said to provide data which more closely resembles dynamic real-world situations. This will allow researchers to explore patterns over a period of time, which – in theory – should lead to advances in machine learning and behavioral prediction.

Bryan Reimer, principal researcher at MIT, said: “In sharing this dataset, we hope to encourage researchers, the industry and other innovators to develop new insight and direction into temporal AI modeling that assists the next generation of assisted driving and automotive safety technologies. Our long-standing relationship with Toyota CSRC has enabled our research efforts to impact future safety technologies.”

Rini Sherony, senior principal engineer at the Toyota Collaborative Safety Research Center, added: “Predictive power is an important part of human intelligence. Whenever we drive, we are always tracking the movements of the environment around us to identify potential risks and make safer decisions.

“By sharing this dataset, we hope to accelerate research into autonomous driving systems and advanced safety features that are more attuned to the complexity of the environment around them.”

Access to DriveSeg is free for the research and academic community, and comes in two parts. DriveSeg (manual) is nearly three minutes of video taken during a daytime trip round the busy streets of Cambridge, Massachusetts.

DriveSeg (semi-auto) consists of 67 ten-second video clips, or 21,000 video frames, drawn from MIT Advanced Vehicle Technology Consortium data.
