Glaze: New Tool to Stop AI from Copying the Work of Artists

University of Chicago researchers develop a new tool that prevents text-to-image AI models from copying the work of artists.

At a Glance

- University of Chicago researchers develop a tool that prevents text-to-image generators from copying artists' work.

- Glaze 'cloaks' the 'style feature' of a piece of art, altering it just enough to fool AI models while remaining indiscernible to the naked eye.

- In developing Glaze, the team used AI against itself.

A team of computer scientists from the University of Chicago has developed a tool that they say would prevent text-to-image models like DALL-E from copying the work of artists.

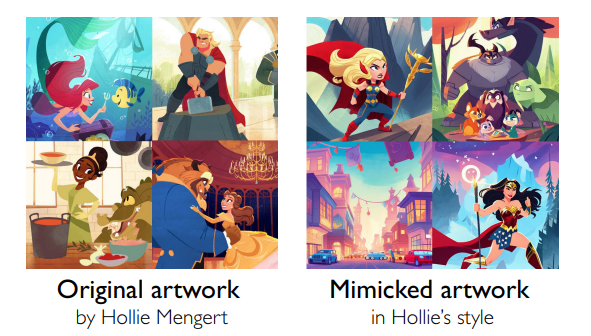

Called Glaze, the software ‘cloaks’ images so that AI models trained on them cannot correctly learn the unique features of an artist’s style. Text-to-image generators such as DALL-E, Stable Diffusion and Midjourney train on image datasets and can then generate images that mimic the creative styles of the artists in those datasets.

“Artists really need this tool; the emotional impact and financial impact of this technology on them is really quite real,” said computer science professor Ben Zhao, who co-leads the research group that developed the tool.

“We talked to teachers who were seeing students drop out of their class because they thought there was no hope for the industry, and professional artists who are seeing their style ripped off left and right,” he said.

Zhao heads the university’s SAND Lab research group, the developer of Glaze, along with fellow computer science professor Heather Zheng. Three years ago, the lab developed Fawkes, an algorithm that cloaks personal photos so they cannot be used to train facial recognition AI models.

As the popularity of text-to-image models soared, artists reached out to the lab to ask whether Fawkes could be used to protect their work. But simply adapting Fawkes to art was not enough: a face has relatively few features to cloak, such as eye color or nose shape, whereas an artist's style is defined by many more. A new tool would have to be developed to cloak them.

Then another challenge came up: How exactly should they cloak a design without distorting it?

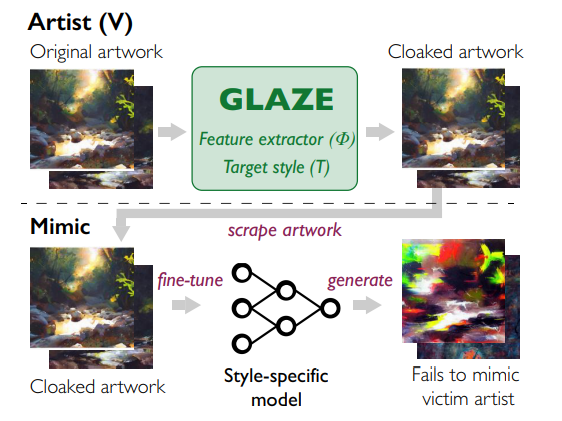

The answer was to change only the style features, not the rest of the design. “We had to devise a way where you basically separate out the stylistic features from the image from the object, and only try to disrupt the style feature using the cloak,” said Shawn Shan, co-author of a research paper about Glaze.

In this endeavor, they decided to use AI against itself.

First, they used ‘style transfer’ algorithms, which change an image into a different artistic style, such as transforming a charcoal image into a watercolor version. Glaze applies style transfer to the original art to find the features that change when the image is converted into another style. Glaze then goes back to the original art and cloaks these same features “just enough to fool” AI models while remaining indiscernible to the naked eye, according to the researchers.
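The cloaking step described above can be pictured as a small constrained optimization: nudge the image so that its features move toward those of a style-transferred version, while keeping each pixel change within a tight budget. The sketch below is a toy illustration only and is not the researchers' implementation. It assumes a random linear map `W` as a stand-in for an AI model's feature extractor, a random vector as a stand-in for the style-transferred artwork, and a per-pixel `budget` as a crude stand-in for "indiscernible to the naked eye". All names in it (`cloak`, `budget`, `W`) are hypothetical.

```python
import numpy as np


def cloak(image, target, W, budget=0.05, steps=200, lr=0.1):
    """Toy cloak: shift W @ image toward W @ target with a bounded change.

    image, target: flat arrays with pixel values in [0, 1].
    W: hypothetical linear feature extractor (a real system would use a
       neural network; this is an assumption made for illustration).
    budget: maximum absolute change allowed for any single pixel.
    """
    target_features = W @ target
    # Per-pixel bounds keep the change within the budget and the
    # cloaked pixels inside the valid [0, 1] range.
    lower = np.maximum(-budget, -image)
    upper = np.minimum(budget, 1.0 - image)
    delta = np.zeros_like(image)
    for _ in range(steps):
        # Gradient of 0.5 * ||W (image + delta) - W target||^2 w.r.t. delta.
        residual = W @ (image + delta) - target_features
        grad = W.T @ residual
        # Gradient step, then project back into the allowed box.
        delta = np.clip(delta - lr * grad, lower, upper)
    return image + delta


# Illustrative data: 64 "pixels", 16 "style features".
rng = np.random.default_rng(0)
W = rng.normal(size=(16, 64)) / np.sqrt(64)
image = rng.uniform(0.2, 0.8, size=64)   # stand-in for the original art
target = rng.uniform(0.0, 1.0, size=64)  # stand-in for the style-transferred art

cloaked = cloak(image, target, W)
print("max pixel change:", np.max(np.abs(cloaked - image)))
print("feature gap before:", np.linalg.norm(W @ image - W @ target))
print("feature gap after: ", np.linalg.norm(W @ cloaked - W @ target))
```

In this toy setup the cloaked image stays within the pixel budget while its features sit closer to the target style than the original's did, which is the general shape of the trade-off the researchers describe.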

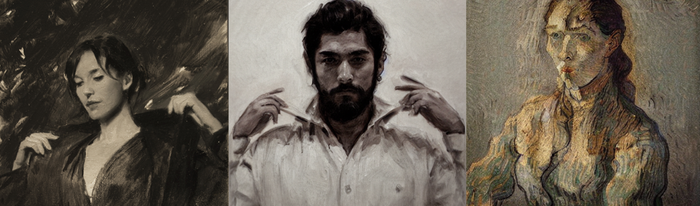

Left: Original art. Middle: Copy of artistic style without cloaking. Right: Copied art with cloaking.

After Glaze was applied, the AI model was far less able to mimic the artist’s style. Even when the model was trained on both cloaked and uncloaked images, the mimicry was “far less accurate” on new prompts, the team said.

Since these AI models must “constantly scrape websites for new data, an increase in cloaked images will eventually poison their ability to recreate an artist’s style,” Zhao said.

The team is working on a downloadable version of Glaze that will enable cloaking in minutes on a home computer before work is posted online.
