AWS Unveils AI Chatbot, New Chips and Enhanced 'Bedrock'

AWS revs up its generative AI muscles with Q, two new chips to power AI workloads and Bedrock with guardrails and other new features.

At a Glance

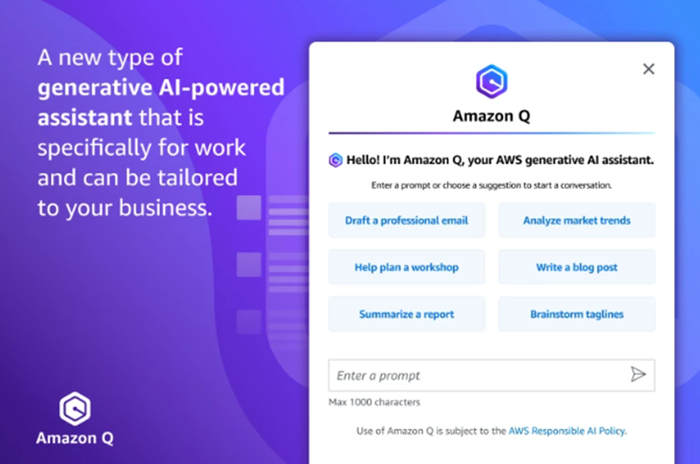

- At its re:Invent conference, AWS unveiled Amazon Q, a generative AI chatbot for contact centers.

- AWS also unveiled Graviton4 and Trainium2 chips that can deliver faster processing at lower cost and energy use.

At its annual developer conference, AWS unveiled an AI chatbot meant to help contact center agents deliver a better customer experience.

Called Amazon Q, the chatbot will recommend responses and actions to agents interacting with a customer in real time for “faster and more accurate customer support.” Solving a customer’s problem more quickly and precisely should reduce the need to escalate the issue to a manager.

Q is available through Amazon Connect, which is AWS’ cloud-based contact center. The company said non-technical business leaders can access Q by setting up a cloud contact center “within minutes.”

AWS also announced Amazon Contact Lens, which uses generative AI to create summaries of customer interactions with agents. Typically, contact center supervisors rely on notes that agents create about a customer’s issue. They also listen to hours of customer calls or read large volumes of transcripts to learn from agent-customer interactions and improve their contact center service. With Contact Lens, AWS said, supervisors save time by getting a short summary of each encounter.

AWS also unveiled Amazon Lex, which simplifies the building of self-service chatbots and interactive voice response (IVR) systems, which are essentially self-service voice trees.

Companies can now build chatbots and IVRs by describing what they want in plain language. Example prompts include, “Build a bot to handle hotel reservations using customer name and contact information, travel dates, room type and payment details.”

Instead of answering customers with pre-programmed responses, these bots will use generative AI to respond more dynamically and understand requests better. For example, if a customer wants a hotel room for Saturday and Sunday, the chatbot will interpret it as two nights.

These chatbots can also be set up to look through a company’s knowledge base to find the answer the customer wants.

“The contact center industry is poised to be fundamentally transformed by generative AI, offering customer service agents, contact center supervisors, and contact center administrators new ways to deliver personalized customer experiences even more effectively,” said Pasquale DeMaio, vice president, Amazon Connect, AWS Applications, in a statement.

Upgraded chips

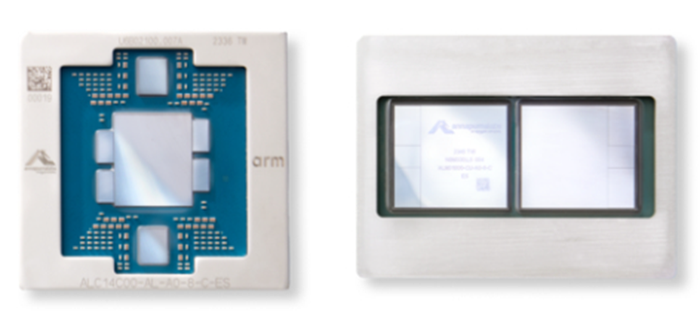

At AWS re:Invent, the cloud computing giant also took the wraps off two upgraded chips to better process AI workloads.

From left: Graviton4 and Trainium2

AWS unveiled the next generation of its AWS-designed Graviton and Trainium chip families for diverse workloads including machine learning training and generative AI applications.

The company said Graviton4 offers up to 30% better compute performance, 50% more cores, and 75% more memory bandwidth than current Graviton3 chips to deliver “the best price performance and energy efficiency.”

As for Trainium2, it is designed to deliver up to four times faster training than first-generation chips and can be deployed in EC2 UltraClusters of up to 100,000 chips, making it possible to train foundation models and large language models “in a fraction of the time” while doubling energy efficiency.

Graviton4 will be available in memory-optimized Amazon EC2 R8g instances, which let customers process more data, scale workloads and lower costs. Graviton4-powered R8g instances are available in preview, with general availability expected in the next few months.

The Trainium2 chip is built to process foundation and large language models with up to trillions of parameters. AWS said it offers up to four times faster training performance, three times more memory capacity and up to double the energy efficiency compared to the first generation. It will be available in Amazon EC2 Trn2 instances, with 16 chips in a single instance. "Trn2 instances are intended to enable customers to scale up to 100,000 Trainium2 chips in next generation EC2 UltraClusters, interconnected with AWS Elastic Fabric Adapter (EFA) petabit-scale networking, delivering up to 65 exaflops of compute and giving customers on-demand access to supercomputer-class performance," the company said.

That means customers can train a 300-billion parameter large language model in weeks instead of months, according to AWS.
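The scale figures AWS quotes for Trn2 can be sanity-checked with simple arithmetic. The sketch below is a back-of-the-envelope calculation, not an AWS benchmark: it assumes the "up to" numbers all apply at once, and AWS's statement does not say which numeric precision the 65 exaflops figure refers to. The function and variable names are illustrative only.

```python
# Figures quoted by AWS in the article (all are "up to" values).
CHIPS_PER_ULTRACLUSTER = 100_000   # Trainium2 chips in one EC2 UltraCluster
CHIPS_PER_TRN2_INSTANCE = 16       # Trainium2 chips in one Trn2 instance
ULTRACLUSTER_EXAFLOPS = 65         # aggregate compute of the UltraCluster


def implied_scale(chips, chips_per_instance, exaflops):
    """Return (instance count, implied teraflops per chip).

    Assumes compute is spread evenly across chips, which is a
    simplification made for this illustration.
    """
    instances = chips // chips_per_instance
    # 1 exaflop = 1,000,000 teraflops
    teraflops_per_chip = exaflops * 1_000_000 / chips
    return instances, teraflops_per_chip


instances, tflops_per_chip = implied_scale(
    CHIPS_PER_ULTRACLUSTER, CHIPS_PER_TRN2_INSTANCE, ULTRACLUSTER_EXAFLOPS
)
print(f"Trn2 instances in a full UltraCluster: {instances:,}")
print(f"Implied compute per chip: {tflops_per_chip:.0f} teraflops")
```

On these assumptions, a full UltraCluster works out to 6,250 Trn2 instances and roughly 650 teraflops per chip.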

New features in Bedrock

AWS also unveiled new features for Bedrock, its fully managed platform that gives users access to leading large language and foundation models through a single API.

Bedrock will let customers deploy safeguards in their generative AI applications that fit their responsible AI policies. It will have knowledge bases for building generative AI applications that use proprietary data to deliver customized use cases. Bedrock will also have agents that can execute multistep tasks using a company's own systems and data, such as taking sales orders. Finally, Bedrock now supports fine-tuning of Cohere Command Lite, Meta Llama 2 and Amazon Titan Text models. Support for Anthropic Claude is coming soon.

The guardrails capability is in preview while knowledge bases and agents are now generally available.
