Create Unique Sounds with Meta's New Audiobox AI

Audiobox lets users create sounds using natural language, but Meta will not open source it over fears of misuse.

At a Glance

- Meta's Audiobox AI creates custom sounds and speech from text prompts.

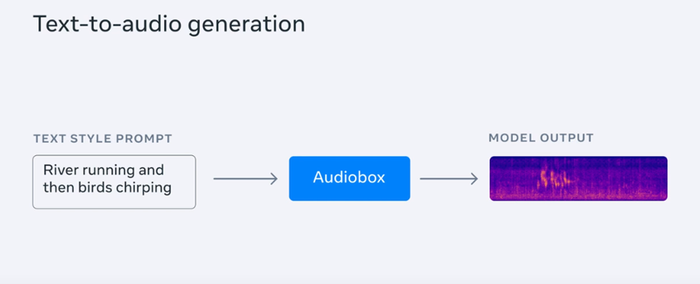

Facebook parent Meta has unveiled Audiobox, its latest audio generation AI model, which lets users turn text into sound.

Simply describe what you want to hear and the text-to-audio model will generate the sound you requested. The successor to Meta's Voicebox audio generation model, Audiobox takes prompts in natural language.

For example, you can type ‘a beaver munching on a slice of pineapple’ or ‘a young woman talking inside a church’ and the model will generate the desired sound.

You can listen to some Audiobox audio samples on Meta’s research website.

Audiobox can also handle audio inputs – so users can combine a voice input plus a text prompt to better synthesize the audio. This lets users specify the style of speech and sound effects they want generated – a feature not found in the prior version of the model. “When a voice input and text prompt are used together, the voice input anchors the timbre, and the text prompt can be used to change other aspects,” according to Meta.

Meta said Audiobox could be used to produce quality audio for media such as podcasts and audiobooks. As such, users get to create audio that otherwise “requires access to extensive sound libraries as well as deep domain expertise to yield optimal results — expertise that the public, or even hobbyists, may not possess,” the researchers wrote.

Meta said that audio generation systems like the new Audiobox will “lower the barrier of accessibility for audio creation and make it easy for anyone to become an audio content creator.”

“Creators could use models like Audiobox to generate soundscapes for videos or podcasts, custom sound effects for games, or any of a number of other use cases.”

The predecessor, Voicebox, uses natural language prompts to create speech in a variety of styles and languages that also can be edited. It does not create non-speech sounds, unlike Audiobox.

Detecting AI-generated audio

Audiobox has automatic audio watermarking – any audio generated by the AI model can be traced.

Meta’s watermarking method embeds a signal into the audio, which the company said is “imperceptible to the human ear” but can be detected down to the frame level. Audiobox was tested against a series of cyber attacks, and Meta’s researchers found it robust enough that bad actors would have difficulty misusing the system.
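To give a feel for what frame-level detection means, here is a toy sketch in Python. It is not Meta's method, which the article does not describe in detail. It swaps in a classic spread-spectrum idea: add a faint keyed pseudo-random sequence to the samples, then correlate each frame against the same sequence. The names `FRAME`, `STRENGTH`, `chips`, `embed` and `detect_frames` are hypothetical, and the watermark strength is exaggerated so the toy stays reliable.

```python
import math
import random

FRAME = 4000       # samples per frame (hypothetical value)
STRENGTH = 0.05    # watermark amplitude, exaggerated for this toy example

def chips(key, n):
    """Keyed pseudo-random +/-1 sequence shared by embedder and detector."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]

def embed(samples, key):
    """Add the low-amplitude keyed sequence to the audio samples."""
    c = chips(key, len(samples))
    return [s + STRENGTH * ci for s, ci in zip(samples, c)]

def detect_frames(samples, key, threshold=STRENGTH / 2):
    """Return one flag per full frame: True if the frame correlates with the key."""
    c = chips(key, len(samples))
    flags = []
    for start in range(0, len(samples) - FRAME + 1, FRAME):
        corr = sum(samples[i] * c[i] for i in range(start, start + FRAME)) / FRAME
        flags.append(corr > threshold)
    return flags

# A quiet 440 Hz tone at 16 kHz stands in for generated audio.
tone = [0.3 * math.sin(2 * math.pi * 440 * t / 16000) for t in range(4 * FRAME)]

# Watermark only the first two frames so the detector has something to localize.
half = 2 * FRAME
mixed = embed(tone[:half], key=1234) + tone[half:]

print(detect_frames(mixed, key=1234))  # flags for frames 0..3
```

In this sketch the first two frames are flagged and the last two are not, and a detector using a different key flags nothing. A production watermark would also have to survive compression, resampling and editing, which this toy does not attempt.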

A soon-to-be-released Audiobox demo will have a voice authentication feature to safeguard against impersonation.

“Anyone who wants to add a voice to the Audiobox demo will have to speak a voice prompt using their own voice. The prompt changes at regular, rapid intervals and makes it extremely difficult to add someone else’s voice with pre-recorded audio.”
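Meta has not published how the rotating prompt works, so the following Python sketch is only an assumption about the general idea: derive the phrase from a secret and the current time window, in the spirit of time-based one-time passwords, so that a recording made in one window is unlikely to match a later one. The word list, `INTERVAL_S`, `challenge_phrase` and `accept` are all hypothetical, and the sketch checks only the transcript, not whose voice spoke it.

```python
import hashlib
import hmac

# Hypothetical word list; a real system would use a far larger vocabulary.
WORDS = ["amber", "river", "candle", "orbit", "meadow", "copper", "violet", "harbor"]
INTERVAL_S = 30  # hypothetical rotation period in seconds

def challenge_phrase(secret: bytes, now: float, n_words: int = 4) -> str:
    """Derive the phrase for the time window that contains `now`."""
    window = int(now // INTERVAL_S)
    digest = hmac.new(secret, str(window).encode(), hashlib.sha256).digest()
    return " ".join(WORDS[b % len(WORDS)] for b in digest[:n_words])

def accept(secret: bytes, spoken_text: str, now: float) -> bool:
    """Accept only if the transcript matches the current window's phrase."""
    expected = challenge_phrase(secret, now)
    heard = spoken_text.strip().lower()
    return hmac.compare_digest(heard.encode(), expected.encode())

secret = b"demo-secret"                      # placeholder server-side secret
phrase = challenge_phrase(secret, now=10.0)  # phrase shown to the user
print(accept(secret, phrase, now=25.0))      # same 30-second window
```

A phrase read aloud within the same window is accepted. Once the window rolls over, the expected phrase is re-derived, so replaying older audio would usually fail the check.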

Audiobox is not the only audio generation system to feature watermarking protection. Audio generated by Google DeepMind’s new Lyria model can be detected by the SynthID tool, with watermarks embedded directly into the audio waveforms of Lyria outputs.

How to access Meta Audiobox

Voicebox was first unveiled in June, but Meta uncharacteristically opted not to make the AI model open source over fears of potential misuse.

Meta has adopted the same stance for Audiobox, saying that “while we believe it is important to be open with the AI community and to share our research to advance the state of the art in AI, it is also necessary to strike the right balance between openness and responsibility.”

However, Audiobox is being released to a group of hand-selected researchers. The model will be used in AI-related speech research, specifically to “tackle the responsible AI aspects of this work.”

Researchers can also apply for a grant to conduct AI safety and responsibility research with Audiobox. Applications will open “in the coming weeks.”
