AI Audio Generation: Everything You Need to Know

AI tools can generate audio from text and existing audio


At a time when artificial intelligence is creating art and writing copy, there's another exciting development occurring in the world of generative AI: audio generation.

Imagine typing a few lines about a piece of music you’d like to hear and having a system create it for you. Or generating voice lines for a podcast or video you’re editing.

A range of models and tools now let AI do more than hit the right notes: they can create entirely new auditory landscapes.

Text-to-audio

What are text-to-audio AI models?

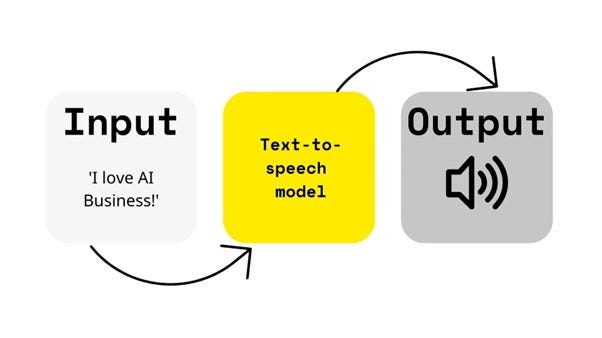

A text-to-audio AI model takes text as input and generates audio content as output. The output can range from speech to music.

The most common form is TTS, or text-to-speech, which is used to build voice assistants such as Siri and Alexa. TTS can produce spoken content in many languages.

Text-to-audio AI models

MusicLM

Creators: Google

First published: January 2023

MusicLM can generate high-fidelity music from text inputs. Users can type in a prompt such as "a guitar riff with air horns playing in time" and the model will generate a musical output.

MusicLM generates music at 24 kHz that remains consistent over several minutes.
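To put 24 kHz in perspective: that sample rate means 24,000 amplitude values for every second of audio. The quick back-of-the-envelope calculation below assumes a single (mono) channel and a hypothetical five-minute track; the function name is illustrative only.

```python
# Back-of-the-envelope: how many audio samples does a clip contain?
# Assumes mono audio; the 5-minute duration is an illustrative choice.

SAMPLE_RATE_HZ = 24_000  # 24 kHz, as stated for MusicLM's output


def num_samples(duration_seconds: float, sample_rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Return the number of samples in a mono clip of the given duration."""
    return round(duration_seconds * sample_rate_hz)


if __name__ == "__main__":
    five_minutes = 5 * 60
    print(num_samples(five_minutes))  # 7200000
```

Five minutes of mono audio at 24 kHz works out to 7.2 million individual values that the generated music has to keep coherent.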

The model works by mapping audio snippets to a database of words that describe how the music sounds. It then takes the user's text or audio input and generates matching audio.
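One way to picture mapping audio and descriptive words into a shared space is a toy nearest-neighbor lookup: if text and audio are represented as vectors in the same space, the clip whose vector points in the most similar direction to the prompt's vector is the closest match. This sketch is purely illustrative. The three-dimensional vectors, clip names and helper functions are hypothetical stand-ins, not MusicLM's embeddings or code (the MusicLM paper relies on a learned joint music-text embedding model called MuLan).

```python
import math

# Hypothetical, hand-made 3-D embeddings. A real system would learn
# high-dimensional vectors from paired music and text data.
AUDIO_EMBEDDINGS = {
    "distorted_guitar_riff.wav": [0.9, 0.1, 0.0],
    "calm_piano.wav": [0.0, 0.2, 0.9],
    "air_horn_blast.wav": [0.3, 0.9, 0.1],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(text_embedding: list[float]) -> str:
    """Return the audio clip whose embedding is closest to the text embedding."""
    return max(
        AUDIO_EMBEDDINGS,
        key=lambda name: cosine_similarity(text_embedding, AUDIO_EMBEDDINGS[name]),
    )


if __name__ == "__main__":
    # Hypothetical embedding for a prompt like "a heavy guitar riff".
    prompt_embedding = [0.8, 0.2, 0.1]
    print(best_match(prompt_embedding))  # distorted_guitar_riff.wav
```

MusicLM generates new audio rather than retrieving an existing clip; the lookup only shows how a shared text-audio space lets a written prompt be compared with sounds.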

Check out MusicLM examples - https://google-research.github.io/seanet/musiclm/examples/

Read more about MusicLM on AI Business: https://aibusiness.com/nlp/google-unveils-musiclm-an-ai-text-to-music-model

AudioPaLM

Creators: Google

First published: June 2023

AudioPaLM can generate text and speech for speech recognition and speech-to-speech translation.

To create AudioPaLM, Google combined its audio generation model AudioLM with its flagship large language model, PaLM-2. The result is a model designed to leverage larger quantities of text training data to assist with speech tasks.

AudioPaLM can also be fine-tuned to consume and produce tokenized audio across a mixture of speech and text tasks. It can perform zero-shot speech-to-text translation for language combinations not seen in training, and it can transfer a speaker's voice across languages based on a short spoken prompt.
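Treating audio as tokens lets a language model read and write speech much as it handles text. The AudioPaLM paper describes extending a text model's vocabulary (and its embedding matrix) with extra entries for discrete audio tokens. The toy sketch below shows only the ID bookkeeping behind that idea; the vocabulary sizes, the offset scheme and the helper names are hypothetical and far smaller than anything used in practice.

```python
# Toy illustration of a shared text-and-audio token space.
# Sizes are hypothetical and tiny; real vocabularies are far larger.
TEXT_VOCAB_SIZE = 1_000   # IDs 0..999 are text tokens
AUDIO_VOCAB_SIZE = 256    # discrete audio codes 0..255 from some audio tokenizer


def audio_code_to_id(code: int) -> int:
    """Place an audio code after the text vocabulary so IDs never collide."""
    if not 0 <= code < AUDIO_VOCAB_SIZE:
        raise ValueError(f"audio code out of range: {code}")
    return TEXT_VOCAB_SIZE + code


def id_to_token(token_id: int) -> tuple[str, int]:
    """Map a combined-vocabulary ID back to ("text", id) or ("audio", code)."""
    if 0 <= token_id < TEXT_VOCAB_SIZE:
        return ("text", token_id)
    if TEXT_VOCAB_SIZE <= token_id < TEXT_VOCAB_SIZE + AUDIO_VOCAB_SIZE:
        return ("audio", token_id - TEXT_VOCAB_SIZE)
    raise ValueError(f"unknown token id: {token_id}")


if __name__ == "__main__":
    # A mixed sequence: hypothetical text tokens followed by audio codes.
    text_ids = [17, 42]
    audio_codes = [5, 200, 5]
    sequence = text_ids + [audio_code_to_id(c) for c in audio_codes]
    print(sequence)  # [17, 42, 1005, 1200, 1005]
    print([id_to_token(t) for t in sequence])
```

Because text and audio share one ID space, a single model can take a sequence that starts with text and continues with audio (or the reverse) without any special routing.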

Check out the AudioPaLM paper - https://arxiv.org/abs/2306.12925

Read more on AI Business about AudioPaLM - https://aibusiness.com/nlp/google-combines-palm-language-model-with-audio-generation-for-translation-tasks

Voicebox

Creators: Meta

First published: June 2023

Voicebox is a generative AI model that can create audio from existing clips just two seconds long. Voicebox learns from both raw audio and an accompanying transcription to generate audio.

Voicebox can match the style of an audio sample for text-to-speech generation. It can also be used to edit audio, such as removing background noise like a dog barking or distant car horns.

Read more about Voicebox on AI Business: https://aibusiness.com/companies/meta-unveils-revolutionary-audio-ai-but-won-t-release-it

Make-An-Audio

Creators: ByteDance - researchers from the AI lab at TikTok’s parent company

First published: January 2023

Make-An-Audio is a prompt-enhanced diffusion model capable of generating audio from text prompts.

The model can be used to create personalized audio snippets from natural language inputs and existing audio. It can also be applied to video-to-audio generation.

Read the Make-An-Audio paper - https://text-to-audio.github.io/paper.pdf

AI text-to-audio platforms

Want to try some AI text-to-audio tools? Try these:

PlayHT

PlayHT offers a range of text-to-audio tools, including voice generation for podcasts and voice cloning.

The startup behind it is trying to empower businesses to create natural speech content using state-of-the-art AI voices. The likes of Amazon, Samsung and Verizon have all used PlayHT to generate audio content.

Try PlayHT’s AI text-to-speech tech - https://play.ht/products/

Murf.ai

Murf.ai offers text-to-audio tools for corporate or entertainment purposes. Its studio includes text-to-speech for adverts, educational lessons and presentations, among others.

The likes of Nasdaq, Oracle and Toyota are among Murf’s users, with its enterprise plan including a project-sharing workspace for teams to view or edit audio projects.

Resemble.ai

Text-to-audio tools on Resemble.ai enable users to create realistic voiceovers. Resemble also offers voice cloning and tools to localize audio content in various languages.

Netflix, the World Bank Group and Boingo count among Resemble.ai’s users.

WellSaid Labs

Seattle-based WellSaid Labs offers text-to-speech for voiceovers. Its studio platform lets users craft and curate custom voices for specific use cases.

WellSaid users include Boeing, Snowflake, Intel and Peloton.

Audio-to-text models

Whisper

Creators: OpenAI

First published: September 2022

Whisper is an open-source speech recognition system. Trained on 680,000 hours of data collected from the web, the model can transcribe speech in multiple languages and translate it into English.

Around one-third of the audio used to build Whisper is non-English, according to OpenAI. The company opened up access to the model so developers can use it as a foundation for building applications.

Check out the Whisper paper - https://cdn.openai.com/papers/whisper.pdf

Read more about Whisper on AI Business - https://aibusiness.com/ml/openai-launches-ai-transcription-tool-whisper

VALL-E

Creators: Microsoft

First published: January 2023

VALL-E can generate speech audio from just a three-second sample. It mimics the target speaker, producing what they would sound like saying a desired text input, and can preserve the speaker's emotion from the sample audio.

VALL-E also supports text-to-speech synthesis with little prior data, and could be used for tasks such as speech editing and content creation when combined with other generative AI models.
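The VALL-E paper frames speech synthesis as language modeling over discrete codes produced by a neural audio codec (Meta's EnCodec), so a three-second prompt becomes a fairly short sequence of tokens. The rough count below assumes the codec settings the paper reports (24 kHz audio, 75 codec frames per second, eight codebooks per frame); the function is a hypothetical helper for the arithmetic, not part of any VALL-E code.

```python
# Rough token count for a VALL-E-style acoustic prompt.
# Assumed codec settings (as reported for EnCodec in the VALL-E paper):
# 24 kHz input, 75 frames per second, 8 residual codebooks per frame.
SAMPLE_RATE_HZ = 24_000
FRAMES_PER_SECOND = 75
NUM_CODEBOOKS = 8


def prompt_tokens(duration_seconds: float) -> dict[str, int]:
    """Return samples, codec frames, and total discrete codes for a clip."""
    samples = round(duration_seconds * SAMPLE_RATE_HZ)
    frames = round(duration_seconds * FRAMES_PER_SECOND)
    return {
        "samples": samples,
        "frames": frames,
        "codes": frames * NUM_CODEBOOKS,
        "samples_per_frame": SAMPLE_RATE_HZ // FRAMES_PER_SECOND,
    }


if __name__ == "__main__":
    print(prompt_tokens(3))
    # {'samples': 72000, 'frames': 225, 'codes': 1800, 'samples_per_frame': 320}
```

Under these assumptions, 72,000 raw samples shrink to 225 codec frames (1,800 discrete codes), a far shorter sequence for a language model to condition on.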

Check out the VALL-E paper - https://arxiv.org/abs/2301.02111

Read more about VALL-E on AI Business - https://aibusiness.com/microsoft/microsoft-s-vall-e-generates-speech-from-just-3-seconds-of-audio

Fairseq S2T

Creators: Facebook AI (now Meta AI)

First published: October 2020

Fairseq S2T is a Transformer-based seq2seq model designed for automatic speech recognition and speech translation.

Fairseq S2T generates transcripts and translations autoregressively. It uses a convolutional downsampler to significantly reduce the length of speech inputs before they are fed into the encoder.
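The effect of a strided convolutional downsampler on sequence length can be shown with a small pure-Python sketch. It assumes a setup like fairseq S2T's default subsampler as we understand it: two 1-D convolution layers with kernel size 5 and stride 2, which together cut the number of input frames by roughly a factor of four. The fixed averaging kernel, the single channel and the 1,000-frame input (about 10 seconds at an assumed 10 ms frame shift) are simplifications; real layers use learned weights over multi-channel filterbank features.

```python
# Sketch of how strided 1-D convolutions shorten a speech feature sequence.
# Assumptions: 2 layers, kernel size 5, stride 2, padding 2 (kernel // 2),
# single channel, and a fixed averaging kernel instead of learned weights.

KERNEL_SIZE = 5
STRIDE = 2
PADDING = KERNEL_SIZE // 2


def conv1d(signal: list[float], kernel: list[float], stride: int, padding: int) -> list[float]:
    """Single-channel 1-D convolution (cross-correlation) with zero padding."""
    padded = [0.0] * padding + signal + [0.0] * padding
    out = []
    for start in range(0, len(padded) - len(kernel) + 1, stride):
        window = padded[start:start + len(kernel)]
        out.append(sum(w * x for w, x in zip(kernel, window)))
    return out


def output_length(length: int, kernel_size: int = KERNEL_SIZE,
                  stride: int = STRIDE, padding: int = PADDING) -> int:
    """Standard convolution output-length formula."""
    return (length + 2 * padding - kernel_size) // stride + 1


def downsample(frames: list[float], num_layers: int = 2) -> list[float]:
    """Apply num_layers strided convolutions with a toy averaging kernel."""
    kernel = [1.0 / KERNEL_SIZE] * KERNEL_SIZE
    for _ in range(num_layers):
        frames = conv1d(frames, kernel, STRIDE, PADDING)
    return frames


if __name__ == "__main__":
    # ~10 s of audio at an assumed 10 ms frame shift -> 1,000 feature frames.
    features = [float(i % 7) for i in range(1000)]
    reduced = downsample(features)
    print(len(features), "->", len(reduced))  # 1000 -> 250
```

Halving the length twice means the Transformer encoder attends over about a quarter as many positions, which matters because self-attention cost grows with sequence length.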

Read more about Fairseq S2T - https://arxiv.org/abs/2010.05171

AudioCraft

Creators: Meta

First published: August 2023

AudioCraft is a suite of text-to-audio and music models.

It includes MusicGen, which was trained on Meta-owned and specifically licensed music and generates music from text prompts; AudioGen, which was trained on public sound effects and generates sound effects from text prompts; and EnCodec, a neural audio codec that enables “higher quality” music generation with fewer artifacts.

All the models are open-sourced for researchers and practitioners. Access the AudioCraft code on GitHub. Try demos of MusicGen or listen to AudioGen samples. Read the EnCodec paper and download the code.

Read more about AudioCraft on AI Business - https://aibusiness.com/nlp/meta-open-sources-audiocraft-its-text-to-audio-or-music-models


AI audio generation applications & tools

AssemblyAI

AssemblyAI offers production-ready AI models for speech recognition, speech summarization, and more. Among its offerings is LeMUR, which helps businesses build large language model apps on spoken data.

Speechmatics

Speech recognition startup Speechmatics offers AI-powered transcription and translation spanning almost 50 languages. Its API lets users send audio and receive both a transcription and a translation, helping businesses open their products or services to wider audiences. Video game developer Ubisoft, consulting giant Deloitte and chipmaker Nvidia are listed as partners on its website.

AWS Transcribe

Amazon Transcribe is an automatic speech recognition service from AWS designed to help developers add speech-to-text capability to their applications.

Google Speech-to-Text

Speech-to-Text from Google Cloud can be integrated into applications – allowing users to send audio and receive a text transcription.

Kaldi

Kaldi is the least flashy entry on this list: an open-source speech recognition toolkit found on GitHub and designed for speech recognition researchers.

Wav2Letter

Meta’s Wav2Letter is an automatic speech recognition toolkit that researchers and developers can use to transcribe speech.

Otter.ai

Otter.ai is an AI-powered transcription platform. Users can upload or record audio and Otter will generate a text transcription.

Trint

Trint is an AI transcription service that generates transcriptions from audio inputs. Among its co-founders is former ABC journalist Jeffrey Kofman, who serves as CEO of Trint.

Audio-to-audio models

SepFormer

Creators:

First published: October 2020

SepFormer is a Transformer-based neural network for speech separation. It can separate the speech of multiple overlapping speakers in a single recording.

Read more about SepFormer - https://arxiv.org/abs/2010.13154

AudioLM

Creators: Google

First published: September 2022

AudioLM is an audio generation model.

It can generate semantically plausible continuations of existing spoken input while maintaining the speaker's identity.

Read more about AudioLM on AI Business - https://aibusiness.com/nlp/google-combines-palm-language-model-with-audio-generation-for-translation-tasks
