Cut Inferencing Costs with New Software Method from Vicuna Developers

New 'lookahead decoding' method could reduce inferencing costs by simplifying prediction processes

At a Glance

- A new decoding method from the team that built Vicuna could cut inferencing latency by 1.5x to 2.3x, according to its developers.

Bringing down inferencing costs is a growing focus for businesses using large language models. Nvidia has taken a hardware-focused route with its new H200 chips, which it says will cut costs in half.

Now, the team behind the Vicuna AI model has come up with a software-based approach, applied when the model generates text, that it believes could greatly reduce inferencing costs and latency.

Dubbed lookahead decoding, the method focuses on decreasing the number of decoding steps. Because fewer steps are needed to generate a response, it reduces not only latency but also running costs.

LMSYS Org (Large Model Systems Organization), an open research group founded by academics, developed the approach to make better use of GPU parallel processing power. It argues that autoregressive decoding, which models such as GPT-4 and Llama 2 rely on, is slow and difficult to optimize.

Lookahead decoding lets the model generate multiple tokens in a single decoding step instead of just one, which reduces the total number of steps needed. Essentially, by producing more tokens per step, it cuts out steps and so shortens generation time.
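To make the step count concrete, here is a minimal sketch of standard greedy (autoregressive) decoding. It assumes a hypothetical function, `toy_next_token`, standing in for a real model's forward pass; it is an illustration, not LMSYS Org's code. Each decoding step yields exactly one token, so N new tokens cost N sequential steps, and that per-step count is what lookahead decoding tries to shrink.

```python
from typing import Callable, List, Tuple


def toy_next_token(prefix: List[int]) -> int:
    """Hypothetical stand-in for a model's greedy next-token choice.

    A real LLM would run a full forward pass over `prefix` here.
    """
    return (sum(prefix) * 31 + len(prefix)) % 50


def autoregressive_decode(
    model: Callable[[List[int]], int], prompt: List[int], num_new: int
) -> Tuple[List[int], int]:
    """Standard greedy decoding: exactly one new token per decoding step."""
    tokens = list(prompt)
    steps = 0
    for _ in range(num_new):
        # Each step must wait for the previous token, so steps run sequentially.
        tokens.append(model(tokens))
        steps += 1
    return tokens[len(prompt):], steps


out, steps = autoregressive_decode(toy_next_token, [1, 2, 3], 8)
print(f"{len(out)} tokens in {steps} decoding steps")  # 8 tokens in 8 decoding steps
```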

“Lookahead decoding provides a substantial reduction of latency, ranging from 1.5x to 2.3x with negligible computation overhead,” an LMSYS Org blog post reads. “More importantly, it allows one to trade computation for latency reduction, albeit this comes with diminishing returns.”

Lookahead decoding on LLaMA-2-Chat 7B generation (right) compared with standard decoding (left). Tokens in blue are generated in parallel in a single decoding step. Credit: LMSYS Org

Like baking a cake

The Jacobi iteration method is a classic solver for systems of non-linear equations. In large language model inferencing, it can be used to generate tokens in parallel without a draft model.
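The sketch below shows plain Jacobi decoding under a simplifying assumption: a hypothetical `toy_next_token` function replaces the model, and the loop over positions stands in for what a real implementation would compute in one batched forward pass. Starting from an arbitrary guess, every position is re-predicted from the previous iteration's tokens until nothing changes; the fixed point is the same sequence greedy decoding would produce.

```python
from typing import Callable, List, Tuple

Model = Callable[[List[int]], int]


def toy_next_token(prefix: List[int]) -> int:
    """Hypothetical stand-in for a model's greedy next-token choice."""
    return (sum(prefix) * 31 + len(prefix)) % 50


def greedy_decode(model: Model, prompt: List[int], num_new: int) -> List[int]:
    """Reference autoregressive output, one token per step."""
    tokens = list(prompt)
    for _ in range(num_new):
        tokens.append(model(tokens))
    return tokens[len(prompt):]


def jacobi_decode(
    model: Model,
    prompt: List[int],
    num_new: int,
    init_token: int = 0,
    max_iters: int = 100,
) -> Tuple[List[int], int]:
    """Jacobi (fixed-point) decoding of `num_new` tokens.

    Converges in at most num_new + 1 iterations, so max_iters only guards
    against misuse with very long outputs.
    """
    guess = [init_token] * num_new
    for iteration in range(1, max_iters + 1):
        # Parallel update: position i only sees the *old* guesses before it.
        new_guess = [model(prompt + guess[:i]) for i in range(num_new)]
        if new_guess == guess:
            # Fixed point: each token equals the model's prediction given the
            # tokens before it, i.e. the same output as greedy decoding.
            return guess, iteration
        guess = new_guess
    return guess, max_iters


tokens, iters = jacobi_decode(toy_next_token, [1, 2, 3], 8)
print(tokens == greedy_decode(toy_next_token, [1, 2, 3], 8), iters)
```

Because each toy token depends on the entire prefix, a wrong token early in the guess typically makes everything after it wrong too, so the loop usually locks in only about one new correct token per iteration. This is the weakness of vanilla Jacobi decoding that LMSYS Org points to.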

LMSYS Org argues, however, that in practice vanilla Jacobi decoding rarely produces more than one correct token per step, because a model seldom predicts a correct token when the tokens before it are wrong: “Consequently, very few iterations successfully achieve the simultaneous decoding and correct positioning of multiple tokens. This defeats the fundamental goal of parallel decoding.”

Lookahead decoding improves on the traditional method by decoding each new token from the values generated in previous Jacobi iterations and keeping the resulting n-grams in a cache.

“While lookahead decoding performs parallel decoding using Jacobi iterations for future tokens, it also concurrently verifies promising n-grams from the cache. Accepting an N-gram allows us to advance N tokens in one step, significantly accelerating the decoding process.”
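The verification half of that quote can be sketched as follows. This is a simplified illustration, not the LMSYS Org implementation: `pool` is a hypothetical plain dictionary mapping an n-gram's first token to the tokens that followed it, filled by hand here rather than from Jacobi trajectories, and candidates are checked one at a time instead of in a single batched pass. A cached n-gram is accepted only as far as it agrees with what greedy decoding would produce, so the output is unchanged; only the number of steps drops.

```python
from typing import Callable, Dict, List

Model = Callable[[List[int]], int]


def toy_next_token(prefix: List[int]) -> int:
    """Hypothetical stand-in for a model's greedy next-token choice."""
    return (sum(prefix) * 31 + len(prefix)) % 50


def greedy_decode(model: Model, prompt: List[int], num_new: int) -> List[int]:
    tokens = list(prompt)
    for _ in range(num_new):
        tokens.append(model(tokens))
    return tokens[len(prompt):]


def lookahead_step(
    model: Model, tokens: List[int], pool: Dict[int, List[List[int]]]
) -> List[int]:
    """One simplified verification step over a hypothetical n-gram cache.

    Only n-grams whose first token matches the last token so far are
    candidates; the longest prefix that greedy decoding agrees with wins.
    """
    best: List[int] = []
    for cand in pool.get(tokens[-1], []):
        accepted: List[int] = []
        for tok in cand:
            # Keep a cached token only if greedy decoding would produce it too.
            if model(tokens + accepted) != tok:
                break
            accepted.append(tok)
        if len(accepted) > len(best):
            best = accepted
    # In this sketch the check also yields the model's own next token, so a
    # step always advances at least one token and a fully accepted n-gram
    # (key token plus n - 1 cached tokens) advances n tokens.
    return best + [model(tokens + best)]


prompt = [1, 2, 3]
truth = greedy_decode(toy_next_token, prompt, 4)
pool = {prompt[-1]: [truth[:3], [(truth[0] + 1) % 50, 0, 0]]}
print(lookahead_step(toy_next_token, prompt, pool) == truth)  # True
print(len(lookahead_step(toy_next_token, prompt, {})))        # 1
```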

In simple terms, it is like baking a cake. Making each layer one by one is slow, so instead you prepare several layers at once, drawing on experience from earlier cakes (or earlier iterations of tokens) to predict how they should turn out.

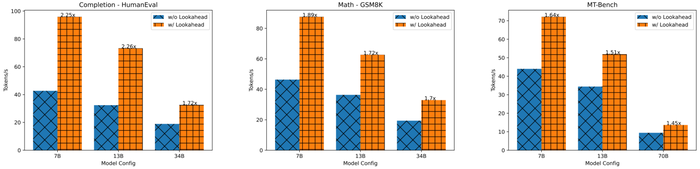

Tests: up to 2x lower latency

To test the new approach, the Vicuna developers used two Llama model families, LLaMA-2-Chat and CodeLLaMA, to measure how much it sped up inferencing.

The team evaluated 7B, 13B and 33B-parameter versions of each model on a single Nvidia A100 GPU, and a 70B version on two A100 GPUs.

They found that lookahead decoding sped up inferencing across benchmarks including MT-Bench, HumanEval and GSM8K. LLaMA-2-Chat achieved a 1.5x speedup on MT-Bench, CodeLLaMA saw a 2x latency reduction on HumanEval, and CodeLLaMA-Instruct solved math problems from GSM8K with a 1.8x latency reduction.
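Speedup and latency reduction describe the same ratio from two directions. Assuming each reported figure is a ratio of wall-clock generation times, a speedup of s leaves 1/s of the original latency; this small sketch applies that to the numbers above.

```python
# Speedup factors as reported by LMSYS Org (taken from the text above).
reported = {
    "LLaMA-2-Chat on MT-Bench": 1.5,
    "CodeLLaMA on HumanEval": 2.0,
    "CodeLLaMA-Instruct on GSM8K": 1.8,
}


def remaining_latency(speedup: float) -> float:
    """Fraction of baseline latency left after a given speedup factor."""
    return 1.0 / speedup


for name, s in reported.items():
    frac = remaining_latency(s)
    print(f"{name}: {s}x faster -> {frac:.0%} of baseline latency "
          f"({1 - frac:.0%} reduction)")

# LLaMA-2-Chat on MT-Bench: 1.5x faster -> 67% of baseline latency (33% reduction)
# CodeLLaMA on HumanEval: 2.0x faster -> 50% of baseline latency (50% reduction)
# CodeLLaMA-Instruct on GSM8K: 1.8x faster -> 56% of baseline latency (44% reduction)
```

In other words, a "2x latency reduction" means the response arrives in half the time, not that latency drops to zero.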

Credit: LMSYS Org

How to access lookahead decoding

Lookahead decoding is available via LMSYS Org’s GitHub page.

LMSYS Org confirmed to AI Business that lookahead decoding is available under the Apache 2.0 license, meaning developers can use it when building commercial models and systems.
