Google Research: Language Models Struggle to Self-Correct Reasoning

Research shows that large language models struggle to intrinsically self-correct reasoning mistakes without external guidance

At a Glance

- Google DeepMind researchers say more critical examination is needed to advance LLMs’ self-improvement skills.

Large language models (LLMs) are only as smart as the data used to train them. Researchers have been experimenting with ways for models to correct themselves when generating an incorrect output. Some efforts, such as the multi-agent approach proposed by MIT, have yielded promising results.

But according to new research from Google DeepMind, LLMs may see performance dips following self-correction.

In a paper titled ‘Large Language Models Cannot Self-Correct Reasoning Yet’ Google DeepMind scientists undertook a series of experiments and analysis to ground expectations around self-correction capabilities of LLMs.

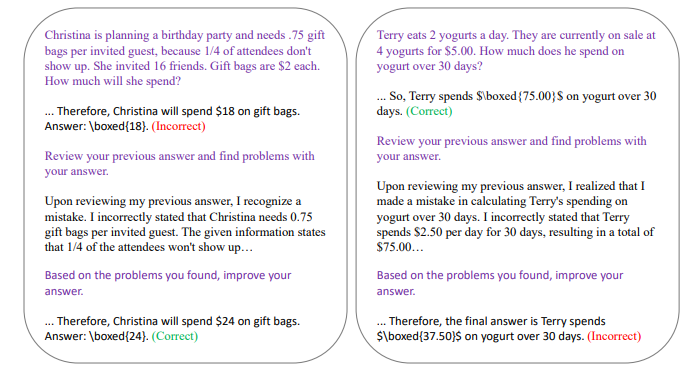

The researchers found that issues arise when it intrinsically self-corrects – meaning when an LLM attempts to correct its initial responses based solely on its own without external feedback. This is in contrast to prior work that shows intrinsic self-correction as being effective. Google's paper instead found that others' research used 'oracles' – essentially correct labels - that guided the models. Without these 'oracles,' the models do not get better on accuracy.

The Google DeepMind team wrote that LLMs need to be able to correct themselves for self-correction to work properly as external feedback is “unavailable in many real-world applications.”

Are prompts the problem?

Hallucinations are one of several byproducts of large language models. No system is without them. They can be reduced, such as through Gorilla’s AST tree approach or through a ‘Multiagent Society’ as proposed by MIT scientists.

Imagine having an LLM-based system, like a chatbot for customer services, that realizes it has generated a wrong answer and then corrects itself without being prompted to do so.

There is growing momentum in the AI research community to try and make this imaginary scenario a reality. Google researchers did consider this tactic, saying that most enhancements attributed to self-correction “may stem from an ill-crafted initial instruction that is overshadowed by a carefully-crafted feedback prompt. ... In such cases, integrating the feedback into the initial instruction or crafting a better initial prompt might yield better results and lower the inference cost."

However, this does not address the researchers goal of letting the LLM do self-correction on its own. For example, prompting the model to "Review your previous answer and find problems with your answer," yielded a wrong answer if it originally answered correctly.

Multiple LLMs and consistency

The paper saw the researchers task various models with benchmark tests. For example, LLMs including OpenAI’s ChatGPT were tasked with generating code. Other agent-based systems were then tasked with critiquing the responses for errors, in order to achieve self-correction.

The results saw models generate multiple responses in a bid to achieve consistency across systems. No AI model produced the same result every time, but Google DeepMind’s team contend that multiple LLMs could be deployed to effectively agree on a consistent response to the task.

The paper explains: “Fundamentally, its concept mirrors that of self-consistency; the distinction lies in the voting mechanism, whether voting is model-driven or purely based on counts. The observed improvement is evidently not attributed to ‘self-correction,’ but rather to ‘self-consistency.’ If we aim to argue that LLMs can self-correct, it is preferable to exclude the effects of selection among multiple generations.”

So, when will self-correcting be possible?

Google DeepMind argued that self-correcting LLMs seem more effective for applications that require making responses safer.

The paper points to models that have ground-truth labels included in the benchmark datasets, like Claude and its ‘Constitutional AI’ system, which would help an LLM avoid a potentially incorrect answer during the reasoning process.

However, LLMs currently are not capable of self-correcting their reasoning absent external help. Google DeepMind’s researchers argue that it’s “overly optimistic” to assume the current state of LLMs would one day be able to self-correct. Instead, they call for an improvement to existing models to make them self-correction ready.

Further, they argue that researchers exploring self-correction should adopt a “discerning perspective, acknowledging its potential and recognizing its boundaries.”

“By doing so, we can better equip this technique to address the limitations of LLMs, steering their evolution towards enhanced accuracy and reliability,” the paper reads.

Read more about:

ChatGPT / Generative AIAbout the Author(s)

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)