Meta’s SAM Could Revolutionize Computer Vision

The Segment Anything Model could enable AI systems to understand both images and text on a webpage.

At a Glance

- Meta’s Segment Anything Model (SAM) can more easily and accurately identify pixels belonging to an object in an image.

- Users can use the model’s object identification to cut objects out of an image while keeping the object’s resolution.

- Meta states that SAM can be used for computer vision use cases as well as in object detection for VR and AR headsets.

Meta’s researchers have developed an AI model that has the potential to revolutionize the way images are identified in computer vision.

The Segment Anything Model (SAM) can more quickly, easily and accurately identify which pixels in an image or video belong to an object. It can even learn by itself to identify an object for which it was not trained. This capability can be broadly applied to tasks ranging from image editing to analyzing of scientific imagery, among other uses.

In the future, SAM could become part of larger AI systems for a more “general multimodal understanding of the world” such as understanding the images and text content of a webpage, the researchers said.

SAM uses a combination of interactive and automatic segmentation of images to create a more general-use model. It is designed to be easy and flexible to use as it removes the need for users to collect their own segmentation data and fine-tune a model for a specific use case.

Further, Meta’s researchers claim that SAM could be used in AR and VR headsets, where a user’s gaze is the input for identifying objects.

SAM “allows a greater degree of expressivity than any project Meta has done before,” said Meta AI research vice president Joelle Pineau.

Meta released the model under a permissive open license. It is also releasing the dataset, specifically for researchers, with the company claiming it is the largest-ever segmentation dataset. The dataset can be accessed via GitHub to aid further research in computer vision use cases.

How it works

SAM can identify pixels belonging to an object in an image for removal or edits. Users can click on the object to add a mask and then cut it out of the image to create a separate object. Users can also use a natural language prompt to select the object they want the model to mask.

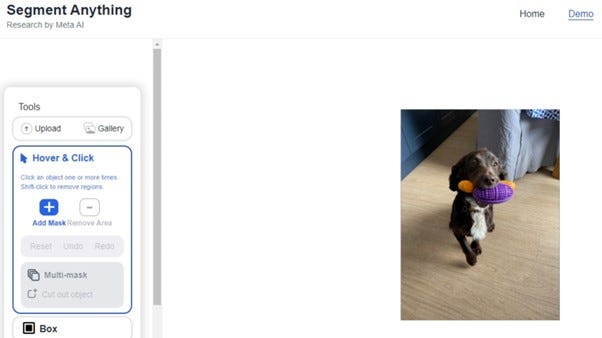

Let's say you want to cut out the image of Barney the dog with toys in tow, in the image below.

After choosing ‘add mask’ on the left, click on the object you want to remove from the picture. Here, two masks are required as the model first selects just the dog, but not the toys. Simply clicking again on the toys allows both objects to be selected.

The two diamonds represent where the user clicked to apply the mask.

Once your desired masks are in place, hit ‘cut out object’ and your desired image will be extracted. It can then be saved as a new image while maintaining the object's resolutions.

Meta explains the technical details: "Under the hood, an image encoder produces a one-time embedding for the image, while a lightweight encoder converts any prompt into an embedding vector in real-time. These two information sources are then combined in a lightweight decoder that predicts segmentation masks. After the image embedding is computed, SAM can produce a segment in just 50 milliseconds given any prompt in a web browser.”

Users can try SAM here, although it is a demo to be used for research and not for commercial purposes. Meta said that any images uploaded in the demo will be deleted at the end of the session.

The creation of Segment Anything marks another milestone in AI for Meta. Despite releasing language models like LLaMA and OPT-175B, the Facebook parent has largely focused its AI research in the past year on more image and video-focused models given its pivot to the metaverse.

Last September, it published the text-to-video tool Make-A-Video which can generate videos from text prompts. And before Make-A-Video, Meta released Make-A-Scene, a multimodal generative AI method capable of creating photorealistic illustrations from text inputs and freeform sketches.

Meta’s own chief AI scientist, Yann LeCun, has said that multimodal generative AI models like SAM will be increasingly used in the future.

About the Author(s)

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=700&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)

.jpg?width=300&auto=webp&quality=80&disable=upscale)