Sony Scientists Find Computer Vision Models Biased on Race, Skin Color

Study: AI models show racial bias; Sony researchers devise novel multidimensional skin color scale to promote fairness

At a Glance

- Researchers from Sony warn that racially biased computer vision datasets could be used to train generative AI models.

- They propose a new technique to assess skin color fairness using a ‘multidimensional’ approach.

AI researchers at Sony have uncovered extensive biases against people of color in computer vision datasets and models, and have proposed a new way to fairly assess skin color bias in systems.

In a research paper titled ‘Beyond Skin Tone: A Multidimensional Measure of Apparent Skin Color’, the Sony scientists propose a new multidimensional approach to measure skin color as a way to better assess biases and promote fairness.

The proposal adds a second dimension, the ‘hue angle,’ to the usual light-to-dark measure of skin color. Spanning red to yellow, this dimension captures the hue of skin in images alongside its tone.

The method allowed the team to uncover biases that were otherwise invisible and find additional layers of bias related to apparent skin color within computer vision datasets and models.

A multidimensional approach toward fairness

Bias related to skin tone isn’t a new area of research. There are several worrying instances of computer vision systems misclassifying people of color. In 2015, Google’s image-recognition algorithm auto-tagged pictures of Black people as ‘gorillas.’ And users who watched a 2020 video posted on Facebook, which showed Black men in altercations with white civilians and police officers, were sent a notification asking if they’d like to “keep seeing videos about primates.”

The newly proposed approach was inspired by the researcher’s personal experiences with invisibility, such as when buying cosmetics, and by the work of artist Angelica Dass, who took portraits of thousands of Londoners and matched their skin color with Pantone colors to illustrate the diversity of human skin color.

“One of the things that particularly resonated with me was the fact that Dass mentioned that ‘nobody is “black,” and absolutely nobody is “white”’ and that very different ethnic backgrounds sometimes wind up with the exact same Pantone color,” Sony AI Ethics Research Scientist and author William Thong wrote in a blog post.

“This is very similar to what we ended up finding when looking at skin color biases in image datasets and computer vision models.”

Sony’s researchers contend that other AI researchers have largely overlooked skin undertones when trying to capture the diversity of human skin color, relying instead on a single light-to-dark scale. They argue that computer vision systems could therefore still be biased against individuals who do not fit neatly along the light-to-dark spectrum.

They contend that expanding the skin tone scale into two dimensions, light vs. dark and warm (yellow-leaning) vs. cool (red-leaning), can help to identify far more biases.

To explore the concept, the researchers carried out fairness assessments of computer vision datasets and models, including the Twitter (X) open-source image cropping model.

Using the multidimensional approach, the researchers found that manipulating the skin color in images to have a lighter skin tone or a redder skin hue decreases accuracy for non-smiling individuals, who tend to be predicted as smiling.

They also found that darker skin tones or a yellower skin hue decrease the accuracy in smiling individuals.

The findings indicate a bias toward light skin tones when predicting whether an individual is female, and a bias toward light skin tones or red skin hues when predicting the presence of a smile, which illustrates the importance of a multidimensional measure of skin color.

“That means there's a lot of bias that's not actually being detected. It's really important that we do not just think about diversity in this very black and white or light and dark way, but that we think of it as expansively and as globally as possible,” said Alice Xiang, lead research scientist at Sony AI and global head of AI ethics for Sony Group Corporation.

How does it work?

The researchers’ approach uses the perceptual lightness L* as a quantitative measure of skin tone, and the hue angle H* as a quantitative measure of skin hue. Together these provide a multidimensional measure of apparent skin color in images.

The paper explains: “When L* is over 60, it corresponds to a light skin tone (and conversely for a dark skin tone). When H* is over 55°, it corresponds to a skin turning towards yellow (and conversely for a skin turning towards red).”

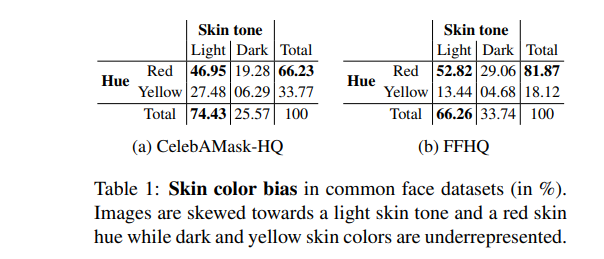
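To make the two scores concrete, here is a minimal Python sketch of how they could be computed for a single skin color. It is not the researchers' code. It assumes the color is given as 8-bit sRGB, uses the standard CIELAB conversion with a D65 white point, and takes the hue angle to be the standard CIELAB definition, atan2(b*, a*). The function names are hypothetical, and only the 60 and 55° thresholds come from the paper's description above.

```python
import math

# Assumption: D65 reference white, the usual white point for sRGB.
_WHITE = (0.95047, 1.0, 1.08883)


def _srgb_to_linear(c):
    """Undo the sRGB gamma curve for one channel in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t):
    """Nonlinear function used in the XYZ-to-CIELAB conversion."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def rgb_to_lab(r, g, b):
    """Convert an 8-bit sRGB color to CIELAB (L*, a*, b*)."""
    rl, gl, bl = (_srgb_to_linear(v / 255) for v in (r, g, b))
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    fx, fy, fz = (_f(v / w) for v, w in zip((x, y, z), _WHITE))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def skin_color_scores(r, g, b):
    """Return (perceptual lightness L*, hue angle in degrees)."""
    lightness, a_star, b_star = rgb_to_lab(r, g, b)
    hue = math.degrees(math.atan2(b_star, a_star))
    return lightness, hue


def classify(lightness, hue, tone_threshold=60.0, hue_threshold=55.0):
    """Apply the thresholds quoted from the paper (60 and 55 degrees)."""
    tone = "light" if lightness > tone_threshold else "dark"
    undertone = "yellow" if hue > hue_threshold else "red"
    return tone, undertone
```

In practice the scores would be computed over the skin pixels of a face image rather than one hand-picked color. How those pixels are selected and aggregated is not covered in this article, so the sketch leaves that step out.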

Using this method, the team evaluated computer vision datasets CelebAMask-HQ and FFHQ.

The team found that both datasets were skewed toward light skin tones. Measuring the hue angle further showed that both datasets are also skewed toward red skin hues.
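A dataset-level skew of this kind can be summarized by counting how many images fall on each side of the two thresholds. The Python sketch below assumes each image has already been reduced to a pair of scores (lightness, hue angle in degrees). The function name and the toy values are hypothetical and are not data from the paper.

```python
from collections import Counter


def skin_color_distribution(scores, tone_threshold=60.0, hue_threshold=55.0):
    """Return the share of samples in each (tone, hue) group."""
    counts = Counter(
        (
            "light" if lightness > tone_threshold else "dark",
            "yellow" if hue > hue_threshold else "red",
        )
        for lightness, hue in scores
    )
    total = sum(counts.values())
    if total == 0:
        return {}
    return {group: n / total for group, n in counts.items()}


# Made-up scores for illustration only, not measurements from any dataset.
toy_scores = [(72.0, 48.0), (68.5, 51.0), (65.0, 60.0), (45.0, 50.0)]
shares = skin_color_distribution(toy_scores)
```

A dataset with balanced skin color coverage would show comparable shares across all four groups, while a skewed one concentrates in one or two of them.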

The bias in these datasets could then be perpetuated by generative models like StyleGAN, which, when trained on them, could reproduce similar skin color biases in their outputs, the researchers warned.
