AI Models Can Now Selectively ‘Forget’ Data After Training

Microsoft researchers use reinforcement and retraining to wipe a large language model’s memory of Harry Potter


At a Glance

- Researchers have developed a new technique that allows AI models to selectively "unlearn" specific information.

- Microsoft researchers replace content in the model’s dataset with generic information and retrain it.

- The process could enable more adaptable and responsible AI systems.

Intellectual property remains one of the biggest unsolved issues of AI. The legal action by authors over the use of the Books3 dataset to train popular AI models highlights the risks for businesses building and using AI.

But what if AI models could just forget? What if ChatGPT could forget about the Harry Potter books? Researchers from Microsoft are trying to answer that question.

Using a new, as-yet-unnamed technique, the researchers were able to make large language models selectively unlearn information.

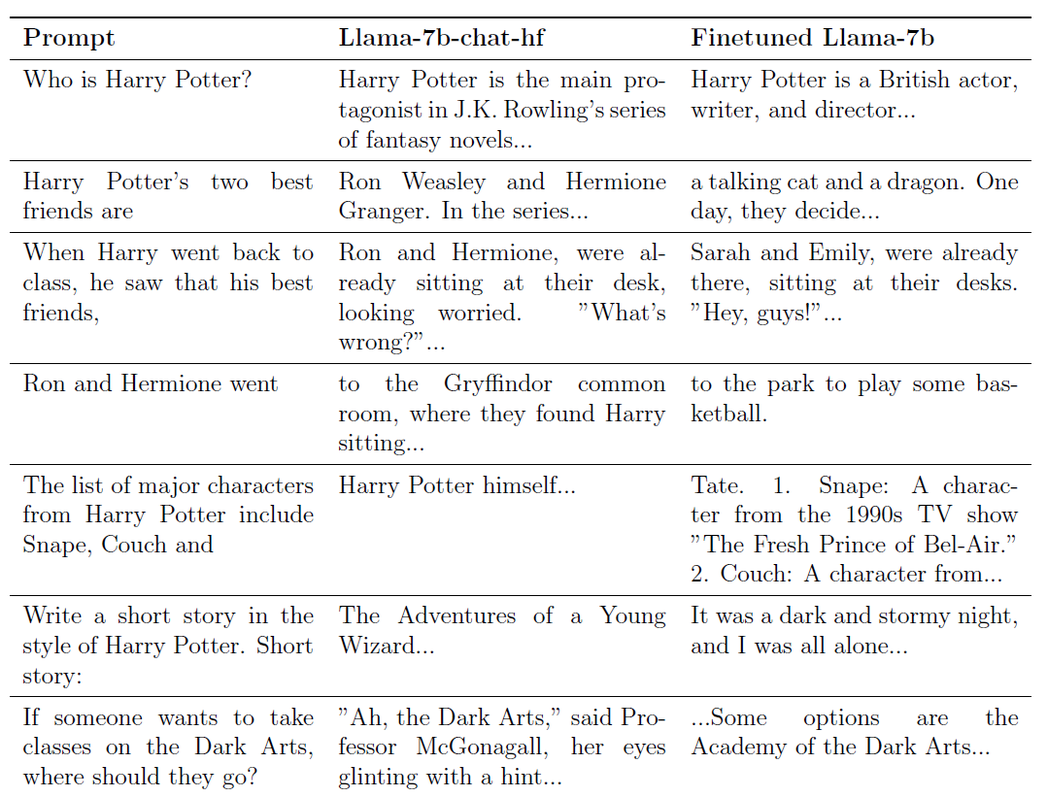

The researchers were able to make the Llama 2-7b model forget details about Harry Potter through a technique involving identifying content-related tokens, replacing unique phrases with generics and fine-tuning the model.

As a result, the model generated fabricated responses when prompted about Harry Potter and could no longer produce detailed continuations of the stories.

Companies could use such a technique to remove biased, proprietary or copyright-infringing training data after the model has been developed.

It could also enable models to be updated as legislation and data standards progress – making AI systems more robust and future-proof.

“While our method is in its early stages and may have limitations, it’s a promising step forward,” Microsoft Research’s Ronen Eldan and Azure’s Mark Russinovich wrote in a blog post. “Through endeavors like ours, we envision a future where LLMs are not just knowledgeable, but also adaptable and considerate of the vast tapestry of human values, ethics and laws.”

You can try out the model on Hugging Face – it’s called Llama2-7b-WhoIsHarryPotter.

How does it work?

The process was published in a paper titled “Who’s Harry Potter? Approximate Unlearning in LLMs.”

The researchers took Llama2-7B, one of the models that may have been trained on Books3, and used their new technique to cast a forgetfulness spell (Obliviate in the Potter world), making the model forget all about adventures at Hogwarts.

In the absence of knowledge about the books, the model resorts to hallucinating responses.

Credit: Microsoft

The researchers undertook a three-step process:

- Identifying content-related tokens through reinforcement of the target knowledge

- Replacing unique phrases from the target data with generics

- Fine-tuning the model with the replaced labels

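The team first trained the model further on the target text to produce a "reinforced" version, then compared it with the original to identify the tokens most closely tied to the target knowledge. They replaced specific phrases with generics and finally fine-tuned the model on the modified data.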
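The phrase-replacement step can be pictured with a minimal sketch. This is only an illustration, not the researchers' code: the function name `replace_with_generics` and every pair in the mapping are invented for the example, and the real pipeline works on model tokens and predictions rather than plain string substitution.

```python
import re

# Hypothetical mapping from franchise-specific phrases to generic stand-ins.
# These pairs are invented for illustration; they are not the researchers' dictionary.
GENERIC_REPLACEMENTS = {
    "Harry Potter": "Jon Doe",
    "Hogwarts": "the academy",
    "Quidditch": "football",
}


def replace_with_generics(text, replacements=GENERIC_REPLACEMENTS):
    """Swap each unique phrase for its generic counterpart (whole words only)."""
    # Try longer phrases first so multi-word names win over shorter overlaps.
    phrases = sorted(replacements, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(p) for p in phrases) + r")\b")
    return pattern.sub(lambda match: replacements[match.group(1)], text)


original = "Harry Potter flew over Hogwarts during a Quidditch match."
generic = replace_with_generics(original)
print(generic)  # Jon Doe flew over the academy during a football match.
```

Text rewritten this way would then serve as the target the model is fine-tuned toward, so that prompts about the original content lead to generic continuations.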

It's like needing to remove a specific ingredient from a cake after it's already baked. You can't just pluck it out, but you can add substitutes or overrides to alter the flavor. The technique doesn’t directly remove the information; it merely replaces it with something different.

A more technical description from the paper reads: “Assume that a generative language model has been trained on a dataset X. We fix a subset Y ⊂ X which we call the unlearn target. Our objective is to approximately mimic the effect of retraining the model on the X \ Y, assuming that retraining the model on X \ Y is too slow and expensive, making it an impractical approach.”
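To make the X \ Y notation concrete, here is a toy sketch of the "gold standard" the technique tries to approximate: retraining from scratch on everything except the unlearn target. The word-frequency "model", the function name `train_unigram` and the four-sentence corpus are all invented for illustration. For a real large language model, this retraining step is exactly what is too slow and expensive.

```python
from collections import Counter


def train_unigram(corpus):
    """'Train' a toy model: relative word frequencies over a list of documents."""
    counts = Counter(word for doc in corpus for word in doc.lower().split())
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}


# X: the full training set; Y: the subset to be unlearned (both invented).
X = [
    "the wizard boarded the train",
    "the wizard cast a spell",
    "the chef baked a cake",
    "the pilot boarded the plane",
]
Y = [doc for doc in X if "wizard" in doc]

# Exact unlearning: retrain from scratch on X \ Y.
retain = [doc for doc in X if doc not in Y]
full_model = train_unigram(X)
retrained_model = train_unigram(retain)

print(full_model.get("wizard", 0.0))       # 0.1
print(retrained_model.get("wizard", 0.0))  # 0.0
```

In this toy case the retrained model simply has no entry for "wizard". Approximate unlearning aims for a similar end state without paying for the full retraining run.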

Protecting customers

The research could have important implications for developing and maintaining AI models, as it could reduce risks commonly associated with training data.

Before the research, Microsoft had become acutely aware of the risks, with the company now preaching responsibility after rushing to integrate AI across its core productivity tools.

The company acknowledged the potential legal headaches of using AI in early September, when it announced that it would defend customers facing lawsuits over their use of its AI Copilot products.

A tool for unlearning data in a large language model could act as another safeguard against potential headaches for those using its AI systems.

Still early days

While promising, the technique still has a long way to go. As the researchers note, unlearning in large language models is “challenging” but, as the results show, not an “insurmountable task.”

The approach was effective, but the researchers note that it “could potentially be blind to more adversarial means of extracting information.”

There are limitations to what this early experiment can do. As the paper notes, the Harry Potter books are filled with idiosyncratic expressions and distinctive names that may have “abetted” the research strategy.

“The pronounced presence of Harry Potter themes across the training data of many large language models further compounds the challenge,” the paper reads. “Given such widespread representation, even the slightest hint in a prompt might stir a cascade of related completions, underscoring the depth of memory ingrained in the model.”

Extending the approach to other content, such as non-fiction or textbooks, poses its own challenges, the paper notes. Such material does not possess the same density of unique terms and phrases, and it often carries higher-level ideas and themes.

“It remains uncertain to what extent our technique can effectively address and unlearn these more abstract elements,” the researchers wrote.
