Falcon 180B: The Powerful Open Source AI Model … That Lacks Guardrails

Falcon 180B beats GPT 3.5, Llama 2 and rivals Google’s PaLM-2


At a Glance

- A new large version of the Falcon 40B model has dropped – it’s powerful but is susceptible to 'problematic' outputs.

The team behind the Falcon 40B open source model has released a souped-up version that is more than four times larger – but lacks alignment guardrails.

The Technology Innovation Institute (TII) published Falcon 180B on Hugging Face this week. It was trained on 3.5 trillion tokens from TII’s RefinedWeb dataset.

Falcon 180B achieves state-of-the-art results across natural language tasks – it topped the Hugging Face leaderboard for pre-trained open access models, scoring higher than proprietary models like Google's PaLM-2.

You can use Falcon 180B for commercial applications – but only under the very restrictive conditions set out in its license.

TII released a base version and a version fine-tuned on chat and instruction data.

You can try out the model for yourself via the Falcon Chat Demo Space.

One ‘slight’ issue with Falcon 180B

The TII team wants developers to further build upon the base Falcon 180B model and create “even better instruct/chat versions.”

The model does have one major flaw, however: it lacks alignment guardrails. Falcon 180B has not undergone any advanced tuning or alignment, so it can produce what TII calls “problematic” outputs – especially if prompted to do so.

The base version also lacks a prompt format – meaning on its own, the base Falcon 180B will not generate conversational responses.
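To illustrate what a prompt format adds, here is a minimal Python sketch of how a conversation might be flattened into a single text prompt before being sent to a chat-tuned model. The function name `build_chat_prompt` and the `System:`/`User:`/`Falcon:` speaker labels are assumptions made for illustration, not details from this article; check the model card for the exact format.

```python
def build_chat_prompt(turns, system=None):
    """Flatten a conversation into one text prompt.

    turns:  list of (speaker, text) pairs, e.g. ("User", "Hi").
    system: optional instruction placed before the conversation.
    The speaker labels used here are an assumed layout for illustration.
    """
    lines = []
    if system:
        lines.append(f"System: {system}")
    for speaker, text in turns:
        lines.append(f"{speaker}: {text}")
    # End on the assistant label so the model continues as the assistant.
    lines.append("Falcon:")
    return "\n".join(lines)


prompt = build_chat_prompt(
    [("User", "What is RefinedWeb?")],
    system="You are a helpful assistant.",
)
print(prompt)
```

A base model given only the raw question has no such cue about whose turn it is, which is why it tends to continue the text rather than answer it.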

How does Falcon 180B perform?

Falcon 180B outperformed Meta's Llama 2 and OpenAI’s GPT 3.5 on the MMLU benchmark test.

The model was about on par with Google's PaLM 2-Large on various tests including HellaSwag, WebQuestions and Winogrande.

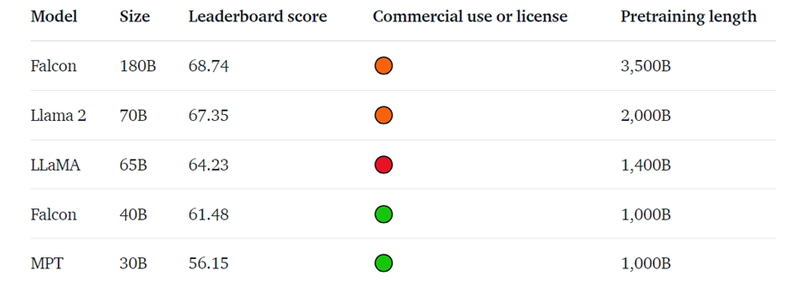

But it was on the Hugging Face Leaderboard that Falcon 180B shone – becoming the highest-scoring openly released pre-trained large language model with a score of 68.74. Meta's Llama 2 previously held the top spot with a score of 67.35.

The Hugging Face leaderboard. Credit: Hugging Face
