DeepMind's Gato: A step towards artificial general intelligence?

Most AI systems are taught one or two responsibilities. Google's DeepMind can juggle several.

DeepMind, the minds behind AlphaFold, has unveiled its latest project: Gato – a “general purpose” system that’s designed to take on several different tasks.

Most AI systems are taught one or two responsibilities. The Google-owned company has come up with a method by which an AI system can undertake a handful of different tasks.

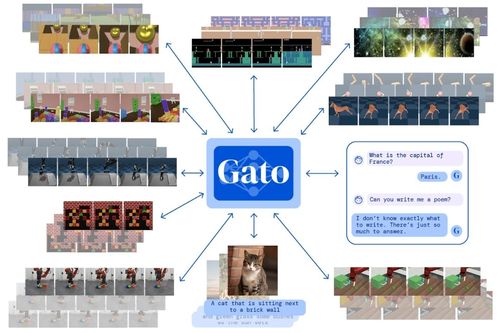

Gato – which is short for ‘A General Agent’ – can play Atari, caption images, chat, stack blocks with a real robot arm and more. The system can decide whether to output text, joint torques, button presses, or other tokens based on context.

The likes of Nando de Freitas, Yutian Chen and Ali Razavi were part of the team behind the system.

How it works

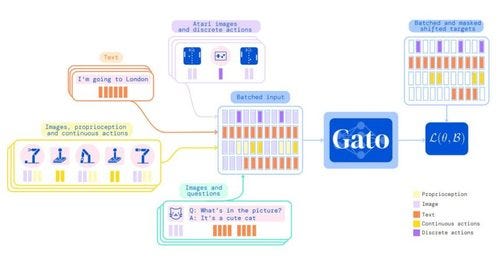

In a paper detailing Gato, the researchers sought to apply an approach similar to the one used in large-scale language modeling. Gato was trained on data covering different tasks and modalities. This data was serialized into a flat sequence of tokens, which was then batched and processed by a transformer neural network similar to a large language model.
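To give a rough sense of what serializing mixed data into one flat token sequence can look like, the sketch below turns a text instruction and continuous readings into integers that share a single vocabulary. The vocabulary size, bin count, value range and every function name here are assumptions made for illustration, not details reported in this article.

```python
from typing import List, Tuple

# Hypothetical vocabulary layout (assumed for this example only):
# text tokens live in [0, TEXT_VOCAB); discretized continuous values
# are shifted above that range so the two never collide.
TEXT_VOCAB = 32000
NUM_BINS = 1024


def tokenize_text(text: str) -> List[int]:
    """Toy tokenizer: one token per UTF-8 byte (stand-in for a subword tokenizer)."""
    return list(text.encode("utf-8"))


def tokenize_continuous(values: List[float], low: float = -1.0, high: float = 1.0) -> List[int]:
    """Clip each value to [low, high], bucket it uniformly, and offset past the text range."""
    tokens = []
    for value in values:
        clipped = min(max(value, low), high)
        bin_index = min(int((clipped - low) / (high - low) * NUM_BINS), NUM_BINS - 1)
        tokens.append(TEXT_VOCAB + bin_index)
    return tokens


def serialize_episode(
    instruction: str, steps: List[Tuple[List[float], List[float]]]
) -> Tuple[List[int], List[bool]]:
    """Flatten an instruction plus (observation, action) pairs into one sequence.

    Also returns one flag per token: True where the token belongs to an action.
    """
    sequence = tokenize_text(instruction)
    is_action = [False] * len(sequence)
    for observation, action in steps:
        obs_tokens = tokenize_continuous(observation)
        act_tokens = tokenize_continuous(action)
        sequence += obs_tokens + act_tokens
        is_action += [False] * len(obs_tokens) + [True] * len(act_tokens)
    return sequence, is_action
```

The per-token flags are kept alongside the sequence because a training loop needs to know which positions are actions and which are observations.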

The loss is masked so that Gato only predicts action and text targets, the paper reads.
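A masked loss of this kind can be sketched in a few lines. The example below is a minimal, assumed version rather than DeepMind's code: it computes cross-entropy per position and averages only over positions flagged as targets. The function name and argument layout are hypothetical.

```python
import math
from typing import List, Sequence


def masked_cross_entropy(
    logits: Sequence[Sequence[float]],
    targets: Sequence[int],
    mask: Sequence[bool],
) -> float:
    """Average cross-entropy over positions where mask is True.

    logits[i] holds the unnormalized scores for position i,
    targets[i] is the correct token id at that position, and
    mask[i] is True only for tokens the model should learn to predict.
    """
    total, count = 0.0, 0
    for row, target, keep in zip(logits, targets, mask):
        if not keep:
            continue  # masked positions contribute nothing to the loss
        peak = max(row)
        # Numerically stable log-sum-exp for the softmax normalizer.
        log_z = peak + math.log(sum(math.exp(x - peak) for x in row))
        total += log_z - row[target]
        count += 1
    return total / count if count else 0.0
```

With a mask that is True only on action and text tokens, observation tokens still appear in the input sequence as context, but errors in predicting them are never penalized.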

Upon deployment, a prompt is tokenized, which forms an initial sequence. The environment yields the first observation, which is likewise tokenized and appended to the sequence. Gato then samples the action vector autoregressively, one token at a time.

Once all tokens comprising the action vector have been sampled, the action is decoded and sent to the environment, which steps and yields a new observation. Then the procedure repeats. The DeepMind researchers note that the model “always sees all previous observations and actions within its context window of 1024 tokens.”
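The deployment procedure described above can be sketched as a simple loop. This is an illustrative outline under stated assumptions, not the paper's implementation: `env_reset`, `env_step` and `sample_next_token` are hypothetical callables standing in for a tokenizing environment wrapper and the trained model, and every action is assumed to have a fixed token length.

```python
from typing import Callable, List, Tuple

CONTEXT_WINDOW = 1024  # context length quoted from the paper


def run_episode(
    prompt_tokens: List[int],
    env_reset: Callable[[], List[int]],
    env_step: Callable[[List[int]], Tuple[List[int], bool]],
    sample_next_token: Callable[[List[int]], int],
    action_len: int,
    max_steps: int,
) -> List[List[int]]:
    """Roll out one episode and return the sampled action token vectors."""
    sequence = list(prompt_tokens)   # the tokenized prompt forms the initial sequence
    sequence += env_reset()          # first tokenized observation is appended
    actions = []
    for _ in range(max_steps):
        action = []
        for _ in range(action_len):
            # Sample one token at a time, conditioning on the most recent tokens only.
            token = sample_next_token(sequence[-CONTEXT_WINDOW:])
            sequence.append(token)
            action.append(token)
        actions.append(action)
        # The completed action goes to the environment, which steps once.
        observation, done = env_step(action)
        if done:
            break
        sequence += observation      # new observation joins the sequence; repeat
    return actions
```

Slicing the sequence to its last 1024 tokens is one simple way to respect a fixed context window. Once an episode grows past that length, the earliest tokens, including the prompt, fall out of view.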

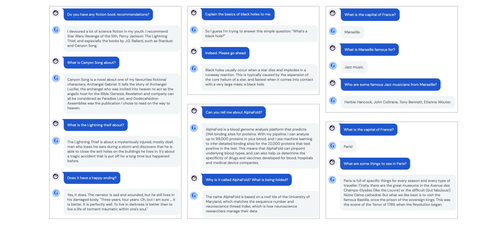

What is the capital of France?

The system itself is built on a sizable dataset comprising data from both simulated and real-world environments. It was also built using several natural language and image datasets.

While Gato can do a host of tasks compared with other AI systems, it appears to struggle to get everything right.

In their 40-page paper, the DeepMind researchers showcased examples of some of the tasks it performs. In terms of dialogue, the system provides relevant responses, but they are “often superficial or factually incorrect.”

For example, when asked what the capital of France is, the system replies ‘Marseille’ on one occasion and ‘Paris’ on another. The research team suggests that such inaccuracies “could likely be improved with further scaling.”

Gato is also limited by memory constraints, which hamper its ability to adapt to a new task by conditioning on a prompt, such as demonstrations of the desired behavior.

“Due to accelerator memory constraints and the extremely long sequence lengths of tokenized demonstrations, the maximum context length possible does not allow the agent to attend over an informative-enough context,” the paper reads.

Instead, the researchers opted to fine-tune the agent’s parameters on a limited number of demonstrations of a single task and then evaluate the fine-tuned model’s performance in the environment.

Judge me by my size, do you?

In terms of size, DeepMind’s study focused on three Gato models with 79 million, 364 million and 1.18 billion parameters.

The smallest model was found to perform the worst, with the results suggesting that greater capacity allows the model to use representations learned from the diverse training data at test time.

Though sizable, 364 million and 1.18 billion are dwarfed in terms of size by the language models that inspired Gato in the first place.

Arguably the most famous language model, OpenAI’s GPT-3, weighs in at 175 billion parameters. On par with GPT-3 in terms of size is the newly released OPT-175B from Meta.

Both, however, are surpassed by the 204-billion-parameter HyperClova model from Naver; DeepMind's Gopher, which has 280 billion parameters; and MT-NLG, or Megatron-Turing NLG, from Microsoft and Nvidia, which boasts 530 billion.
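To put the gap in perspective, the short script below divides each quoted parameter count by that of the largest Gato model. The figures are the ones cited in this article; the dictionary keys and function name are simply labels chosen for this example.

```python
from typing import Dict

# Parameter counts as quoted in the article, in billions.
GATO_LARGEST = 1.18
LANGUAGE_MODELS = {
    "GPT-3": 175,
    "OPT-175B": 175,
    "HyperClova": 204,
    "Gopher": 280,
    "Megatron (Microsoft/Nvidia)": 530,
}


def size_ratios(reference: float, models: Dict[str, float]) -> Dict[str, float]:
    """Return how many times larger each model is than the reference model."""
    return {name: params / reference for name, params in models.items()}


if __name__ == "__main__":
    for name, ratio in size_ratios(GATO_LARGEST, LANGUAGE_MODELS).items():
        print(f"{name}: roughly {ratio:.0f}x the largest Gato model")
```

By this measure, even the largest Gato model is more than a hundred times smaller than GPT-3.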

The world’s largest language model is WuDao 2.0, which Chinese researchers claim has 1.75 trillion parameters. Google Brain previously developed an AI language model with 1.6 trillion parameters, using what it called Switch Transformers. However, neither of these is a monolithic transformer model, preventing a meaningful ‘apples-to-apples’ comparison.
