Web Apps Powered by On-Device AI? Ex-Google Engineer Shows It's Possible

Former Google Photos engineer proposes novel way of building AI-powered web apps from your laptop

At a Glance

- Build a local AI web app capable of ingesting documents from your device, not the cloud, with this new development method.

Web apps that use large language models typically need to connect to cloud servers. A former Google engineer has proposed a method for running the AI locally instead.

Jacob Lee previously worked on Google Photos and now helps maintain the popular LangChain framework. In a blog post for Ollama, Lee proposed a new way for apps to access large language models that could reduce costs, since connecting to cloud servers can be expensive. It also eases privacy concerns, because the data never leaves your device.

Using a combination of open source tools, Lee built a web app that lets you have a conversation with a document. To try the demo, install the Ollama desktop app and run a set of commands so that the model is served locally. You can then ask a chatbot about a report or paper in natural language, right from your PC.

You will also need a Mistral instance running via Ollama on your local machine. A full explainer on running the demo can be found in Lee's blog post.

How does it work?

By using the likes of LangChain, Ollama and Transformers.js, Lee was able to develop the product more quickly and cheaply than with a cloud-based approach. These tools run in the browser or, in Ollama's case, as a desktop app on the same machine.

The default model in the demo system is Llama 2 from Meta. However, Mistral 7B was released after Lee's initial testing. That model was designed to be a cheaper alternative to GPT-4, and Lee was able to run it on his 16GB M2 MacBook Pro.

Credit: Ollama

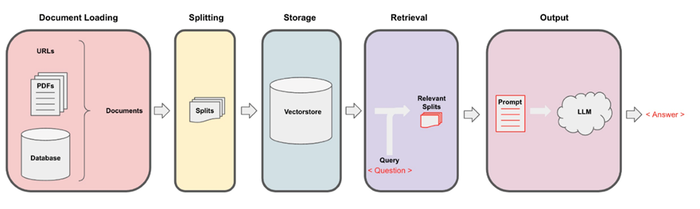

The concept works in five technical steps:

1. Data Ingestion - Load documents such as a PDF. Use LangChain to split the document into chunks. Create vector embeddings for each chunk using Transformers.js. Store chunks and vectors in the Voy vector store database.

2. Retrieval - Take the user's text question. Search the vector store to find chunks that are most similar to the question.

3. Generation - Pass question and retrieved chunks to Ollama local AI. Ollama runs the Mistral AI model locally, which then generates an answer based on retrieved data.

4. Dereferencing - Rephrase any follow-up question as a standalone question first, so it makes sense without the earlier conversation. Then redo the retrieval and generation steps.

5. Exposing local AI - The Ollama tool exposes the local Mistral model to the web app. The app requests access and then uses the model for generation.
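The ingestion and retrieval steps can be illustrated with a minimal TypeScript sketch. This is not Lee's code: `toyEmbed` is a hypothetical word-count stand-in for the Transformers.js embeddings, a plain array stands in for the Voy vector store, and `splitIntoChunks`, `ingest` and `retrieve` are illustrative names. Only the overall shape (chunk, embed, store, then rank by similarity) follows the steps above.

```typescript
// A sparse "embedding": word -> count. Hypothetical stand-in for a real model.
type Vector = Record<string, number>;

interface StoredChunk {
  text: string;
  vector: Vector;
}

// Split text into chunks of at most `size` words.
function splitIntoChunks(text: string, size: number): string[] {
  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const chunks: string[] = [];
  for (let i = 0; i < words.length; i += size) {
    chunks.push(words.slice(i, i + size).join(" "));
  }
  return chunks;
}

// Toy embedding: count lowercase alphanumeric words.
function toyEmbed(text: string): Vector {
  const vector: Vector = {};
  const words = text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
  for (const word of words) {
    vector[word] = (vector[word] ?? 0) + 1;
  }
  return vector;
}

// Cosine similarity between two sparse vectors (0 when either is empty).
function cosineSimilarity(a: Vector, b: Vector): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (const key of Object.keys(a)) {
    dot += a[key] * (b[key] ?? 0);
    normA += a[key] * a[key];
  }
  for (const key of Object.keys(b)) {
    normB += b[key] * b[key];
  }
  const denom = Math.sqrt(normA) * Math.sqrt(normB);
  return denom === 0 ? 0 : dot / denom;
}

// Step 1: chunk the document and store each chunk with its vector.
function ingest(documentText: string, chunkSize: number): StoredChunk[] {
  return splitIntoChunks(documentText, chunkSize).map((text) => ({
    text,
    vector: toyEmbed(text),
  }));
}

// Step 2: return the k chunks most similar to the question.
function retrieve(store: StoredChunk[], question: string, k: number): string[] {
  const queryVector = toyEmbed(question);
  return store
    .map((chunk) => ({
      text: chunk.text,
      score: cosineSimilarity(queryVector, chunk.vector),
    }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((result) => result.text);
}
```

For example, after `ingest(text, 4)` on a short passage, `retrieve(store, "Why do dogs bark?", 1)` would return the chunk that shares the most words with the question. A real embedding model would match on meaning rather than exact words.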

Lee built a local AI-powered web app by ingesting documents into a vector store for retrieval, then exposed a locally running large language model via Ollama to generate answers to user questions based on the retrieved relevant data.
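The generation side can be sketched in the same spirit. The example below assumes Ollama's HTTP API is listening on its default address, `http://localhost:11434`, and uses its `/api/generate` endpoint with `stream: false`. The prompt wording, the function names and the injected `fetchFn` parameter are assumptions made for illustration, not taken from Lee's app, which uses LangChain for this step.

```typescript
// Request body for Ollama's /api/generate endpoint.
interface GenerateRequest {
  model: string;
  prompt: string;
  stream: boolean;
}

// Minimal fetch-like signature so a fake can be injected in tests.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Follow-ups: ask the model to rewrite the question so it stands alone.
// The prompt wording is an assumption.
function buildStandalonePrompt(history: string[], followUp: string): string {
  return (
    "Rewrite the follow-up as a standalone question.\n\n" +
    `Conversation:\n${history.join("\n")}\n\n` +
    `Follow-up: ${followUp}\nStandalone question:`
  );
}

// Generation: ground the model in the retrieved chunks.
// The prompt wording is an assumption.
function buildAnswerPrompt(question: string, chunks: string[]): string {
  const context = chunks.map((chunk, i) => `[${i + 1}] ${chunk}`).join("\n");
  return (
    "Answer the question using only the context below.\n\n" +
    `Context:\n${context}\n\nQuestion: ${question}\nAnswer:`
  );
}

function buildGenerateRequest(prompt: string, model: string = "mistral"): GenerateRequest {
  // stream: false asks Ollama for a single JSON object, not a token stream.
  return { model, prompt, stream: false };
}

// Send a prompt to the locally running Ollama server and return its text.
async function generate(
  prompt: string,
  fetchFn: FetchLike,
  baseUrl: string = "http://localhost:11434"
): Promise<string> {
  const res = await fetchFn(`${baseUrl}/api/generate`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildGenerateRequest(prompt)),
  });
  if (!res.ok) {
    throw new Error(`Ollama returned HTTP ${res.status}`);
  }
  const data = (await res.json()) as { response?: string };
  return data.response ?? "";
}
```

In a browser or recent Node.js runtime, the global `fetch` can be passed as `fetchFn`. A follow-up question would first go through `generate(buildStandalonePrompt(...), fetch)`, and the rewritten question would then be used for retrieval and for `buildAnswerPrompt`. When the page is served from a different origin, Ollama also has to be configured to accept requests from it.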

In simpler terms, he made a website that can have conversations about documents without needing the internet, by using smart software running completely on your computer.

Useful for businesses and enterprise developers

Lee’s local concept could prove useful for businesses and enterprise developers. By moving away from the cloud and onto local devices, it could reduce operating expenses, especially at scale.

It would also allow applications to become highly customizable as users can adopt fine-tuned models built using proprietary in-house data.

And since nothing leaves the premises when processed locally, it alleviates privacy concerns and reduces the risk of data breaches.

Lee said such systems “will become more and more common” as new models are being made smaller and faster, enabling them to run more easily on local devices.

But to make it even easier for non-technical users to create something like this, Lee proposed the idea of a new browser API where a web app can request access to a locally running LLM, like a Chrome extension.

“I’m extremely excited for the future of LLM-powered web apps and how tech like Ollama and LangChain can facilitate incredible new user interactions,” Lee wrote.

Lee’s concept adds to a growing list of ways AI is improving web app development. There’s MetaGPT, which lets users build apps with natural language: simply describe your desired app in text and it will generate it. There are also website builder platforms like CodeWP.ai that generate HTML code for sites like WordPress, and new developer environment tools that generate code, like GitHub Copilot and Replit AI. Even Google is getting in on the act, creating Project IDX, a new development environment that gives users AI tools to try out.
