Meta Develops Invisible Watermarks to Track AI Image Origins

Stable Signature creates a watermark that remains in images even if they are later edited

At a Glance

- Meta has developed a method to embed watermarks into AI-generated images that are invisible to the human eye.

- The watermarks can be used to trace the source of an AI-generated image as Meta raises concerns about misuse and deepfakes.

Since AI image generation captured the public's fascination in mid-2022 with the release of DALL-E, researchers have been developing ways to identify AI-made images and to protect images from AI manipulation. Google DeepMind developed SynthID, a watermarking tool. MIT scientists developed PhotoGuard, which shields images against AI editing. And now Meta has thrown its hat into the ring with Stable Signature.

Detailed in a paper titled ‘The Stable Signature: Rooting Watermarks in Latent Diffusion Models,’ the system from Meta’s researchers embeds a secret binary signature in every image generated by a latent diffusion model such as Stable Diffusion, creating a watermark for AI-generated images.

Developed with France's National Institute for Research in Digital Science and Technology (Inria), the watermark is invisible to the naked eye but can be detected by algorithms. Meta says its marks can even be detected if the image is edited by a human post-generation.

The Facebook parent said it is exploring ways to incorporate the Stable Signature research into its line of open source generative AI models, like its flagship Llama 2 model. It is also exploring ways to expand the Stable Signature to other modalities, like video.

“By continuing to invest in this research, we believe we can chart a future where generative AI is used responsibly for exciting new creative endeavors,” a company blog post reads.

While it does work with popular open source models like Stable Diffusion, Stable Signature does have its limitations. For starters, it does not work on non-latent generative models.

Stable Signature can be accessed via GitHub. However, it cannot currently be used for commercial purposes. It was published under a CC BY-NC 4.0 license, which permits only non-commercial use, meaning it cannot be built into a for-profit product or solution.

How Stable Signature works

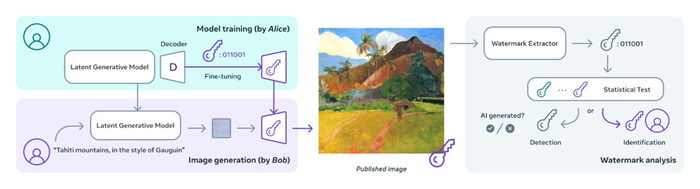

The Stable Signature works by fine-tuning the decoder part of a latent diffusion model like Stable Diffusion to embed a hidden binary signature into the generated image.

To achieve this, the researchers first pre-train a watermark extractor network using a standard deep learning watermarking method. They then fine-tune the decoder with an added loss term that encourages the watermark extracted from generated images to match a target signature.

The process results in a watermark that is designed to remain detectable even if the image is cropped or otherwise edited. Because the signature is built into the decoder, embedding it adds no separate step to the generation process.

The Stable Signature method encompasses a two-step process. Meta explains:

“First, two convolutional neural networks are jointly trained. One encodes an image and a random message into a watermark image, while the other extracts the message from an augmented version of the watermark image. The objective is to make the encoded and extracted messages match. After training, only the watermark extractor is retained.

“Second, the latent decoder of the generative model is fine-tuned to generate images containing a fixed signature. During this fine-tuning, batches of images are encoded, decoded, and optimized to minimize the difference between the extracted message and the target message, as well as to maintain perceptual image quality. This optimization process is fast and effective, requiring only a small batch size and a short time to achieve high-quality results.”
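The second step's objective can be illustrated with a toy calculation. The following is a minimal sketch, not Meta's implementation: it assumes the extractor outputs one raw score (logit) per bit, uses binary cross-entropy for the message term, and substitutes plain mean squared error for the perceptual quality term. The function names and the `quality_weight` parameter are hypothetical, and images are represented as flat lists of pixel values for simplicity.

```python
import math

def message_loss(logits, target_bits):
    """Mean binary cross-entropy between extractor scores and the target signature."""
    total = 0.0
    for z, bit in zip(logits, target_bits):
        # Numerically stable form of BCE-with-logits: max(z, 0) - bit*z + log(1 + e^-|z|)
        total += max(z, 0.0) - bit * z + math.log1p(math.exp(-abs(z)))
    return total / len(target_bits)

def image_loss(original, watermarked):
    """Mean squared error, an assumed stand-in for a perceptual quality loss."""
    return sum((a - b) ** 2 for a, b in zip(original, watermarked)) / len(original)

def fine_tuning_loss(logits, target_bits, original, watermarked, quality_weight=1.0):
    """Combined objective: match the signature while staying close to the original image."""
    return message_loss(logits, target_bits) + quality_weight * image_loss(original, watermarked)

# Toy example: a 4-bit signature and a 3-pixel "image" (illustrative values only).
signature = [1, 0, 1, 1]
confident_scores = [6.0, -6.0, 6.0, 6.0]   # extractor strongly agrees with the signature
print(fine_tuning_loss(confident_scores, signature, [0.2, 0.5, 0.9], [0.2, 0.5, 0.9]))
```

In a real training loop, this scalar would be minimized by gradient descent over the decoder's weights only, with the extractor kept frozen. The weighting between the two terms controls the trade-off between how reliably the signature can be read and how visible the change to the image is.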

In addition, methods such as DreamBooth or ControlNet do not affect the watermark, because they modify the latent diffusion model and leave the decoder unchanged.
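Checking an image for the signature comes down to comparing the bits recovered by the extractor with the known signature. One common way to make that decision, sketched here under stated assumptions rather than as Meta's exact procedure, is to count matching bits and ask how likely that count would be by pure chance. The 48-bit length, the three flipped bits and the `max_fpr` threshold below are illustrative choices, and all function names are hypothetical.

```python
from math import comb

def matching_bits(extracted, signature):
    """Count positions where the extracted bits equal the signature bits."""
    if len(extracted) != len(signature):
        raise ValueError("bit strings must have the same length")
    return sum(1 for a, b in zip(extracted, signature) if a == b)

def false_positive_rate(num_bits, threshold):
    """Chance of at least `threshold` matches if every bit matched with probability 1/2."""
    return sum(comb(num_bits, i) for i in range(threshold, num_bits + 1)) / 2 ** num_bits

def is_watermarked(extracted, signature, max_fpr=1e-6):
    """Flag the image when the match count is too high to be plausible by chance."""
    matches = matching_bits(extracted, signature)
    return false_positive_rate(len(signature), matches) <= max_fpr

# Illustrative 48-bit signature; an edited image might flip a few extracted bits.
signature = [1, 0] * 24
edited = signature.copy()
for position in (3, 17, 40):
    edited[position] ^= 1                      # simulate three bit errors after editing

print(matching_bits(edited, signature))        # 45 of 48 bits still match
print(is_watermarked(edited, signature))       # True: 45 matches is very unlikely by chance
print(is_watermarked([0] * 48, signature))     # False: 24 matches is what chance would give
```

Tolerating a few wrong bits is what lets a check like this survive edits to the image, while the chance-based threshold keeps unrelated images from being flagged.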

Potential business applications

AI image generation was the wind that blew generative AI into the mainstream. Models like Stable Diffusion and DALL-E captured the imagination of the public starting in mid-2022, and AI-generated images later fooled social media users, most famously with pictures of the Pope in a puffer jacket.

But as Meta warns, there is a nefarious side. The viral image of an explosion near the Pentagon turned out to be AI-generated, with the team behind Stable Signature warning that AI image generation can lead to “potential misuse from bad actors who may intentionally generate images to deceive people.”

Employing a tool like Stable Signature could allow fact-checkers to check the origin of an image. It could also be used by businesses to detect if one of their AI-generated images is being used elsewhere, like a news image being used by a rival publication without permission.

Stock image libraries that offer AI-generated images, like Adobe, Shutterstock and Getty Images, could employ Stable Signature to track how their models are being used after distribution, and to trace harmful deepfakes or copyright violations back to specific users.

It could even have an impact on the art world – allowing people who run art competitions or galleries to determine whether a work is AI-generated or not.

A tool like Stable Signature could help businesses deal with emerging synthetic media challenges posed by generative AI. Its code is available via GitHub, though it is still early in its development and may need refining before it can be helpful in monitoring AI-generated content, especially beyond images.
